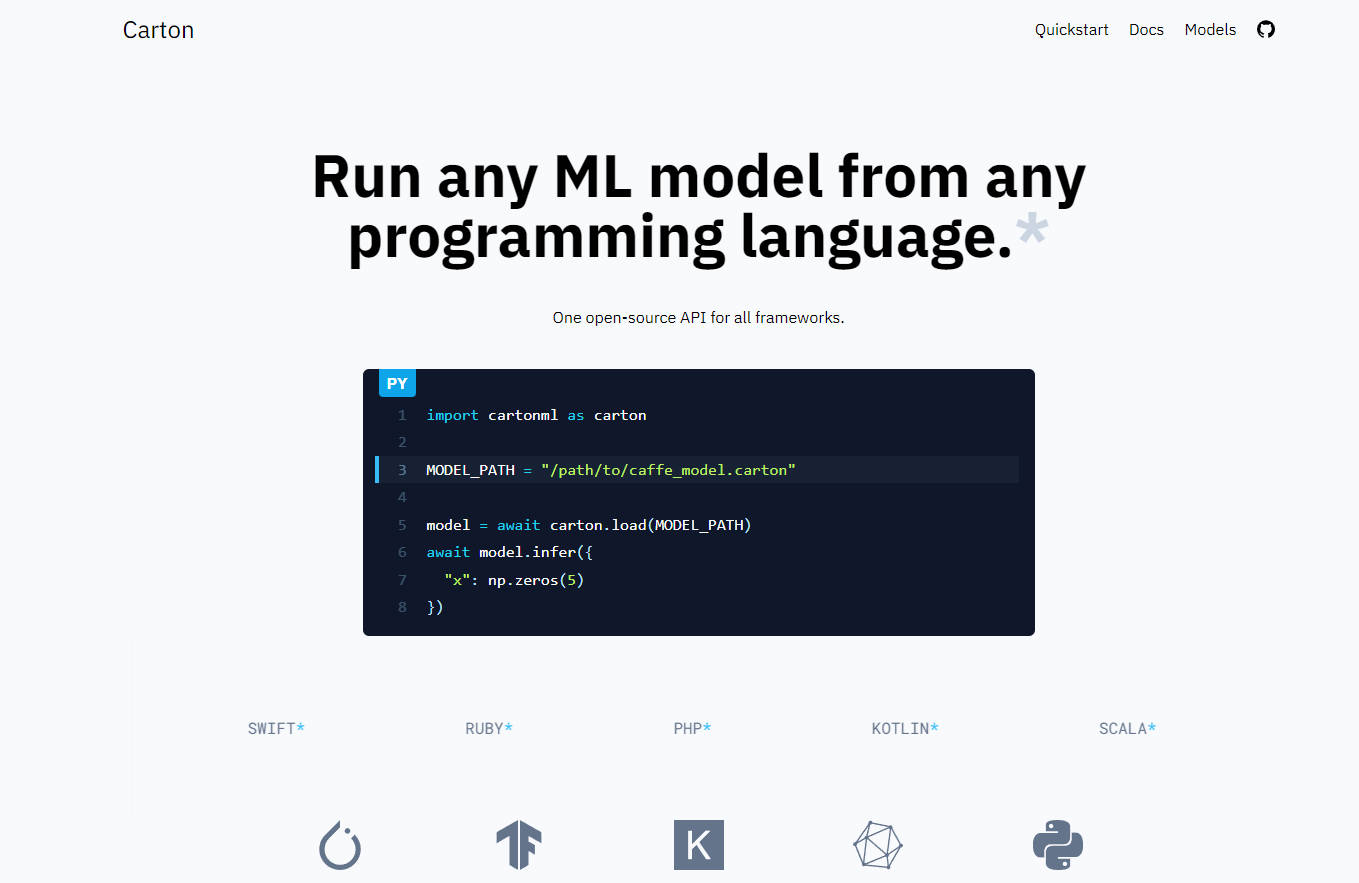

What is Carton?

Carton is a tool that lets users run machine learning (ML) models from any programming language. It decouples inference code from specific ML frameworks, which makes it easier to keep up with new frameworks and models. Carton has low overhead and supports x86_64 Linux and macOS, aarch64 Linux and macOS, and WebAssembly.

Key Features:

- Decouples ML framework implementation: Carton allows users to run ML models without being tied to specific frameworks such as Torch or TensorFlow.

- Low overhead: Preliminary benchmarks show an overhead of less than 100 microseconds per inference call.

- Platform support: Carton currently supports x86_64 Linux and macOS, aarch64 Linux and macOS, and WebAssembly.

- Packaging without modification: A carton is the output of the packing step; it contains the original model plus metadata. Packing does not modify the original model, which avoids error-prone conversion steps.

- Custom ops support: Carton uses the underlying framework (e.g., PyTorch) to execute models, so custom ops and integrations such as TensorRT can be used without changes.

- Future ONNX support: Carton wraps models rather than converting them as ONNX does. Support for running ONNX models within Carton is planned, which would enable use cases such as running models in the browser with WASM.
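The "packaging without modification" idea can be illustrated with a minimal sketch. This is not Carton's actual file format or API: the function names `pack_model` and `unpack_model`, the archive layout, and the metadata keys are all hypothetical, chosen only to show that wrapping a model alongside metadata leaves its bytes untouched.

```python
import hashlib
import io
import json
import zipfile
from typing import Tuple


def pack_model(model_bytes: bytes, metadata: dict) -> bytes:
    """Bundle the unmodified model bytes with a metadata file.

    Hypothetical layout for illustration only, not Carton's real format.
    """
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        # The model is stored exactly as given: no conversion step.
        zf.writestr("model/original.bin", model_bytes)
        zf.writestr("metadata.json", json.dumps(metadata, sort_keys=True))
    return buf.getvalue()


def unpack_model(packed: bytes) -> Tuple[bytes, dict]:
    """Recover the original model bytes and the metadata from a package."""
    with zipfile.ZipFile(io.BytesIO(packed)) as zf:
        model_bytes = zf.read("model/original.bin")
        metadata = json.loads(zf.read("metadata.json"))
    return model_bytes, metadata


# Placeholder bytes standing in for a serialized model.
original = b"\x00pretend-these-are-serialized-weights\xff"
packed = pack_model(original, {"runner": "torchscript", "framework_version": "2.0.1"})
restored, meta = unpack_model(packed)

# The checksum of the unpacked model matches the original byte for byte.
same_bytes = hashlib.sha256(restored).digest() == hashlib.sha256(original).digest()
```

Because the model is only wrapped, whatever framework produced it can still load it after unpacking, which is the property the feature list attributes to cartons.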

Use Cases:

1. Fast experimentation: By decoupling inference code from specific frameworks and reducing conversion steps, Carton enables faster experimentation with different ML models.

2. Deployment flexibility: Carton runs on Linux and macOS on both x86_64 and aarch64, which gives flexibility in deploying ML models across different environments.

3. Custom operation integration: Because models run on their original framework, custom ops and integrations such as TensorRT remain available, which makes it easier for developers to optimize their ML workflows for their specific requirements.

4. In-browser ML: With planned ONNX support and the WebAssembly target, Carton could be used to run ML models directly in web browsers, opening up possibilities for browser-based applications that need machine learning capabilities.
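The decoupling behind the first use case can be pictured with a small sketch. It assumes a hypothetical registry of "runners" keyed by a name stored in the package metadata. The names `RUNNERS`, `register_runner`, and `infer` are illustrative and are not Carton's API, and the two runners are trivial stand-ins for real ML frameworks.

```python
from typing import Callable, Dict, List

Tensor = List[float]
Runner = Callable[[bytes, Dict[str, Tensor]], Dict[str, Tensor]]

# Hypothetical registry mapping a runner name to the code that executes a model.
RUNNERS: Dict[str, Runner] = {}


def register_runner(name: str) -> Callable[[Runner], Runner]:
    """Decorator that registers a runner under the given name."""
    def decorator(fn: Runner) -> Runner:
        RUNNERS[name] = fn
        return fn
    return decorator


@register_runner("double")
def _double_runner(model: bytes, inputs: Dict[str, Tensor]) -> Dict[str, Tensor]:
    # Stand-in for one framework: doubles every input value.
    return {name: [2 * x for x in values] for name, values in inputs.items()}


@register_runner("negate")
def _negate_runner(model: bytes, inputs: Dict[str, Tensor]) -> Dict[str, Tensor]:
    # Stand-in for a different framework: negates every input value.
    return {name: [-x for x in values] for name, values in inputs.items()}


def infer(package: dict, inputs: Dict[str, Tensor]) -> Dict[str, Tensor]:
    """Run inference; the caller never names a framework directly."""
    runner = RUNNERS[package["runner"]]
    return runner(package["model"], inputs)


# The calling code is identical for both packages; only the metadata differs.
inputs = {"x": [1.0, 2.0]}
out_a = infer({"runner": "double", "model": b""}, inputs)  # {"x": [2.0, 4.0]}
out_b = infer({"runner": "negate", "model": b""}, inputs)  # {"x": [-1.0, -2.0]}
```

Swapping the model for one built with a different framework changes only the package, not the inference call, which is what makes experimenting with different models cheap.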