What is FriendliAI?

Building and serving generative AI models shouldn’t break the bank or slow you down. That’s where FriendliAI comes in. Whether you’re a startup, enterprise, or AI developer, FriendliAI offers cutting-edge generative AI infrastructure that accelerates inference, slashes GPU costs, and simplifies deployment. With Friendli Engine at its core, the platform delivers groundbreaking performance, enabling you to focus on innovation while it handles the heavy lifting.

Key Features

🚀 Accelerate AI Inference

Friendli Engine boosts generative AI performance, delivering 10.7x higher throughput and 6.2x lower latency compared to competitors. This means faster responses for your users and smoother operations for your team.
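If you want to check throughput and latency figures like these on your own workload, the two numbers usually reported are time to first token (TTFT) and decode throughput in tokens per second. The sketch below is a generic measurement helper, assuming only that you can iterate over a streamed response token by token. It is not a FriendliAI API; `measure_stream` and `StreamStats` are hypothetical names.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class StreamStats:
    ttft_s: float        # seconds from the start of timing to the first token
    tokens: int          # number of tokens received
    tokens_per_s: float  # decode throughput measured after the first token


def measure_stream(tokens: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> StreamStats:
    """Time a token stream; the clock starts when this function is called."""
    start = clock()
    # Record the arrival time of each token as the stream yields it.
    stamps = [clock() for _ in tokens]
    if not stamps:
        raise ValueError("stream produced no tokens")
    ttft = stamps[0] - start
    decode_time = stamps[-1] - stamps[0]
    # Throughput counts only the tokens generated after the first one.
    tps = (len(stamps) - 1) / decode_time if decode_time > 0 else float("nan")
    return StreamStats(ttft, len(stamps), tps)


# Usage with a fake clock so the numbers are deterministic.
ticks = iter([0.0, 0.5, 0.6, 0.7])
stats = measure_stream(["Hello", ",", " world"], clock=lambda: next(ticks))
# stats.ttft_s is 0.5 and stats.tokens_per_s is about 10
```

Injecting the clock keeps the helper testable; with a real client you would pass the streaming iterator and leave the default `time.perf_counter`.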

💸 Save Up to 90% on GPU Costs

By optimizing GPU usage with techniques like Iteration Batching and Native Quantization, FriendliAI reduces the number of GPUs needed by up to 6x, helping you cut costs without compromising performance.
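To make the batching idea concrete, here is a toy simulation of iteration-level (often called continuous) batching compared with static batching. It is a sketch of the general technique, not Friendli Engine's scheduler: it counts decoding iterations only, ignores prefill cost and memory limits, and the function names are hypothetical. Each request is represented by the number of tokens it still needs to generate.

```python
from collections import deque


def static_batching_steps(lengths: list[int], max_batch: int) -> int:
    """Each batch runs until its longest request finishes; nobody joins mid-batch."""
    steps = 0
    for i in range(0, len(lengths), max_batch):
        steps += max(lengths[i:i + max_batch])
    return steps


def iteration_batching_steps(lengths: list[int], max_batch: int) -> int:
    """Re-form the batch at every decoding iteration (iteration-level scheduling)."""
    waiting = deque(lengths)
    running: list[int] = []  # remaining tokens for each in-flight request
    steps = 0
    while waiting or running:
        # Fill any free slots with waiting requests before the next iteration.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decoding iteration: every running request emits one token.
        running = [r - 1 for r in running]
        steps += 1
        # Finished requests leave immediately, freeing their slot.
        running = [r for r in running if r > 0]
    return steps


# One long request plus seven short ones, at most two requests per batch.
lengths = [8, 1, 1, 1, 1, 1, 1, 1]
print(static_batching_steps(lengths, 2), iteration_batching_steps(lengths, 2))  # 11 8
```

Static batching keeps a slot idle while the long request finishes; iteration-level scheduling hands that slot to the next waiting request, so the same GPU does the same work in fewer iterations.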

🔧 Serve Custom Models Effortlessly

Upload your own models or import from Hugging Face or Weights & Biases. Fine-tune them with Parameter-Efficient Fine-Tuning (PEFT) and deploy with Multi-LoRA serving, all in one platform.

🌐 Seamless Real-Time RAG Integration

Keep your AI agents up-to-date with Retrieval-Augmented Generation (RAG). Integrate predefined tools or add your own to build powerful, context-aware AI systems.
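RAG works by retrieving relevant documents at request time and placing them in the prompt, so answers can reflect information the model was not trained on. The toy sketch below shows the retrieve-then-prompt step. It uses word-count cosine similarity as a stand-in for an embedding model and vector index, and none of these functions are FriendliAI APIs; the names and prompt template are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the user question with retrieved context before calling the model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Iteration batching schedules requests per decoding step.",
    "Quantization stores weights in fewer bits.",
    "Autoscaling adds replicas under load.",
]
print(build_prompt("How does quantization store weights?", docs, k=1))
```

Keeping the knowledge base outside the model means updating it is a data change, not a retraining job.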

🔒 Maximum Security and Compliance

Whether you choose Friendli’s cloud or your own infrastructure, your data stays secure. Built-in autoscaling ensures optimal performance during peak times, while dedicated GPU resources guarantee consistent reliability.

Use Cases

Personalized Chatbots

NextDay AI used Friendli Container to cut GPU costs by 50% while processing 0.5 trillion tokens monthly. Their chatbot is now ranked among the top 20 generative AI products by Andreessen Horowitz (a16z).

Enterprise-Grade AI Agents

SK Telecom, South Korea’s leading telecom provider, relies on Friendli Dedicated Endpoints to serve LLMs with 5x higher throughput and 3x cost savings, all while maintaining strict SLAs.

Real-Time AI Applications

ScatterLab’s “Zeta” app, a top 10 mobile app for South Korean teens, uses Friendli Container to handle 17x more parameters in real time, delivering fast and reliable responses.

Why Choose FriendliAI?

Proven Performance: Achieve 10x faster token generation and 5x faster initial response times compared to competitors like vLLM.

Flexible Deployment: Choose from Dedicated Endpoints, Container, or Serverless Endpoints to match your needs.

Enterprise-Ready: Enjoy guaranteed SLAs, autoscaling, and dedicated GPU resources for mission-critical applications.

Cost Efficiency: Reduce GPU costs by up to 90% while maintaining top-tier performance.
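Autoscaling generally means adjusting the number of model replicas to the current load. The page does not describe FriendliAI's policy, so the sketch below shows a common target-tracking rule instead, similar in spirit to the Kubernetes Horizontal Pod Autoscaler formula. `desired_replicas`, the in-flight-request signal, and the default bounds are all hypothetical.

```python
import math


def desired_replicas(in_flight: int, target_per_replica: int,
                     min_replicas: int = 1, max_replicas: int = 8) -> int:
    """Target tracking: pick enough replicas to keep per-replica load near the target.

    in_flight: requests currently queued or running (hypothetical load signal).
    target_per_replica: load one replica should carry (hypothetical tuning knob).
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    needed = math.ceil(in_flight / target_per_replica)
    # Clamp to the configured bounds so a traffic spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, needed))


for load in (0, 10, 33, 500):
    print(load, desired_replicas(load, target_per_replica=16))
# 0 1, 10 1, 33 3, 500 8
```

Production autoscalers usually add cooldown windows so that short bursts do not make the replica count oscillate; setting `min_replicas=0` would model scale-to-zero.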

FAQ

Q: Can I use my own models with FriendliAI?

A: Absolutely! You can upload custom models or import them from Hugging Face or Weights & Biases. Fine-tune them with PEFT and deploy with Multi-LoRA serving.

Q: How does FriendliAI reduce GPU costs?

A: Through advanced optimizations like Iteration Batching, Native Quantization, and intelligent autoscaling, FriendliAI reduces the number of GPUs needed by up to 6x, saving you up to 90% in costs.
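Quantization reduces memory by storing weights in fewer bits. For example, moving from 16-bit to 8-bit weights halves the memory the weights occupy, which is one way a model can fit on fewer GPUs. The details of Native Quantization are not given here, so the sketch below shows generic symmetric per-tensor int8 quantization as an illustrative assumption; the function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.5, -1.27, 0.0, 1.27]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 127]
# Each reconstructed weight is within scale / 2 of the original.
```

Production schemes typically use finer-grained scales (per channel or per group) to keep the rounding error low on large tensors.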

Q: Is FriendliAI secure?

A: Yes. Whether you use Friendli’s cloud or your own infrastructure, robust security measures ensure your data stays protected.

Q: What models does FriendliAI support?

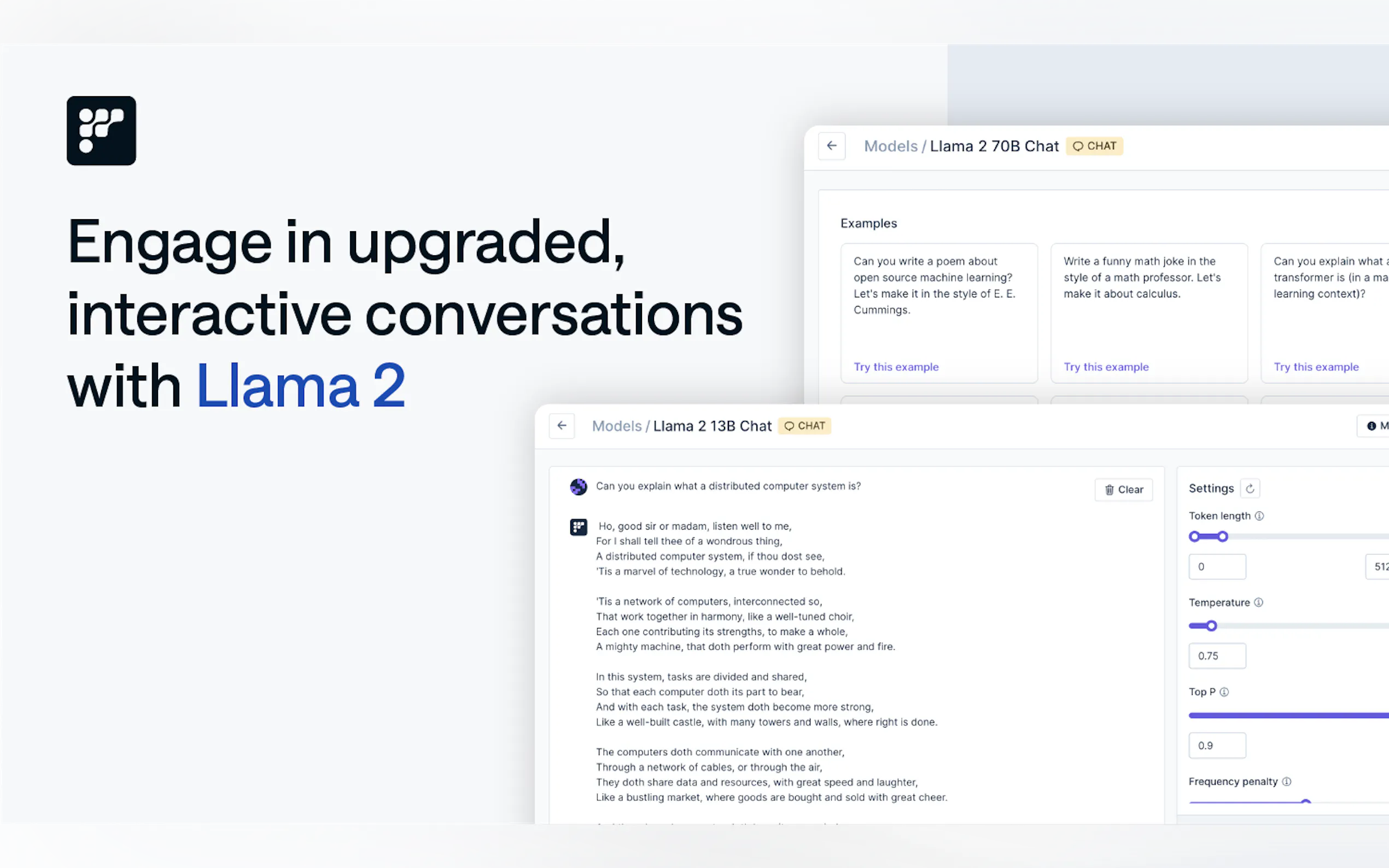

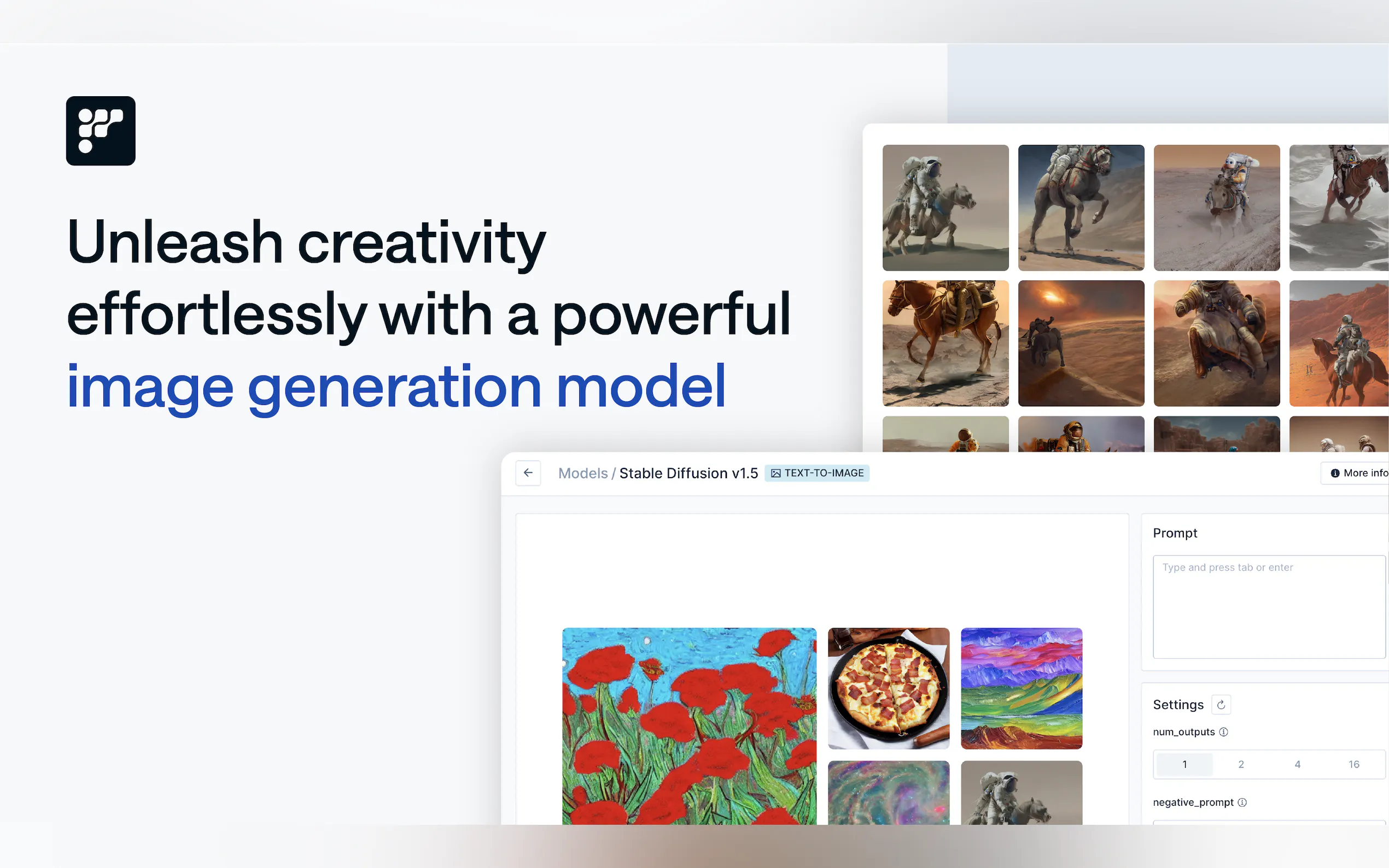

A: FriendliAI supports 100+ models, including Llama 3.1, Mixtral, Gemma, and more. It also supports custom models tailored to your needs.

Ready to Transform Your AI Workflow?

FriendliAI is more than just a platform—it’s your partner in building and scaling generative AI applications. Whether you’re creating chatbots, AI agents, or real-time RAG systems, FriendliAI delivers unmatched performance, cost savings, and ease of use.

👉 Get started for free and see how FriendliAI can supercharge your generative AI efforts today!

FriendliAI Alternatives

Build gen AI models with Together AI. Benefit from the fastest and most cost-efficient tools and infra. Collaborate with our expert AI team that’s dedicated to your success.

Use a state-of-the-art, open-source model or fine-tune and deploy your own at no additional cost, with Fireworks.ai.

Personalize your chat experience with multiple AI models, manage and collaborate with your team, and create your own LLM agents without a dev team. The best part is that you pay only for what you use; no subscription is needed!

Privately tune and deploy open models using reinforcement learning to achieve frontier performance.