What is gpt-oss?

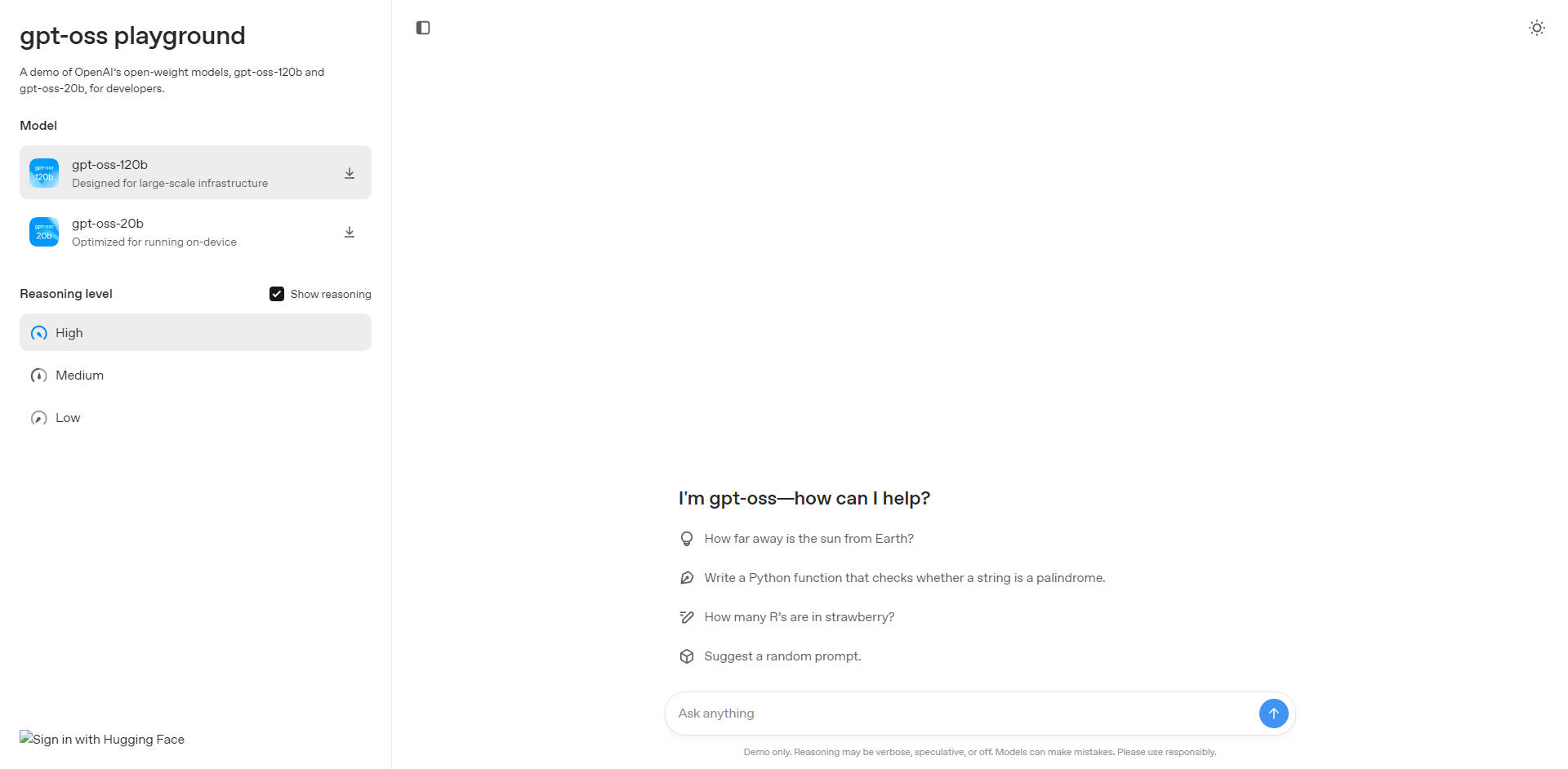

OpenAI is introducing gpt-oss-120b and gpt-oss-20b, two powerful and highly efficient open-source language models. Built for developers, researchers, and enterprises, they address a critical challenge: accessing state-of-the-art AI performance without being confined to proprietary APIs. Because they are licensed under the permissive Apache 2.0 license, you can run, customize, and fine-tune these models directly on your own infrastructure, from high-end servers to consumer-grade hardware.

Key Features

🚀 Dual Models for Scalable Performance: Choose the model that fits your needs. gpt-oss-120b delivers reasoning performance on par with leading proprietary models such as OpenAI's o4-mini and runs efficiently on a single 80GB GPU. For maximum accessibility, gpt-oss-20b offers impressive capabilities on edge devices and hardware with as little as 16GB of memory, making it ideal for local and on-device applications.

🤖 Superior Tool Use and Reasoning: These models are engineered for complex, agentic workflows. They excel at following instructions, at using tools such as web search and Python code execution, and at Chain-of-Thought (CoT) reasoning. This makes them a robust foundation for AI agents that interact with external systems to complete tasks.
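At its core, tool use is a loop. The model emits a structured tool call, the host application runs the tool, and the result is fed back to the model for its next turn. The minimal Python sketch below shows only the host-side dispatch step. It assumes a hypothetical JSON call format (`{"name": ..., "arguments": {...}}`) and hypothetical tools `add` and `web_search`. gpt-oss's real tool calls are expressed in OpenAI's harmony response format, so they would need to be parsed into this shape first.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tools: names and signatures are illustrative only.
def add(a: float, b: float) -> float:
    """Toy stand-in for a real tool such as Python code execution."""
    return a + b

def web_search(query: str) -> str:
    """Stub: a real agent would call a search backend here."""
    return f"(no search backend configured) results for: {query}"

TOOLS: Dict[str, Callable[..., Any]] = {"add": add, "web_search": web_search}

def dispatch(tool_call_json: str) -> str:
    """Run one tool call in the assumed {"name": ..., "arguments": {...}} form
    and return a JSON string to feed back to the model."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        result = TOOLS[name](**call.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments
        return json.dumps({"error": str(exc)})
    return json.dumps({"name": name, "result": result})

print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
```

Errors are returned as data rather than raised. This lets the model see what went wrong and retry, which is a common choice in agent loops.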

⚙️ Optimized for Efficiency with MoE: gpt-oss models use a Mixture-of-Experts (MoE) architecture, a key difference from traditional dense models. Only a fraction of their total parameters is activated for each token (5.1B for gpt-oss-120b, 3.6B for gpt-oss-20b). This drastically reduces the compute needed per token, although all experts must still be held in memory, and it underpins the models' exceptional performance-to-hardware ratio.
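To make the "fraction of parameters" idea concrete, here is a toy top-k MoE layer in plain Python. A router scores every expert, only the k highest-scoring experts run, and their outputs are mixed with softmax weights renormalized over the selected experts. This is one common MoE variant. The sizes (8 experts, k=2, 4-dimensional tokens) and the scaling "experts" are illustrative assumptions, not gpt-oss's actual configuration or routing code.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]

def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: Vector, router: List[Vector], experts: List[Expert], k: int) -> Vector:
    """Route a single token vector x to its top-k experts and mix their outputs."""
    # One router logit per expert: dot product of that expert's router row with x.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # weights over the selected experts only
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # only these k experts are ever evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out

# Toy setup: 8 "experts" that just scale their input, and a random router.
calls: List[int] = []

def make_expert(i: int) -> Expert:
    def expert(v: Vector) -> Vector:
        calls.append(i)  # record which experts actually ran
        return [(i + 1) * vi for vi in v]
    return expert

random.seed(0)
experts = [make_expert(i) for i in range(8)]
router = [[random.gauss(0.0, 1.0) for _ in range(4)] for _ in range(8)]
y = moe_forward([1.0, 0.5, -0.5, 2.0], router, experts, k=2)
print(f"experts evaluated: {len(calls)} of {len(experts)}")  # 2 of 8
```

The skipped experts cost no arithmetic for this token. They still occupy memory, though, because the router may select any of them for the next token.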

🛡️ Built-in Advanced Safety Standards: Safety is a core principle of this release. The models have undergone extensive safety training, including data filtering and alignment techniques that teach them to refuse harmful requests. They are held to the same internal safety benchmarks as OpenAI's most advanced proprietary models, providing a trustworthy foundation for your applications.

🔧 Fully Open and Customizable: With the Apache 2.0 license, you have the freedom to innovate. You can fine-tune the models on your private datasets for specialized tasks and inspect their complete, unsupervised Chain-of-Thought. This transparency is invaluable for research, debugging, and monitoring model behavior.

Unique Advantages

The gpt-oss models are not just another open-source release. They are designed to provide a distinct combination of performance, efficiency, and transparency that sets them apart.

Performance That Rivals Proprietary Systems: While many open models compromise on capability, gpt-oss-120b delivers results competitive with, and in some cases exceeding, leading proprietary models like OpenAI's o4-mini. On challenging benchmarks for health-related queries (HealthBench) and competition math (AIME), gpt-oss models outperform even top-tier systems like o1 and GPT-4o.

Unprecedented Efficiency for Its Size: Dense models activate every parameter for every token, which makes them computationally expensive. gpt-oss instead uses its MoE architecture to deliver top-tier performance on accessible hardware. Running a 120-billion-parameter-class model on a single 80GB GPU, or a 20-billion-parameter model on a laptop, was previously out of reach for most developers.
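A back-of-the-envelope estimate shows why these hardware claims are plausible: weight memory is roughly parameters × bits per parameter / 8. This sketch assumes total parameter counts of about 117B and 21B, which are the figures in OpenAI's model card and are not stated on this page. It also assumes about 4.25 bits per parameter for MXFP4 (4-bit values plus one shared 8-bit scale per block of 32). It counts weights only and ignores the KV cache, activations, and runtime overhead. In gpt-oss only part of the weights use this low-bit format, so treat the results as rough.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory needed for model weights alone, in decimal GB (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

# Assumed storage formats: MXFP4 stores 4-bit values plus one 8-bit scale per
# 32-value block; BF16 stores 16 bits per value.
MXFP4_BITS = 4 + 8 / 32  # 4.25 bits per parameter
BF16_BITS = 16

# Assumed total parameter counts (from OpenAI's model card, not this page).
MODELS = {"gpt-oss-120b": 117e9, "gpt-oss-20b": 21e9}

for name, params in MODELS.items():
    q = weight_memory_gb(params, MXFP4_BITS)
    full = weight_memory_gb(params, BF16_BITS)
    print(f"{name}: ~{q:.1f} GB at 4.25 bits/param, ~{full:.1f} GB at 16 bits/param")
# gpt-oss-120b: ~62.2 GB at 4.25 bits/param, ~234.0 GB at 16 bits/param
# gpt-oss-20b: ~11.2 GB at 4.25 bits/param, ~42.0 GB at 16 bits/param
```

Under these assumptions, the 16-bit figure for the 120b model would not fit on a single 80GB GPU. This suggests the low-bit weight format matters as much as the MoE design on the memory side. MoE mainly cuts compute per token.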

The Only OpenAI Models with Unsupervised CoT: While OpenAI's API models have aligned reasoning, gpt-oss models are intentionally released with an unsupervised Chain-of-Thought (CoT). This approach provides an unfiltered view into the model's reasoning process and is an essential tool for researchers and developers focused on safety, alignment, and interpretability.

A Pedigree of State-of-the-Art Training: These models are a direct result of the same advanced post-training and reinforcement learning techniques used to create OpenAI's most capable proprietary reasoning models. You get the benefits of a world-class training methodology in a fully open and adaptable package.

Conclusion

The gpt-oss-120b and gpt-oss-20b models represent a significant step forward in democratizing access to powerful AI. They bridge the gap between the flexibility of open-source and the raw capability of state-of-the-art proprietary systems. Whether you're a solo developer prototyping on a laptop, a researcher pushing the boundaries of AI safety, or an enterprise deploying solutions on-premises, these models provide the power, efficiency, and control you need to build the next generation of AI applications.

Explore how gpt-oss can accelerate your work today!

FAQ

1. What is the main difference between the gpt-oss-120b and gpt-oss-20b models? The primary difference is the trade-off between performance and hardware requirements. gpt-oss-120b is the more powerful model, designed for maximum reasoning and task-completion ability, and is optimized to run on a single 80GB GPU. gpt-oss-20b is designed for maximum efficiency and accessibility, offering strong performance on consumer-grade hardware with as little as 16GB of memory, making it perfect for on-device or edge computing scenarios.

2. How do these open models compare to using OpenAI's APIs? gpt-oss models are ideal when you require full control or customization, or need to run models in a local or private environment for data security. You can fine-tune them extensively and inspect their inner workings. OpenAI's API models remain the best choice if you need a fully managed service, multimodal capabilities (like vision), built-in tool integrations, and seamless platform updates without managing infrastructure.

3. What does "unsupervised Chain-of-Thought (CoT)" mean for me as a developer? It means you have access to the model's raw, step-by-step "thinking" before it produces a final answer. This is invaluable for debugging, understanding model behavior, and conducting safety research. However, because the CoT is unfiltered, it should not be shown to end users: it may contain inaccuracies or content that does not meet the safety standards applied to final outputs.
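In practice, keeping the CoT away from users means separating it from the answer before display. gpt-oss is trained on OpenAI's harmony response format. In that format, reasoning is emitted on an `analysis` channel and the user-facing answer on a `final` channel. The simplified Python sketch below assumes raw completion text in that format, using only the special tokens that appear in the code. A real application should prefer the harmony parsing provided by its inference stack.

```python
import re
from typing import List, Tuple

# Assumption: each assistant message looks like
#   <|channel|>HEADER<|message|>TEXT
# terminated by <|end|>, <|return|>, <|call|>, or the end of the string.
_MSG = re.compile(
    r"<\|channel\|>(.*?)<\|message\|>(.*?)(?:<\|end\|>|<\|return\|>|<\|call\|>|$)",
    re.DOTALL,
)

def split_channels(raw: str) -> List[Tuple[str, str]]:
    """Return (channel, text) pairs in order; the channel is the header's first word."""
    return [((header.split() or [""])[0], text) for header, text in _MSG.findall(raw)]

def user_visible(raw: str) -> str:
    """Keep only the 'final' channel; 'analysis' holds the raw chain of thought."""
    return "".join(text for channel, text in split_channels(raw) if channel == "final")

raw = (
    "<|channel|>analysis<|message|>Simple arithmetic.<|end|>"
    "<|start|>assistant<|channel|>final<|message|>2 + 2 = 4.<|return|>"
)
print(user_visible(raw))  # 2 + 2 = 4.
```

The analysis text is still available from `split_channels` for logging or research. It is simply never passed to the user interface.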

Gpt-oss Alternatives

OLMo 2 32B: Open-source LLM rivals GPT-3.5! Free code, data & weights. Research, customize, & build smarter AI.

DeepCoder: 64K context code AI. Open-source 14B model beats expectations! Long context, RL training, top performance.

MonsterGPT: Fine-tune & deploy custom AI models via chat. Simplify complex LLM & AI tasks. Access 60+ open-source models easily.

GPT-NeoX-20B is a 20 billion parameter autoregressive language model trained on the Pile using the GPT-NeoX library.

Secure, shared GenAI workspace for teams. Unify top AI models, project knowledge & conversations. Collaborate confidently, build together, and boost productivity—all in one place.