What is LiveBench?

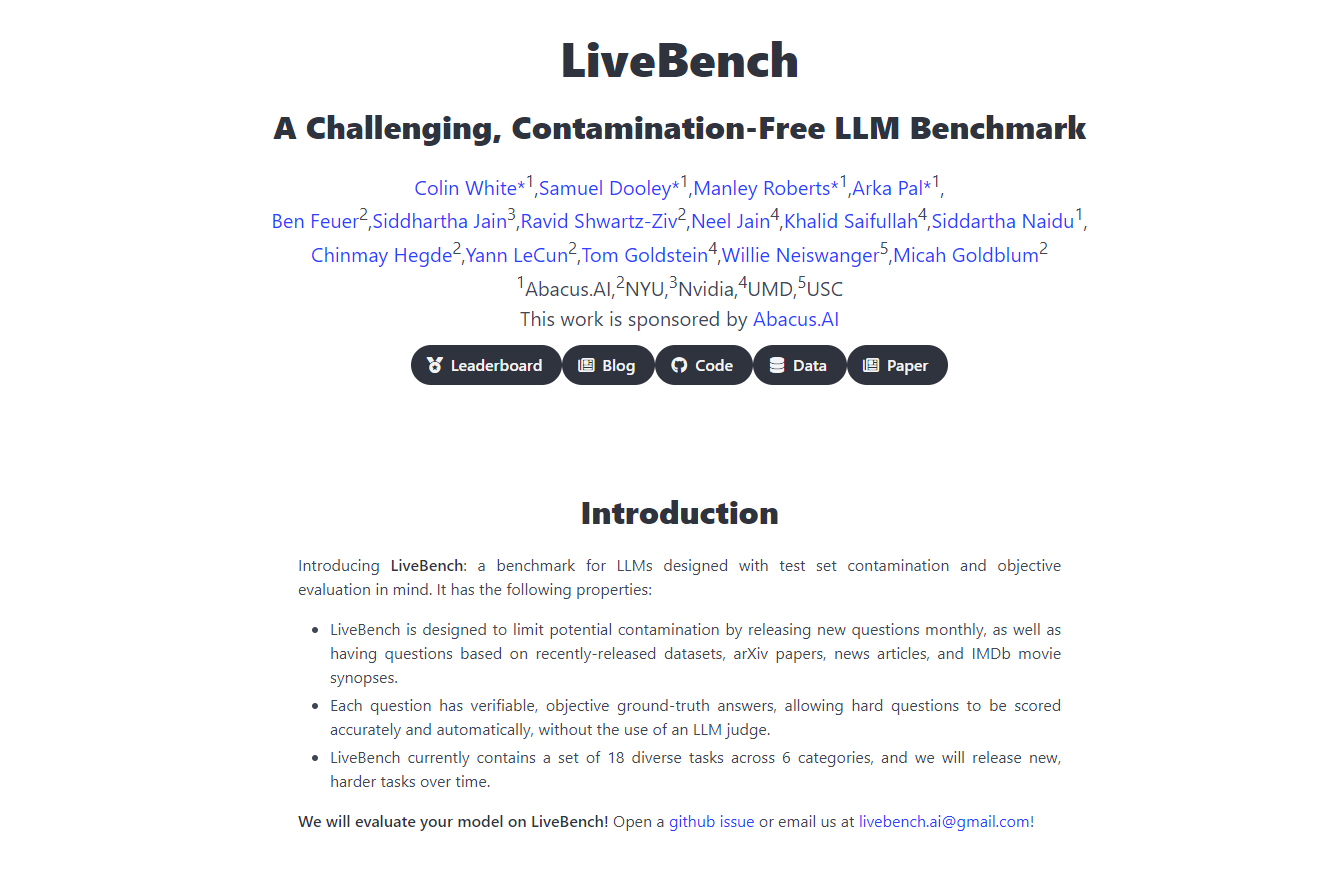

Introducing LiveBench AI, a revolutionary benchmarking platform crafted in collaboration with Yann LeCun and his team, designed to challenge and evaluate large language models (LLMs) like never before. This continuously updated benchmark introduces new challenges that models can't simply memorize, ensuring accurate and unbiased evaluations. It assesses LLMs across various dimensions including reasoning, programming, writing, and data analysis, providing a robust, fair, and comprehensive assessment framework that's crucial for AI development and deployment.

Key Features

Continuous Updates: LiveBench introduces new questions monthly, based on recent datasets, arXiv papers, news articles, and IMDb summaries, preventing memorization and ensuring ongoing evaluation of LLM capabilities.

Objective Scoring: Each question has a verifiable, objective answer, allowing for precise, automated scoring without the need for LLM judges, thus maintaining fairness in evaluation.

Diverse Task Range: Currently encompassing 18 different tasks across 6 categories, with new, more difficult tasks released over time to keep the benchmark challenging and relevant.

Anti-Contamination Design: Because LiveBench questions are drawn from recent sources and refreshed regularly, models are unlikely to have seen them during training, which limits test-set contamination and protects the integrity of the assessment.

Avoiding Evaluation Traps: The platform is designed to sidestep the pitfalls of traditional LLM evaluation methods, such as biased or mistaken judgments on answers to hard questions, by focusing on objective, verifiable correctness.
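The objective scoring described above can be illustrated with a minimal sketch. This is not LiveBench's actual scoring code: the `Question` record, the `normalize` helper, the exact-match rule, and the per-category averaging are all assumptions made for illustration. The point is only that a verifiable ground-truth answer lets a plain function assign the score, with no LLM judge involved.

```python
from dataclasses import dataclass


@dataclass
class Question:
    """Hypothetical record: a prompt with one verifiable ground-truth answer."""
    category: str
    prompt: str
    ground_truth: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences are ignored."""
    return " ".join(text.lower().split())


def score_answer(question: Question, model_answer: str) -> float:
    """Return 1.0 on an exact match after normalization, otherwise 0.0."""
    same = normalize(model_answer) == normalize(question.ground_truth)
    return 1.0 if same else 0.0


def category_averages(questions, answers):
    """Average the per-question scores within each category (assumed aggregation)."""
    scores = {}
    for question, answer in zip(questions, answers):
        scores.setdefault(question.category, []).append(score_answer(question, answer))
    return {category: sum(vals) / len(vals) for category, vals in scores.items()}


# Made-up questions and model answers, for illustration only.
questions = [
    Question("data analysis", "What is 12 * 12?", "144"),
    Question("data analysis", "What is 7 + 5?", "12"),
    Question("reasoning", "Which is larger, 9 or 11?", "11"),
]
answers = ["144", "13", " 11 "]

print(category_averages(questions, answers))
# prints {'data analysis': 0.5, 'reasoning': 1.0}
```

Real tasks would likely need task-specific checkers (for example, running unit tests for programming questions), but the principle is the same: the score comes from a deterministic comparison against a known answer.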

Use Cases

AI Research and Development: Researchers can use LiveBench to accurately gauge the performance of their LLMs against a dynamic set of challenges, driving improvements and innovations in AI.

Tech Company Benchmarking: Technology companies can employ LiveBench to compare the effectiveness of different LLMs, guiding decisions on which models to integrate into their products and services.

Educational Assessment: Educators can utilize the platform to teach and test students on the capabilities and limitations of LLMs, providing practical insights into AI assessment and development.

Conclusion

LiveBench AI stands at the forefront of AI benchmarking, offering a comprehensive, fair, and continuously evolving assessment tool for large language models. Its innovative approach ensures that LLM development is grounded in real-world challenges, leading to more robust and reliable AI technologies. Discover the true potential of AI with LiveBench AI – where the future of AI is tested and proven.

FAQs

What makes LiveBench unique compared to other AI benchmarks?
Unlike other benchmarks, LiveBench uses a dynamic set of challenges with clear, objective answers, updated monthly to prevent memorization, ensuring a continuous and accurate assessment of LLM capabilities.

How does LiveBench ensure the fairness of its evaluations?
LiveBench avoids biases and fairness issues by focusing on questions with verifiable, objective answers and by not relying on LLM judges for scoring, which keeps the evaluation process unbiased.

Can LiveBench be used for educational purposes?
Absolutely. LiveBench provides a practical, real-world dataset and challenges that can be used by educators to teach and test students on AI assessment, making it an invaluable educational resource.


LiveBench Alternatives


WildBench is an advanced benchmarking tool that evaluates LLMs on a diverse set of real-world tasks. It's essential for those looking to enhance AI performance and understand model limitations in practical scenarios.


BenchLLM: Evaluate LLM responses, build test suites, automate evaluations. Enhance AI-driven systems with comprehensive performance assessments.


Launch AI products faster with no-code LLM evaluations. Compare 180+ models, craft prompts, and test confidently.


Companies of all sizes use Confident AI to justify why their LLM deserves to be in production.
