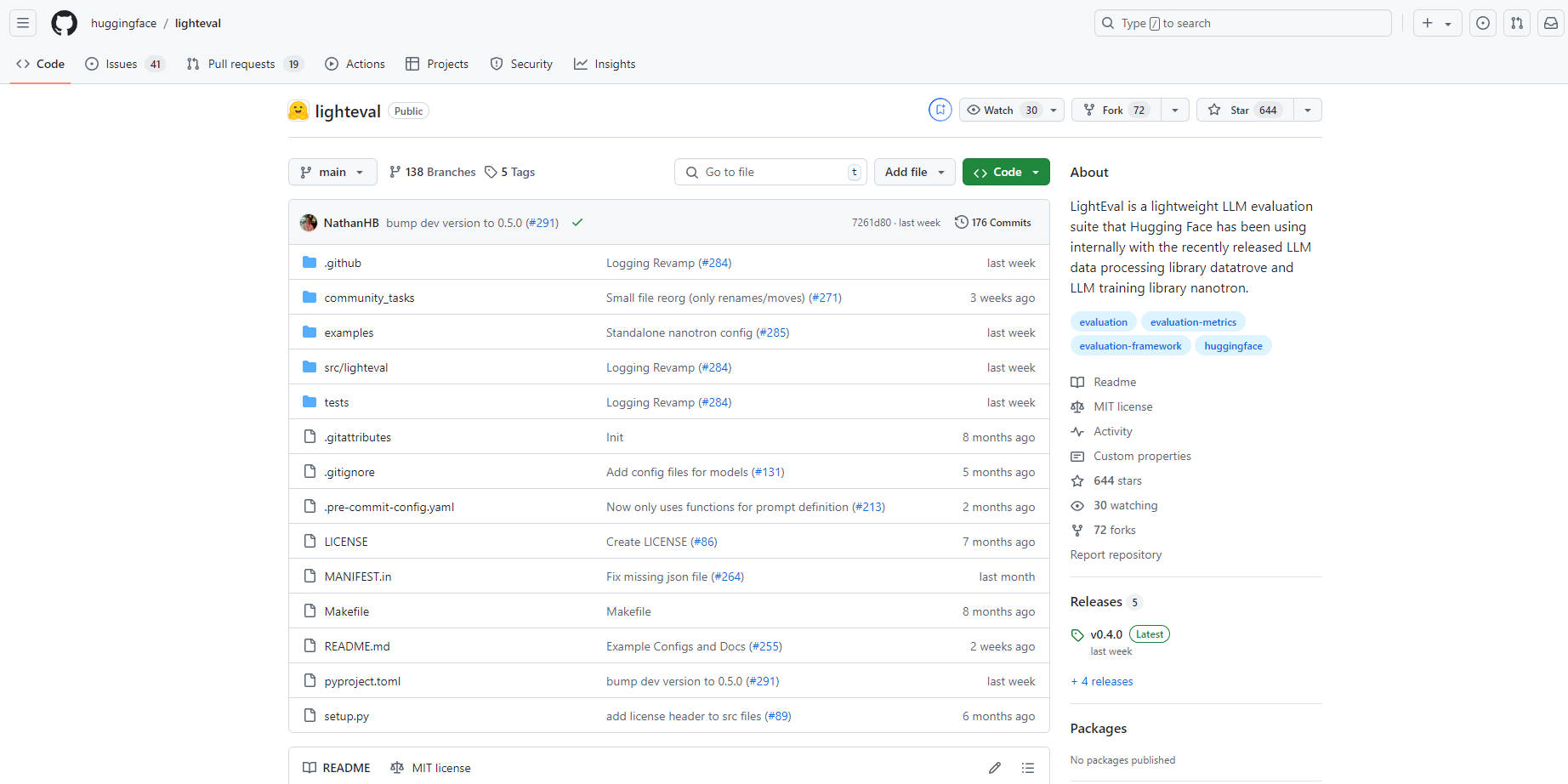

What is LightEval?

LightEval is a lightweight evaluation suite from Hugging Face for assessing large language models (LLMs). It handles many tasks and complex model configurations, and it runs on a range of hardware, including CPUs, GPUs, and TPUs. You can drive it from a command-line interface or programmatically, and customize both the tasks and the evaluation settings. Because it integrates with other Hugging Face tools, it simplifies managing and sharing models, which makes it a good fit for enterprises and researchers alike. LightEval is open source, available on GitHub, and complements Hugging Face's datatrove (data processing) and nanotron (LLM training) libraries for a complete LLM processing and training workflow.

Key Features:

Multi-Device Support: Evaluate models on CPUs, GPUs, and TPUs, so evaluations can adapt to different hardware environments and organizational requirements.

User-Friendly Interface: Even users with limited technical experience can use LightEval to assess models on standard benchmarks or to define custom tasks.

Customizable Evaluations: Tailor evaluations to specific needs, including model configuration options such as which weights to load and whether to use pipeline parallelism.

Hugging Face Ecosystem Integration: Seamlessly integrates with tools such as the Hugging Face Hub for easy model management and sharing.

Complex Configuration Support: Load models from configuration files to handle intricate setups, such as adapter or delta weights and other advanced configuration options.

Pipeline Parallel Evaluation: Evaluate models with more than 40B parameters in 16-bit precision by using pipeline parallelism, which splits the model into slices distributed across multiple GPUs.
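The 40B figure follows from simple arithmetic. In 16-bit precision (fp16 or bf16) each parameter occupies 2 bytes, so a 40B-parameter model needs about 80 GB for its weights alone, which leaves no room on a single 80 GB GPU for activations. The Python sketch below works through that arithmetic and illustrates the core idea of pipeline parallelism: assign contiguous blocks of layers to stages (one stage per GPU) and pass activations from one stage to the next. It is an illustration, not LightEval's implementation; the function names, the 80% memory headroom, the 60-layer model, and the toy layers are assumptions chosen for the example.

```python
import math
from typing import Callable

BYTES_PER_PARAM_16BIT = 2  # fp16 and bf16 store each weight in 2 bytes


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_16BIT) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, gpu_memory_gb: float, headroom: float = 0.8) -> int:
    """Fewest GPUs whose combined usable memory holds the weights.

    `headroom` (an assumed value) is the fraction of each GPU reserved for weights;
    the rest is left for activations, KV cache, and framework overhead.
    """
    usable_per_gpu = gpu_memory_gb * headroom
    return math.ceil(weight_memory_gb(num_params) / usable_per_gpu)


def partition_layers(num_layers: int, num_stages: int) -> list[range]:
    """Split layers into contiguous, nearly equal blocks, one block per pipeline stage."""
    base, extra = divmod(num_layers, num_stages)
    stages, start = [], 0
    for stage in range(num_stages):
        size = base + (1 if stage < extra else 0)  # the first `extra` stages get one extra layer
        stages.append(range(start, start + size))
        start += size
    return stages


def pipeline_forward(x: float, layers: list[Callable[[float], float]], stages: list[range]) -> float:
    """Run the input through each stage in order; each stage's output feeds the next.

    On real hardware every stage sits on its own GPU and activations are copied
    between devices; here the stages simply run one after another.
    """
    for layer_ids in stages:
        for i in layer_ids:
            x = layers[i](x)
    return x


if __name__ == "__main__":
    print(weight_memory_gb(40e9))          # 80.0 (GB of 16-bit weights)
    print(min_gpus_for_weights(40e9, 80))  # 2 (GPUs with 80 GB each)
    print(min_gpus_for_weights(40e9, 40))  # 3 (GPUs with 40 GB each)
    stages = partition_layers(num_layers=60, num_stages=3)
    print([(r.start, r.stop) for r in stages])  # [(0, 20), (20, 40), (40, 60)]
    toy_layers = [lambda v: v + 1 for _ in range(60)]  # toy stand-ins for transformer blocks
    print(pipeline_forward(0.0, toy_layers, stages))   # 60.0
```

Contiguous blocks are the natural choice here because a transformer's layers run in sequence, so each GPU only needs to send its output activations to the next GPU rather than exchanging weights.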

Use Cases:

Enterprise Model Testing: Companies can validate LLM performance on their target hardware before deployment.

Research and Development: Researchers can experiment with different configurations and benchmarks to refine language models for specific applications.

Personalized Benchmarking: Developers can create custom tasks and benchmarks to evaluate LLMs against their own requirements.
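At its core, a custom benchmark comes down to three pieces: a set of samples, a prompt template, and a metric averaged over the model's outputs. The framework-agnostic Python sketch below shows that structure. The `CustomTask` class, the `exact_match` metric, the capital-cities samples, and the lookup-table "model" are all hypothetical illustrations. This is not LightEval's task API, which defines tasks and metrics in its own way (see the LightEval repository).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Sample:
    question: str
    answer: str


@dataclass
class CustomTask:
    """Hypothetical custom task: a dataset, a prompt template, and a metric."""
    name: str
    samples: list[Sample]
    prompt_fn: Callable[[Sample], str]
    metric_fn: Callable[[str, str], float]

    def evaluate(self, generate: Callable[[str], str]) -> float:
        """Average the metric over all samples, using `generate` to get model outputs."""
        scores = [
            self.metric_fn(generate(self.prompt_fn(s)), s.answer)
            for s in self.samples
        ]
        return sum(scores) / len(scores)


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the whitespace-stripped, lowercased strings match, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())


task = CustomTask(
    name="capital_cities",
    samples=[Sample("France", "Paris"), Sample("Japan", "Tokyo")],
    prompt_fn=lambda s: f"Q: What is the capital of {s.question}?\nA:",
    metric_fn=exact_match,
)

# Stand-in "model": a lookup table instead of a real LLM (one answer is deliberately wrong).
fake_answers = {"France": "Paris", "Japan": "Kyoto"}


def fake_generate(prompt: str) -> str:
    for country, answer in fake_answers.items():
        if country in prompt:
            return answer
    return ""


print(task.evaluate(fake_generate))  # 0.5
```

You can swap `exact_match` for a fuzzier metric, or `fake_generate` for a call to a real model, without changing the evaluation loop. This separation of data, prompt, and metric is what makes custom benchmarks cheap to define.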

Conclusion:

LightEval stands out as a powerful, versatile, and user-friendly evaluation suite for large language models. Its support for multiple hardware devices, its integration with the Hugging Face ecosystem, and its customizable tasks make it practical for both enterprises and researchers to assess and refine LLMs across a wide range of applications. If you want an evaluation tool that balances performance with simplicity, LightEval is worth a try.