What is Megatron-LM?

Megatron-LM is a framework developed by NVIDIA for training large transformer language models at scale. It offers efficient model-parallel and multi-node pre-training for models such as GPT, BERT, and T5. With Megatron, enterprises can address the challenges of building and training natural language processing models with billions to trillions of parameters.

Key Features:

🤖 Efficient Training: Megatron enables the efficient training of language models with hundreds of billions of parameters using both model and data parallelism.

🌐 Model-Parallelism: It supports tensor, sequence, and pipeline model-parallelism, allowing for the scaling of models across multiple GPUs and nodes.

💡 Versatile Pre-Training: Megatron facilitates pre-training of various transformer-based models like GPT, BERT, and T5, enabling the development of large-scale generative language models.
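To make the tensor model-parallelism idea above more concrete, here is a minimal sketch in plain Python. It is not Megatron-LM's code or API. It simulates on a single process how a two-layer MLP can be split across workers: the first weight matrix is split by columns, the second by rows, each simulated worker computes a partial result, and the partial results are summed (the role an all-reduce would play across real GPUs). All function names and sizes here are assumptions made for illustration.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gelu(m):
    """Apply the tanh approximation of GeLU to every element."""
    c = math.sqrt(2.0 / math.pi)
    return [[0.5 * v * (1.0 + math.tanh(c * (v + 0.044715 * v ** 3))) for v in row]
            for row in m]


def split_columns(m, parts):
    """Split a matrix into `parts` equal blocks of columns."""
    width = len(m[0]) // parts
    return [[row[i * width:(i + 1) * width] for row in m] for i in range(parts)]


def split_rows(m, parts):
    """Split a matrix into `parts` equal blocks of rows."""
    height = len(m) // parts
    return [m[i * height:(i + 1) * height] for i in range(parts)]


def mlp_reference(x, w1, w2):
    """Unsharded MLP: gelu(x @ w1) @ w2."""
    return matmul(gelu(matmul(x, w1)), w2)


def mlp_tensor_parallel(x, w1, w2, world_size):
    """Same MLP, computed as `world_size` independent shards (hypothetical helper)."""
    w1_shards = split_columns(w1, world_size)  # each worker holds a column block of w1
    w2_shards = split_rows(w2, world_size)     # and the matching row block of w2
    # Each simulated worker needs only its own shards; GeLU is elementwise,
    # so it can be applied to each column block independently.
    partials = [matmul(gelu(matmul(x, a)), b) for a, b in zip(w1_shards, w2_shards)]
    # Summing the partial outputs stands in for an all-reduce across GPUs.
    out = partials[0]
    for p in partials[1:]:
        out = [[u + v for u, v in zip(r1, r2)] for r1, r2 in zip(out, p)]
    return out


def random_matrix(rows, cols, rng):
    """Build a rows x cols matrix of uniform random values in [-1, 1]."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def max_abs_diff(a, b):
    """Largest elementwise absolute difference between two matrices."""
    return max(abs(u - v) for r1, r2 in zip(a, b) for u, v in zip(r1, r2))
```

Calling `mlp_tensor_parallel(x, w1, w2, world_size=2)` on small random matrices should match `mlp_reference(x, w1, w2)` up to floating-point rounding, which is why this split needs only one summation step at the end of the MLP in this sketch.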

Use Cases:

📚 Language Modeling: Megatron is used for large-scale language model pre-training, enabling the creation of powerful models for tasks like text generation, translation, and summarization.

🗂️ Information Retrieval: It is employed in training neural retrievers for open-domain question answering, improving the accuracy and relevance of search results.

💬 Conversational Agents: Megatron powers conversational agents by enabling large-scale multi-actor generative dialog modeling, enhancing the quality and naturalness of automated conversations.

Conclusion:

Megatron-LM is a framework developed by NVIDIA for training large transformer models at scale. With its efficient training, support for tensor, sequence, and pipeline model-parallelism, and ability to pre-train models such as GPT, BERT, and T5, Megatron helps enterprises build and train large natural language processing models. Whether the goal is language modeling, information retrieval, or conversational agents, Megatron is a valuable asset for AI researchers and developers.

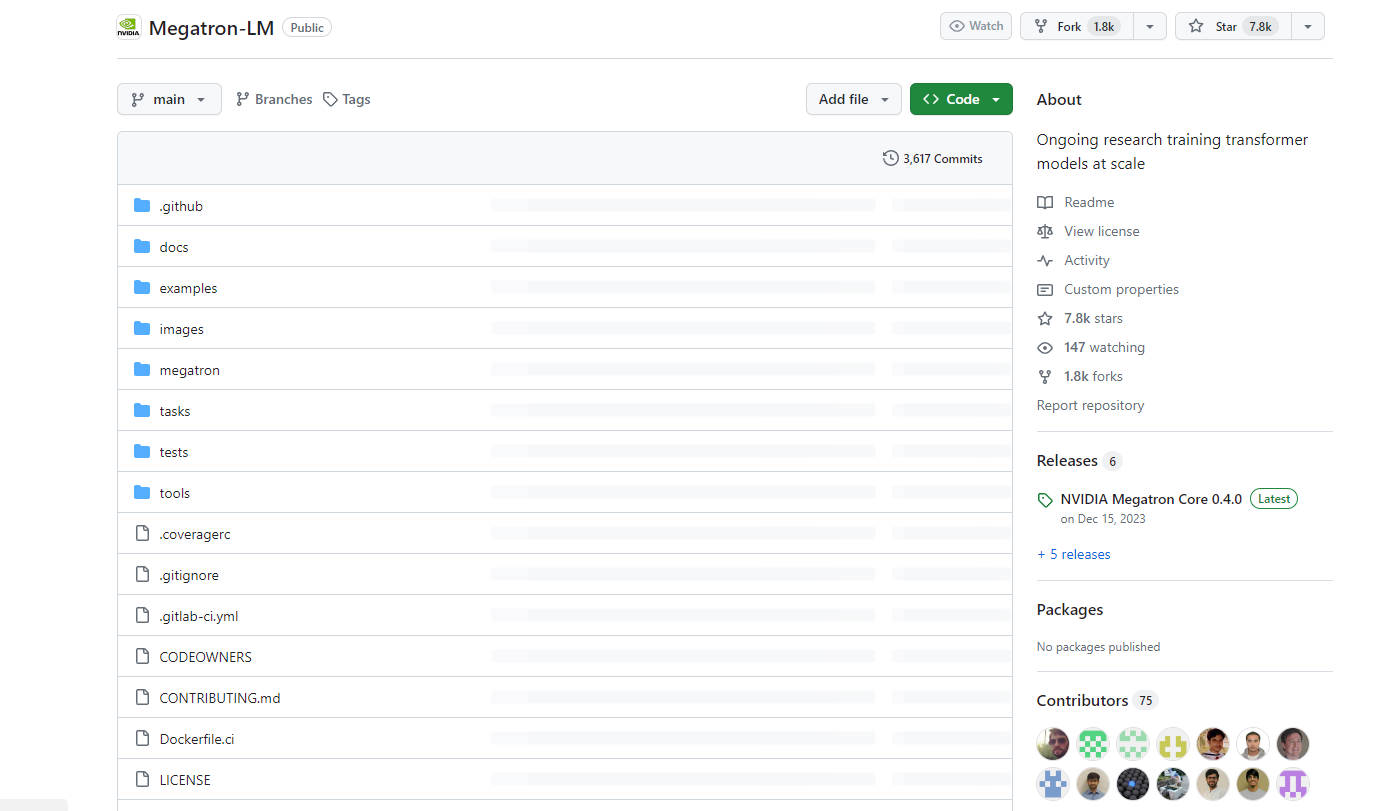


Megatron-LM Alternatives


KTransformers, an open-source project by Tsinghua's KVCache.AI team and QuJing Tech, optimizes large language model inference. It lowers hardware requirements, running 671B-parameter models on a single GPU with 24GB of VRAM, and boosts inference speed (up to 286 tokens/s for pre-processing and 14 tokens/s for generation). It is suitable for personal, enterprise, and academic use.


Transformer Lab: An open-source platform for building, tuning, and running LLMs locally without coding. Download hundreds of models, fine-tune across hardware, chat, evaluate, and more.


MonsterGPT: Fine-tune & deploy custom AI models via chat. Simplify complex LLM & AI tasks. Access 60+ open-source models easily.


Nemotron-4 340B, a family of models optimized for NVIDIA NeMo and NVIDIA TensorRT-LLM, includes cutting-edge instruct and reward models, and a dataset for generative AI training.