What is BERT?

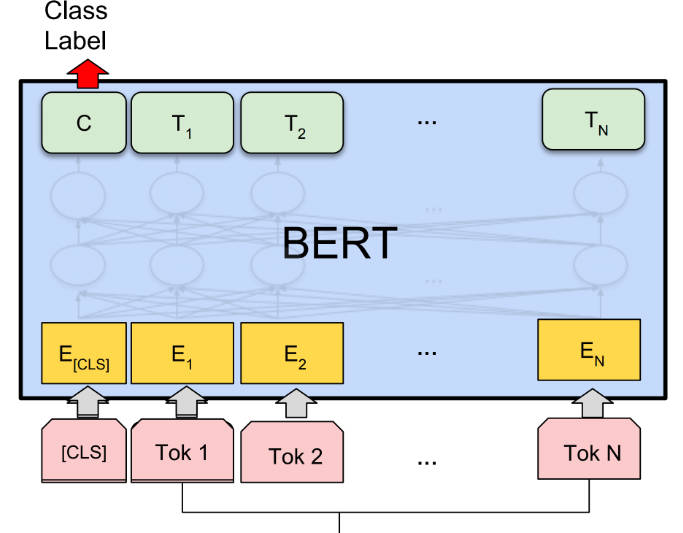

BERT (Bidirectional Encoder Representations from Transformers) is a method for pre-training language representations that achieved state-of-the-art results on a range of Natural Language Processing (NLP) tasks when it was released. It is pre-trained on unlabeled text, so no manual annotation is required, and it is deeply bidirectional: each word's representation draws on the words both before and after it. A pre-trained BERT model can then be fine-tuned for a specific NLP task.

Key Features:

🎯 Pre-training Language Representations: BERT pre-trains a general-purpose "language understanding" model on a large text corpus, enabling it to capture contextual relationships between words.

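🎯 Bidirectional and Deeply Contextual: Unlike earlier models that read text in one direction only, BERT considers both left and right context when representing a word, so the same word (for example, "bank" in "river bank" and "bank account") receives a different representation depending on the sentence around it.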

🎯 Fine-tuning for Specific Tasks: BERT can be fine-tuned for specific NLP tasks, such as question answering, sentiment analysis, and named entity recognition, with minimal task-specific modifications.
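
To make the pre-training idea above more concrete, here is a minimal sketch of the masked-language-model input corruption commonly described for BERT: some tokens are selected, most of the selected ones are replaced with `[MASK]`, a few with a random token, and a few are left unchanged, and the model is trained to recover the originals from context on both sides. The function name `mask_tokens`, the whitespace tokenization, and the per-token coin flip are assumptions made for illustration; this is not the original implementation.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, labels) for a masked-LM style objective.

    labels[i] holds the original token at positions the model must predict
    and None everywhere else. Hypothetical helper, for illustration only.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Assumption: independent coin flip per token; this only
        # approximates selecting a fixed share of positions.
        if rng.random() >= mask_prob:
            continue
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


tokens = "the bank raised interest rates".split()
# A high mask_prob is used here only so the tiny example shows some masking.
masked, labels = mask_tokens(tokens, vocab=tokens, mask_prob=0.5, seed=1)
print(masked)
print(labels)
```

Because the prediction target can sit anywhere in the sentence, the model has to use context from both directions, which is what the "bidirectional" feature refers to.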

Use Cases:

📚 Question Answering: A fine-tuned BERT model can locate the answer to a question within a given passage, making it valuable for applications like chatbots and virtual assistants.

📝 Sentiment Analysis: BERT can analyze the sentiment of a given text, helping businesses understand customer feedback and sentiment trends.

🌐 Named Entity Recognition: BERT can identify and classify named entities in text, aiding in tasks like information extraction and data mining.
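
As a rough illustration of the question-answering use case above, the sketch below packs a question and a passage into one BERT-style input (`[CLS] question [SEP] passage [SEP]` with segment ids 0 and 1) and then picks the answer span from per-token start and end scores. The helper names `pack_pair` and `best_span`, the whitespace tokenization, and the hand-written scores are all assumptions for illustration; in a real system the scores would come from a fine-tuned model and the tokens from a WordPiece tokenizer.

```python
def pack_pair(question_tokens, context_tokens):
    """Build a BERT-style paired input and its segment ids."""
    tokens = ["[CLS]"] + question_tokens + ["[SEP]"] + context_tokens + ["[SEP]"]
    # Segment 0 covers [CLS], the question, and the first [SEP];
    # segment 1 covers the passage and the final [SEP].
    segment_ids = [0] * (len(question_tokens) + 2) + [1] * (len(context_tokens) + 1)
    return tokens, segment_ids


def best_span(start_scores, end_scores, max_len=10):
    """Return (start, end) maximizing start score + end score with start <= end."""
    best, best_score = (0, 0), float("-inf")
    for s in range(len(start_scores)):
        for e in range(s, min(s + max_len, len(end_scores))):
            score = start_scores[s] + end_scores[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best


question = "who developed bert".split()
context = "google developed bert in 2018".split()
tokens, segment_ids = pack_pair(question, context)

# Hypothetical scores standing in for a fine-tuned model's output:
# position 5 ("google") is scored as both the start and the end of the answer.
start_scores = [0.0] * len(tokens)
end_scores = [0.0] * len(tokens)
start_scores[5] = 5.0
end_scores[5] = 4.0

start, end = best_span(start_scores, end_scores)
answer = tokens[start:end + 1]
print(tokens)
print(segment_ids)
print(answer)
```

Sentiment analysis and named entity recognition reuse the same packed input; they differ mainly in the small output layer placed on top (one label per sequence versus one label per token).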

Conclusion:

BERT provides pre-trained language representations that can be fine-tuned for specific applications. Its bidirectional, contextual understanding of text achieved state-of-the-art results on various NLP tasks, and that versatility and accuracy make it a valuable asset for researchers, developers, and businesses working with NLP technology.

BERT Alternatives

Baichuan-7B is a bilingual language model for language processing and text generation, with versatile applications and strong reported performance.

Jina ColBERT v2 is a retrieval model that supports 89 languages, user-controlled output dimensions, and an 8192-token input length.