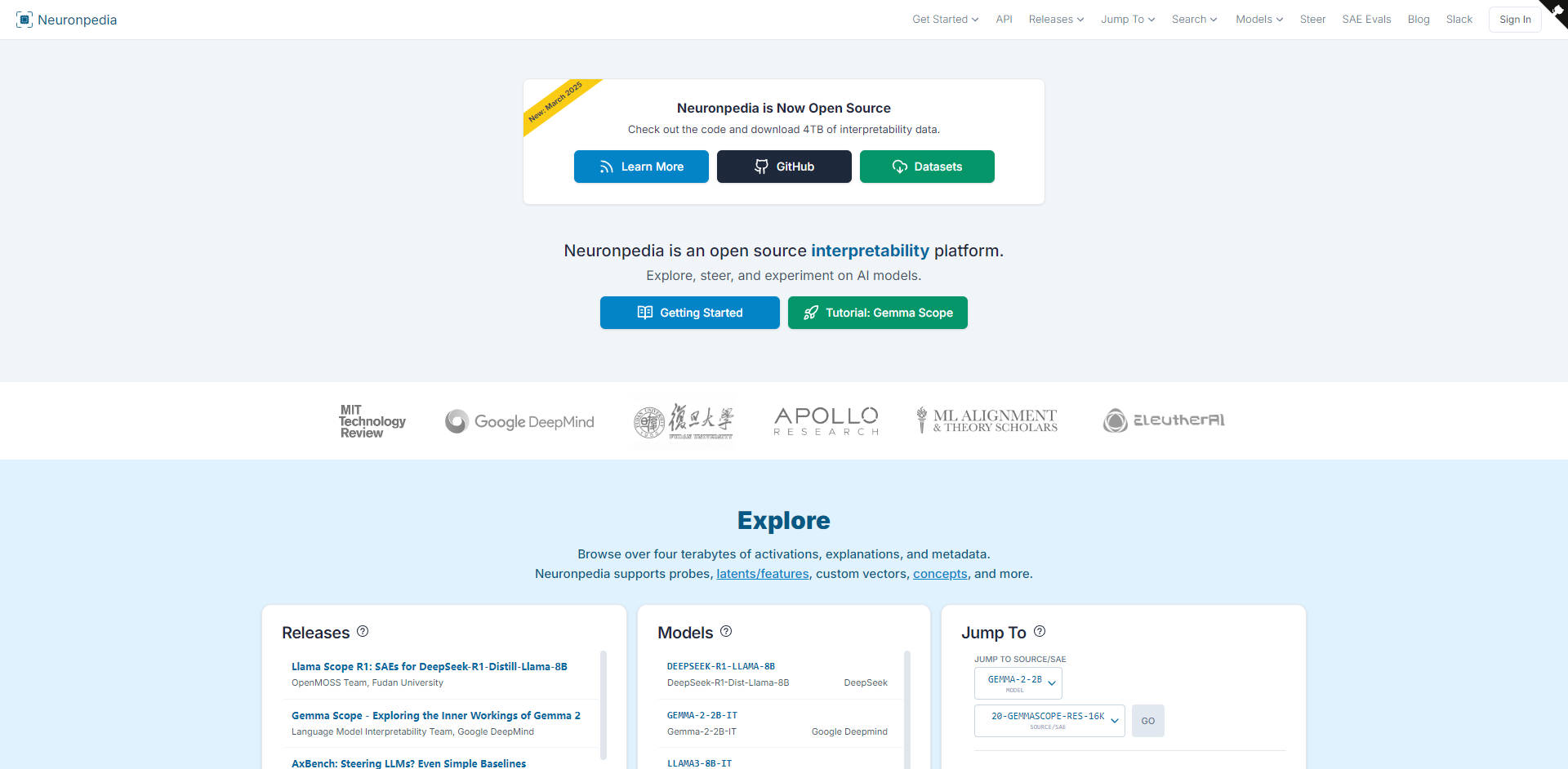

What is Neuronpedia?

Understanding what happens inside complex AI models is one of the most significant challenges in the field today. As models grow larger and more capable, peering into the "black box" becomes crucial for safety, alignment, and scientific progress. Neuronpedia is an open-source platform built specifically to accelerate mechanistic interpretability research, offering the data, tools, and collaborative environment that research needs. We handle the infrastructure (visualizations, tooling, scaling, and hosting) so you can focus purely on the research.

Key Features

🔍 Explore Vast Datasets: Access and analyze over four terabytes of pre-computed data, including neuron activations, feature explanations (for example, explanations of features learned by sparse autoencoders, or SAEs), and associated metadata across many models. The platform supports diverse interpretability methods, including probes, latents/features, concepts, and custom vectors.

🧭 Steer Model Behavior: Experiment directly with model internals by modifying activations during inference. Use identified latents/features or custom vectors to influence the outputs of instruct (chat) and reasoning models. Adjust parameters such as temperature, steering strength, and random seed for controlled experiments.
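At its simplest, steering with a custom vector means adding a scaled direction to a model's hidden state at one layer on each forward pass. The plain-Python sketch below shows only that arithmetic, assuming residual-stream activations are available as lists of floats. `steer` and `steer_sequence` are hypothetical helpers, not Neuronpedia's implementation, and normalizing the vector to unit length is a design assumption. Sampling settings such as temperature and seed act later, when tokens are drawn, and are not modeled here.

```python
# Hypothetical sketch: additive activation steering on residual-stream vectors.
# Not Neuronpedia's implementation; names and shapes are illustrative assumptions.
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale v to unit L2 norm so that `strength` has a consistent meaning."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("steering vector must be non-zero")
    return [x / norm for x in v]


def steer(hidden: Vector, direction: Vector, strength: float) -> Vector:
    """Return hidden + strength * unit(direction).

    Positive strength amplifies the direction; negative strength pushes away from it.
    """
    if len(hidden) != len(direction):
        raise ValueError("hidden state and direction must have the same dimension")
    unit = normalize(direction)
    return [h + strength * u for h, u in zip(hidden, unit)]


def steer_sequence(hiddens: List[Vector], direction: Vector, strength: float) -> List[Vector]:
    """Apply the same steering vector at every token position."""
    return [steer(h, direction, strength) for h in hiddens]


if __name__ == "__main__":
    h = [0.5, -1.0, 2.0]
    d = [3.0, 0.0, 4.0]  # unit direction is [0.6, 0.0, 0.8]
    print(steer(h, d, strength=5.0))  # [3.5, -1.0, 6.0]
```

With a unit-length direction, `strength` reads directly as a distance moved in activation space, which makes different vectors easier to compare; with an unnormalized vector, the effective strength would also depend on the vector's norm.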

🔎 Advanced Search Capabilities: Search efficiently across more than 50 million latents, features, and vectors. Search semantically with natural-language descriptions, or run your own text prompts through a model to pinpoint the internal components that activate most strongly on them.
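Searching by running a prompt through a model comes down to ranking components by how strongly they fire on that prompt. A minimal sketch, assuming a precomputed token × latent activation matrix for one layer (for example, SAE latent activations). `top_latents` is a hypothetical helper, and ranking by peak activation over tokens is one reasonable choice, not necessarily the one Neuronpedia uses.

```python
# Hypothetical sketch of "search via inference": rank latents by their peak
# activation over the tokens of a prompt. Activations are assumed precomputed.
import heapq
from typing import Dict, List, Tuple


def top_latents(
    activations: List[List[float]],  # activations[token_position][latent_index]
    k: int = 3,
) -> List[Tuple[int, float, int]]:
    """Return (latent_index, peak_activation, token_position) for the k strongest latents."""
    best: Dict[int, Tuple[float, int]] = {}
    for pos, row in enumerate(activations):
        for latent, value in enumerate(row):
            # Keep the highest activation seen so far for each latent, and where it occurred.
            if latent not in best or value > best[latent][0]:
                best[latent] = (value, pos)
    ranked = heapq.nlargest(k, best.items(), key=lambda item: item[1][0])
    return [(latent, value, pos) for latent, (value, pos) in ranked]


if __name__ == "__main__":
    acts = [
        [0.0, 1.2, 0.3],  # token 0
        [2.5, 0.1, 0.0],  # token 1
        [0.4, 0.9, 3.1],  # token 2
    ]
    print(top_latents(acts, k=2))  # [(2, 3.1, 2), (0, 2.5, 1)]
```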

🔬 Inspect Neural Components: Dive deep into individual probes, latents, or features. Examine top activating dataset examples, analyze effects on output logits, visualize activation density, and perform live inference testing directly within the interface. Create shareable lists or embed dashboards for collaboration.
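Two of the statistics shown when inspecting a latent, its activation density and its top activating examples, can be approximated from per-token activations over a dataset. The sketch assumes density means the fraction of tokens on which the latent's activation exceeds a threshold (zero by default). Neuronpedia's dashboards may define or display it differently (for example, as a histogram on a log scale), and both helpers are hypothetical.

```python
# Hypothetical sketch of two dashboard statistics for a single latent.
# ASSUMPTION: density = fraction of tokens with activation > threshold; Neuronpedia's
# own dashboards may define or bin this differently.
from typing import List, Tuple


def activation_density(acts_per_doc: List[List[float]], threshold: float = 0.0) -> float:
    """Fraction of all tokens (across all documents) where the latent exceeds `threshold`."""
    total = sum(len(doc) for doc in acts_per_doc)
    if total == 0:
        return 0.0
    firing = sum(1 for doc in acts_per_doc for a in doc if a > threshold)
    return firing / total


def top_examples(acts_per_doc: List[List[float]], k: int = 5) -> List[Tuple[int, float]]:
    """Return (document_index, peak_activation) for the k documents where the latent peaks highest."""
    peaks = [(i, max(doc)) for i, doc in enumerate(acts_per_doc) if doc]
    peaks.sort(key=lambda p: p[1], reverse=True)
    return peaks[:k]


if __name__ == "__main__":
    docs = [[0.0, 0.0, 1.5], [0.0, 0.0, 0.0, 0.0], [3.0, 0.2, 0.0]]
    print(activation_density(docs))  # 0.3
    print(top_examples(docs, k=2))  # [(2, 3.0), (0, 1.5)]
```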

💻 Comprehensive API & Libraries: Integrate Neuronpedia's capabilities directly into your research workflows. Access all platform functionality, including data exploration, steering, and search, programmatically through a well-documented API (with an OpenAPI specification) and convenient Python/TypeScript libraries.
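The official Python/TypeScript libraries are the intended route for programmatic access; their function names are not covered here. As a dependency-free alternative, the sketch below builds and fetches a single feature over HTTP using only the standard library. The base URL and the `/api/feature/{model_id}/{source}/{index}` path are assumptions to verify against the OpenAPI specification, the identifiers in the example are illustrative, and any authentication the API requires is not handled.

```python
# Hedged sketch of fetching one feature/latent over HTTP with only the standard library.
# ASSUMPTIONS: the base URL and the path pattern /api/feature/{model_id}/{source}/{index};
# check both against Neuronpedia's OpenAPI spec. Authentication is not handled here.
import json
import urllib.parse
import urllib.request

BASE_URL = "https://www.neuronpedia.org"  # assumed base URL


def feature_url(model_id: str, source: str, index: int) -> str:
    """Build the (assumed) URL for one feature, percent-encoding each path segment."""
    segments = [urllib.parse.quote(s, safe="") for s in (model_id, source, str(index))]
    return f"{BASE_URL}/api/feature/" + "/".join(segments)


def fetch_feature(model_id: str, source: str, index: int, timeout: float = 30.0) -> dict:
    """GET the feature and decode the JSON body (requires network access)."""
    request = urllib.request.Request(
        feature_url(model_id, source, index),
        headers={"Accept": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    # Illustrative identifiers only; no request is sent here.
    print(feature_url("gpt2-small", "9-res-jb", 1234))
```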

🌐 Open Source Foundation: Build upon a transparent and community-driven platform. The core Neuronpedia codebase and extensive datasets are available on GitHub, encouraging contribution, verification, and extension by the research community.

Use Cases

Mapping Concepts within Models: Imagine you're researching how a model such as Llama 3.1 represents concepts like "optimism" or "Python code." You could use Neuronpedia's Search feature with semantic descriptions or relevant text prompts to identify potentially related features/latents. Then, use the Inspect tool to analyze their top activations and downstream effects, validating whether they consistently encode the target concept.

Validating Causal Interventions: After identifying a feature that seems to represent a specific safety concern (e.g., generating harmful content), you can use the Steer functionality. By actively suppressing or amplifying this feature's activation during inference on relevant prompts, you can test your hypothesis about its causal role in the model's behavior and potentially develop methods for mitigating related risks.
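One common way to suppress or amplify an SAE feature is clamping: set the feature's activation to a chosen value and shift the hidden state along that feature's decoder direction by the difference. This is equivalent to decoding the edited feature vector and adding back the SAE's reconstruction error. The sketch assumes a ReLU SAE with toy 2-dimensional weights; the function names are hypothetical, and SAEs with other activation functions (such as TopK or JumpReLU) would need a different encoder.

```python
# Hypothetical sketch: clamp one SAE feature by editing the hidden state along the
# feature's decoder direction. Toy 2-d weights; real SAEs have thousands of features.
# Equivalent to decoding the edited feature vector and adding back the SAE error term.
from typing import List

Vector = List[float]


def encode_feature(h: Vector, w_enc_row: Vector, b_enc_i: float, b_dec: Vector) -> float:
    """ReLU SAE activation of one feature: relu(w_enc_row . (h - b_dec) + b_enc_i)."""
    pre = sum(w * (x - b) for w, x, b in zip(w_enc_row, h, b_dec)) + b_enc_i
    return max(0.0, pre)


def clamp_feature(
    h: Vector,
    w_enc_row: Vector,
    b_enc_i: float,
    b_dec: Vector,
    w_dec_row: Vector,
    target: float,
) -> Vector:
    """Shift h so the feature's decoded contribution becomes target * w_dec_row.

    target = 0.0 ablates (suppresses) the feature; a larger target amplifies it.
    """
    current = encode_feature(h, w_enc_row, b_enc_i, b_dec)
    delta = target - current
    return [x + delta * d for x, d in zip(h, w_dec_row)]


if __name__ == "__main__":
    h = [1.0, 2.0]
    sae = ([1.0, 0.0], 0.0, [0.0, 0.0], [1.0, 0.0])  # w_enc_row, b_enc_i, b_dec, w_dec_row
    print(clamp_feature(h, *sae, target=0.0))  # suppress: [0.0, 2.0]
    print(clamp_feature(h, *sae, target=3.0))  # amplify: [3.0, 2.0]
```

Because encoder and decoder directions generally differ, re-encoding the edited state need not return exactly `target`; the edit fixes the feature's decoded contribution, not the encoder's reading of it.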

Comparative Analysis Across Architectures: Studying how different models (e.g., Gemma 2 vs. GPT-2 Small) represent similar information? Use the Explore and Inspect tools to browse and compare activations or learned features (such as SAE features) at comparable layers or for the same concepts in both models, shedding light on architectural differences and representation strategies.
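Because Gemma 2 and GPT-2 Small use different tokenizers, their token positions do not line up. One simple workaround, offered here as an assumption rather than a Neuronpedia feature, is to run both models on the same texts, take each feature's peak activation per document, and correlate the two lists. Both helpers below are hypothetical.

```python
# Hypothetical sketch: compare a feature from model A with a feature from model B by
# correlating their peak activation per document on the same set of texts.
# Per-document peaks sidestep the fact that the two models tokenize text differently.
import math
from typing import List


def per_doc_peaks(acts_per_doc: List[List[float]]) -> List[float]:
    """Peak activation of one feature in each document (0.0 for empty documents)."""
    return [max(doc) if doc else 0.0 for doc in acts_per_doc]


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient; NaN if either sequence is constant."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0.0 or vy == 0.0:
        return math.nan
    return cov / math.sqrt(vx * vy)


if __name__ == "__main__":
    model_a = per_doc_peaks([[0.0, 0.0], [1.0, 2.0], [4.0, 0.5]])  # [0.0, 2.0, 4.0]
    model_b = per_doc_peaks([[1.0], [2.0, 0.0], [3.0]])  # [1.0, 2.0, 3.0]
    print(pearson(model_a, model_b))  # 1.0
```

A high correlation suggests, but does not prove, that two features track the same concept; reviewing their top activating examples is still needed.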

Conclusion

Neuronpedia serves as a foundational resource for the AI interpretability community. By providing large-scale datasets, powerful interactive tools, and programmatic access within an open-source framework, it aims to significantly lower the barrier to entry and accelerate progress in understanding neural networks. Whether you're exploring existing models, developing new interpretability techniques, or experimenting with model control, Neuronpedia offers the infrastructure to support your work.

Neuronpedia Alternatives

NetMind: Your unified AI platform. Build, deploy & scale with diverse models, powerful GPUs & cost-efficient tools.

Neuralhub: Simplify AI development. Create, experiment, and innovate with neural networks. Collaborate, learn, and access resources in one platform.

Tersa: An open-source canvas for building AI workflows. Drag, drop, connect, and run nodes to build your own workflows powered by various industry-leading AI models.

Nextnet: AI-powered research for life science. Nextnet accelerates discovery with evidence-backed answers & connected data. Explore deeper insights!

Mnemosphere: Elevate your AI productivity. Access frontier models, multi-model critiques, mindmaps & deep research tools for elite performance.