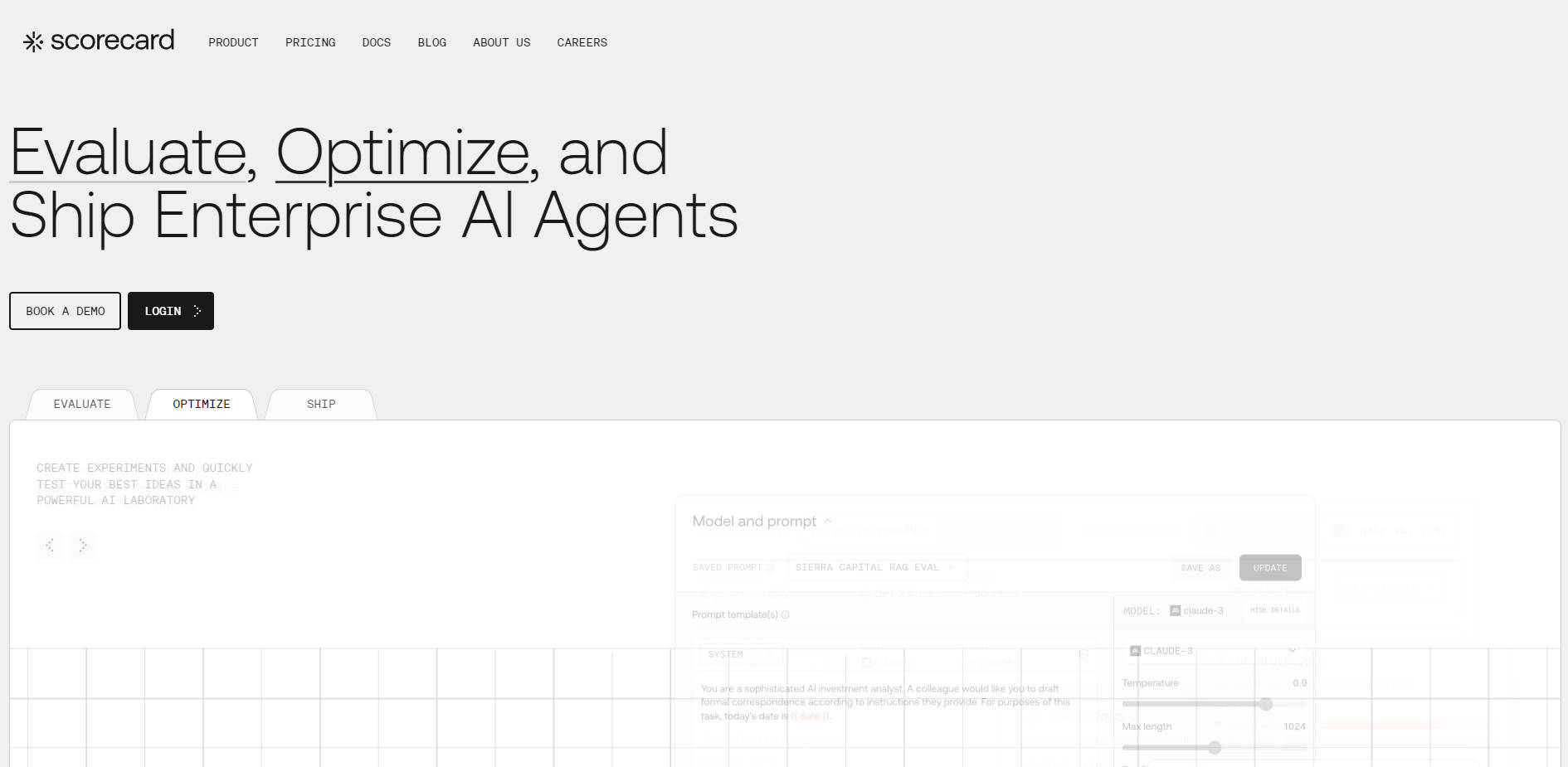

What is Scorecard?

Scorecard is an AI evaluation platform that helps teams build and ship dependable AI products. It addresses the challenge of AI reliability by providing systematic infrastructure to test complex agents, validate performance, and catch costly regressions before they reach users. The platform brings clarity and structure to AI performance, so AI engineers, product managers, and QA teams can collaborate and deliver predictable AI experiences.

Key Features

Scorecard provides the comprehensive tools necessary to standardize AI quality assurance, moving your team beyond manual "vibe checks" toward data-driven deployment confidence.

💡 Continuous Evaluation & Live Observability

Integrate evaluation directly into your development cycle so you can monitor how models behave as you build. Live observability shows how users interact with the agent in real time, helping you spot issues, track failures, and find opportunities to improve performance within a fast feedback loop.

📊 Trustworthy Metric Design and Validation

Move beyond simple output checks by using Scorecard’s validated metric library, drawing on industry benchmarks, or customizing proven metrics. You can stress-test and validate custom metrics before trusting them, using human scoring as the ground truth to check their accuracy and confirm you are tracking what matters for your business outcomes.
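To make the idea of validating a metric against human scoring concrete, here is a minimal sketch that measures how closely an automated metric agrees with human labels using Cohen's kappa. It is plain Python and does not use the Scorecard SDK. The function name, the labels, and the sample data are assumptions made for illustration.

```python
from collections import Counter

def cohens_kappa(metric_labels, human_labels):
    """Chance-corrected agreement between two equal-length label lists."""
    if len(metric_labels) != len(human_labels) or not metric_labels:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(metric_labels)
    # Fraction of items where the metric and the human reviewer agree.
    observed = sum(m == h for m, h in zip(metric_labels, human_labels)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    metric_counts = Counter(metric_labels)
    human_counts = Counter(human_labels)
    expected = sum(metric_counts[c] * human_counts[c] for c in metric_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)

# Illustrative data only: human labels are treated as the ground truth.
human = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass"]
metric = ["pass", "pass", "fail", "fail", "fail", "fail", "pass", "pass"]

kappa = cohens_kappa(metric, human)
print(f"kappa = {kappa:.2f}")
```

A kappa near 1 suggests the metric tracks human judgment closely, while a value near 0 suggests agreement no better than chance. The cutoff for trusting a metric is a team decision, not something this sketch prescribes.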

⚙️ Unified Prompt Management and Versioning

Establish a single source of truth for all production prompts by storing, tracking, and managing them in one centralized location. Use built-in version control to effortlessly compare prompt changes over time, track the best-performing iterations, and maintain a clear history of what works for confident prompt deployment to production.

🔄 Convert Production Failures to Test Cases

Don't let real-world issues slip away. Scorecard enables you to capture actual production failures and instantly convert them into reusable, structured test cases. This allows you to rapidly generate training examples for regression testing and fine-tuning, ensuring that critical issues are addressed and prevented from resurfacing in future deployments.
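As a rough illustration of what turning a failure into a test case can look like, the sketch below maps a logged failure onto a structured, reusable record. The `ProductionFailure` and `RegressionCase` types and all of their fields are hypothetical. They are not Scorecard's data model, which may differ.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ProductionFailure:
    """Hypothetical shape of a logged failure."""
    trace_id: str
    user_input: str
    model_output: str
    failure_reason: str

@dataclass
class RegressionCase:
    """Hypothetical structured test case derived from a failure."""
    name: str
    input: str
    rejected_output: str
    expected_behavior: str
    tags: list = field(default_factory=list)

def to_regression_case(failure, expected_behavior):
    # Keep the original input and the bad output so the case can be
    # reused for regression testing or as a fine-tuning example.
    return RegressionCase(
        name=f"regression-{failure.trace_id}",
        input=failure.user_input,
        rejected_output=failure.model_output,
        expected_behavior=expected_behavior,
        tags=["regression", failure.failure_reason],
    )

failure = ProductionFailure(
    trace_id="trace-123",
    user_input="Can I return an opened item?",
    model_output="Yes, all items can be returned at any time.",
    failure_reason="policy-hallucination",
)
case = to_regression_case(failure, "Cites the actual return policy or defers to support.")
print(json.dumps(asdict(case), indent=2))
```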

🧠 Comprehensive Agentic System Testing

Scorecard supports the full spectrum of modern AI agents, including multi-turn conversations, tool-calling agents, RAG pipelines, and complex multi-step workflows. You can test complete agent configurations (including prompts, tools, and settings) using automated user personas in multi-turn simulations to check robustness across realistic user flows.
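The general shape of a multi-turn simulation can be sketched in a few lines. In this simplified example the persona is a fixed script of user messages and the agent is a stand-in function. A real setup would more likely drive both with models. None of these names come from the Scorecard SDK.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

def simulate_conversation(
    agent: Callable[[List[Message]], str],
    persona_turns: List[str],
    max_turns: int = 5,
) -> List[Message]:
    """Play scripted persona messages against an agent and return the transcript."""
    history: List[Message] = []
    for user_text in persona_turns[:max_turns]:
        history.append({"role": "user", "content": user_text})
        reply = agent(history)  # the agent sees the full conversation so far
        history.append({"role": "assistant", "content": reply})
    return history

def echo_agent(history: List[Message]) -> str:
    """Stand-in agent used only to make the sketch runnable."""
    turn = sum(1 for m in history if m["role"] == "user")
    return f"(turn {turn}) You said: {history[-1]['content']}"

# Hypothetical persona: an impatient customer escalating over three turns.
impatient_customer = [
    "Where is my order?",
    "That doesn't help. I want a refund.",
    "Fine, cancel it.",
]

transcript = simulate_conversation(echo_agent, impatient_customer)
for message in transcript:
    print(f"{message['role']}: {message['content']}")
```

The returned transcript is what an evaluation metric would then score, either turn by turn or as a whole conversation.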

Use Cases

Scorecard integrates seamlessly into your workflow to solve common reliability and quality challenges across the AI product lifecycle.

1. Validating Mission-Critical Launches

Before launching a new feature or model update, run structured A/B comparisons between the current and proposed systems. Use the human labeling feature to bring in subject matter experts and product managers for ground truth validation, confirming that the new AI behavior aligns with user expectations and compliance requirements.

2. Automating Regression Prevention

Integrate Scorecard evaluations directly into your CI/CD pipelines. This automated workflow triggers alerts when performance drops below defined thresholds, catching regressions early. By systematically running comprehensive test suites, including those generated from past production failures, you can deploy new code and models with greater confidence.
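A threshold gate of this kind can be sketched as a small script that a CI job runs after an evaluation finishes. The metric names, scores, and thresholds below are invented for illustration, and how scores are fetched from Scorecard is deliberately left out.

```python
def check_thresholds(scores, thresholds):
    """Return one message per metric that is missing or below its minimum."""
    failures = []
    for metric, minimum in thresholds.items():
        value = scores.get(metric)
        if value is None:
            failures.append(f"{metric}: no score reported")
        elif value < minimum:
            failures.append(f"{metric}: {value:.2f} is below the minimum {minimum:.2f}")
    return failures

def gate(scores, thresholds):
    """Print any regressions and return a process exit code (0 = pass)."""
    failures = check_thresholds(scores, thresholds)
    for message in failures:
        print(f"REGRESSION {message}")
    return 1 if failures else 0

# Hypothetical results from an evaluation run and team-defined minimums.
example_scores = {"correctness": 0.91, "helpfulness": 0.78}
example_thresholds = {"correctness": 0.85, "helpfulness": 0.80}

exit_code = gate(example_scores, example_thresholds)
print(f"exit code: {exit_code}")
# In a CI job, pass exit_code to sys.exit() so a non-zero value fails the build.
```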

3. Optimizing Complex Agent Workflows

For agents handling sophisticated, multi-step tasks (like complex reasoning or tool-calling), use the Scorecard Playground to rapidly prototype and compare different models and prompt chains side-by-side using actual requests. Capture detailed latency metrics (end-to-end, model inference, network) to identify performance bottlenecks and optimize the agent's efficiency before deployment.
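To show how a latency breakdown can point to a bottleneck, the sketch below splits average end-to-end latency into model inference, network, and everything else. The field names and the sample timings are assumptions, not the format Scorecard reports.

```python
from statistics import mean

def latency_shares(requests):
    """Return each component's share of mean end-to-end latency."""
    end_to_end = mean(r["end_to_end_ms"] for r in requests)
    model = mean(r["model_inference_ms"] for r in requests)
    network = mean(r["network_ms"] for r in requests)
    # Whatever is not model or network time (tool calls, queuing, parsing).
    other = end_to_end - model - network
    parts = {"model_inference": model, "network": network, "other": other}
    return {name: value / end_to_end for name, value in parts.items()}

# Illustrative timings in milliseconds for two requests.
requests = [
    {"end_to_end_ms": 1200, "model_inference_ms": 900, "network_ms": 200},
    {"end_to_end_ms": 800, "model_inference_ms": 500, "network_ms": 200},
]

shares = latency_shares(requests)
bottleneck = max(shares, key=shares.get)
for name, share in shares.items():
    print(f"{name}: {share:.0%}")
print(f"largest contributor: {bottleneck}")
```

Averages hide tail behavior, so a fuller analysis would also look at percentiles such as p95 per component.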

Unique Advantages

Scorecard is engineered to provide the systematic infrastructure and cross-functional visibility essential for engineering reliable AI at scale.

Systematic AI Evaluation Infrastructure: Scorecard provides the infrastructure to run AI evals systematically, replacing manual checks with standardized processes. This allows AI engineers to focus on development while the platform validates improvements and flags regressions automatically.

Human-Centric and Cross-Functional Design: Scorecard is designed to unite product managers, subject matter experts, and developers. Non-technical stakeholders can easily contribute domain expertise to collaboratively define quality metrics and validate outcomes, ensuring the AI product meets both technical requirements and user expectations.

Best-in-Class Developer Experience: Integration is designed for speed and ease. With comprehensive SDKs for Python and JavaScript/TypeScript, along with a robust REST API, you can integrate Scorecard into your production deployments in minutes, establishing a fast feedback loop immediately.

Conclusion

Scorecard gives your team the structure, clarity, and confidence needed to build and ship truly reliable AI products. By converting real-world performance into actionable data and integrating evaluation throughout the development cycle, you can ensure predictable AI experiences that continuously improve.