Best AutoArena Alternatives in 2025

-

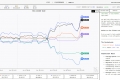

Compare and evaluate different language models with Chatbot Arena. Engage in conversations, vote, and contribute to improving AI chatbots.

-

Design Arena: The definitive, community-driven benchmark for AI design. Objectively rank models & evaluate their true design quality and taste.

-

Companies of all sizes use Confident AI to justify why their LLM deserves to be in production.

-

Alpha Arena: The real-world benchmark for AI investment. Test AI models with actual capital in live financial markets to prove performance & manage risk.

-

Windows Agent Arena (WAA) is an open-source testing ground for AI agents in Windows. Empowers agents with diverse tasks, reduces evaluation time. Ideal for AI researchers and developers.

-

Free, unbiased testing for OCR & VLM models. Evaluate document parsing AI with your own files, get real-world performance insights & rankings.

-

AutoAgent: Zero-code AI agent builder. Create powerful LLM agents with natural language. Top performance, flexible, easy to use.

-

Explore LLM agent behavior in interactive language games. ChatArena helps researchers develop, evaluate, and benchmark agents with ease.

-

JudgeAI is a system for the complete automation of judicial proceedings, from filing a claim to delivering a final decision on the case.

-

Get a rapid, fair, and free resolution for your disputes with AI Judge. Present your case, let AI analyze the facts, and get fair judgment results.

-

Your premier destination for comparing AI models worldwide. Discover, evaluate, and benchmark the latest advancements in artificial intelligence across diverse applications.

-

Intuitive and powerful one-stop evaluation platform to help you iteratively optimize generative AI products. Simplify the evaluation process, overcome instability, and gain a competitive advantage.

-

Athina AI is an essential tool for developers looking to create robust, error-free LLM applications. With its advanced monitoring and error detection capabilities, Athina streamlines the development process and ensures the reliability of your applications. Perfect for any developer looking to enhance the quality of their LLM projects.

-

Create personalized AI applications easily with Automi AI. Customize algorithms, build and share applications effortlessly. Start exploring today!

-

Aguru AI offers a comprehensive solution for businesses, ensuring reliable, secure, and cost-effective AI applications with features like performance monitoring, behavior analysis, security protocols, cost optimization, and instant alerts.

-

Evaluate & improve your LLM applications with RagMetrics. Automate testing, measure performance, and optimize RAG systems for reliable results.

-

Struggling to ship reliable LLM apps? Parea AI helps AI teams evaluate, debug, & monitor their AI systems from dev to production. Ship with confidence.

-

Build next-gen LLM applications effortlessly with AutoGen. Simplify development, converse with agents and humans, and maximize LLM utility.

-

AutoGen Studio 2.0, Microsoft's advanced AI development tool with AI agent creation, diverse interfaces, and a powerful API, is for developers of all levels. It addresses development inefficiency and offers comprehensive solutions.

-

Ensure reliable, safe generative AI apps. Galileo AI helps AI teams evaluate, monitor, and protect applications at scale.

-

Deepchecks: The end-to-end platform for LLM evaluation. Systematically test, compare, & monitor your AI apps from dev to production. Reduce hallucinations & ship faster.

-

Privately tune and deploy open models using reinforcement learning to achieve frontier performance.

-

Struggling with unreliable Generative AI? Future AGI is your end-to-end platform for evaluation, optimization, & real-time safety. Build trusted AI faster.

-

Independent analysis of AI models and hosting providers. Choose the best model and API hosting provider for your use case.

-

LiveBench is an LLM benchmark that adds new questions from diverse sources every month and uses objective answers for accurate scoring. It currently features 18 tasks in 6 categories, with more to come.

-

besimple AI instantly generates your custom AI annotation platform. Transform raw data into high-quality training & evaluation data with AI-powered checks.

-

Debug LLMs faster with Okareo. Identify errors, monitor performance, & fine-tune for optimal results. AI development made easy.

-

Supercharge your agents with AutoGPT, an open-source toolkit. Enhance performance, customize functionality, and build smarter, more capable agents.

-

Braintrust: The end-to-end platform to develop, test & monitor reliable AI applications. Get predictable, high-quality LLM results.

-

Evaligo: Your all-in-one AI dev platform. Build, test & monitor production prompts to ship reliable AI features at scale. Prevent costly regressions.