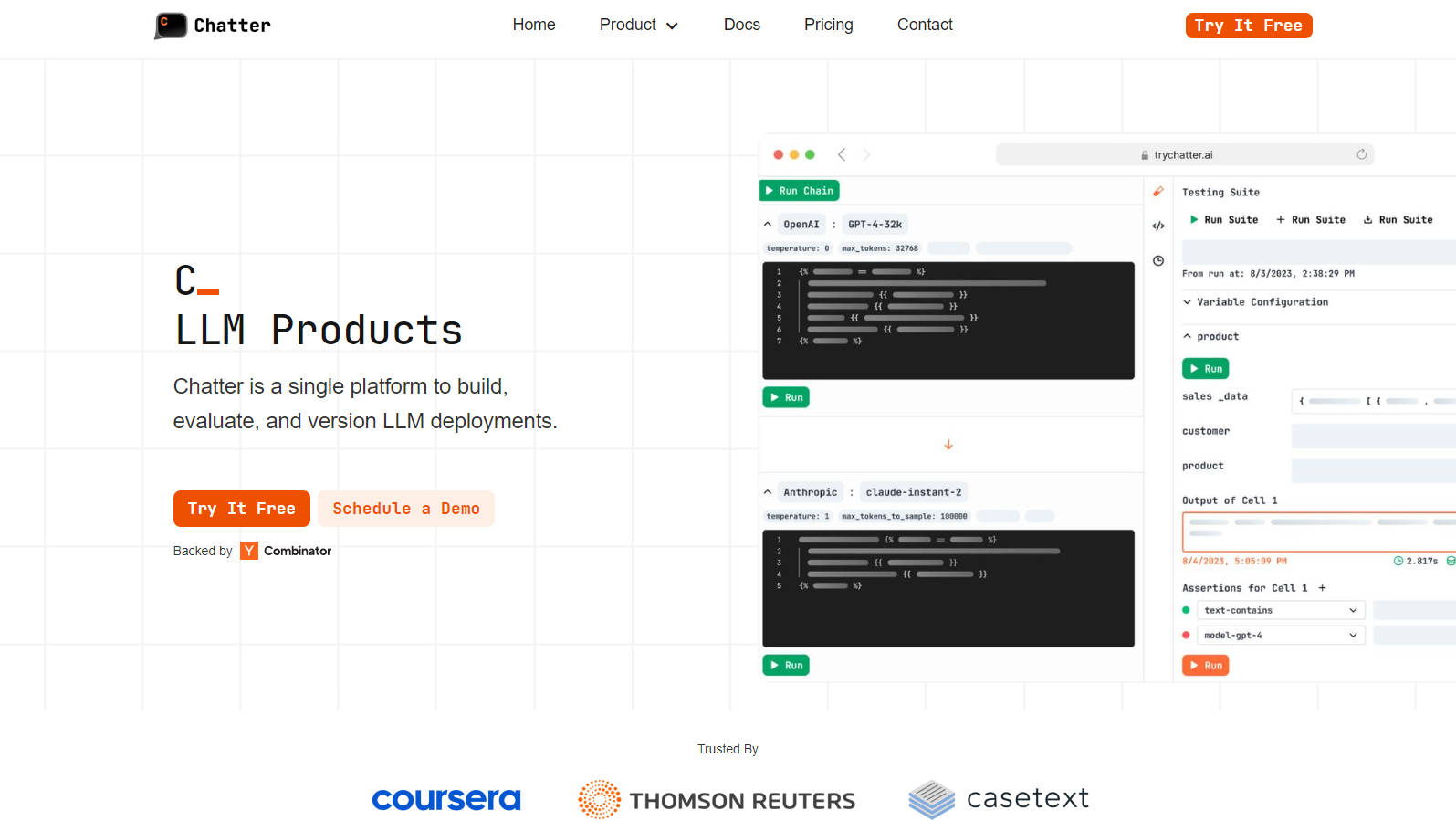

What is Chatter?

Chatter is an AI tool that simplifies deploying, testing, and managing LLM (Large Language Model) applications. It streamlines building complex chains of models, runs automated evaluations, and provides secure code separation. Chatter also supports Jinja2 templating, Retrieval-Augmented Generation (RAG), API key management, analytics, and detailed observability.
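This page does not describe how Chatter implements Retrieval-Augmented Generation (RAG), which it lists among its features. The Python sketch below illustrates only the general idea: retrieve the most relevant passages for a question, then prepend them to the prompt sent to an LLM. It is not Chatter's API. The function names are hypothetical, and the simple word-overlap ranking stands in for the embedding search a production RAG system would typically use.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by how many query words they share; return the top k."""
    query_words = tokenize(query)
    # sorted() is stable, so ties keep their original document order.
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Prepend the retrieved context to the question (hypothetical prompt format)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "The office opens at 9 am.",
        "Invoices are due within 30 days.",
        "Refunds are issued within 14 days of a return.",
    ]
    print(build_prompt("How many days until refunds are issued?", docs))
```

In a real pipeline, the returned prompt would be passed to the model call. This sketch stops at prompt assembly.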

Key Features:

1️⃣ Create Complex Chains: Build chains of models that include function calling, data manipulation, and more, and integrate them directly into your code.

2️⃣ Automated Testing: Build and maintain test suites with automatic evaluations based on metrics such as LLM-based assessment, semantic similarity, and regex matching.

3️⃣ SDK & Code Export: Keep prompt iterations separate from your codebase to speed up development and improve prompt security. Use the Jinja2 templating engine for intermediate data transformations.
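The page does not show how Chatter's evaluators are configured or called. To illustrate two of the metrics named in the Automated Testing feature, the Python sketch below scores one model output with a regex check and a similarity check. It is not Chatter's SDK. Every function name and the 0.5 pass threshold are hypothetical. The word-count cosine similarity is also a crude stand-in for the embedding-based semantic similarity a real evaluator would likely use.

```python
import math
import re
from collections import Counter


def regex_match(output: str, pattern: str) -> bool:
    """Pass if the pattern matches anywhere in the model output."""
    return re.search(pattern, output) is not None


def bag_of_words(text: str) -> Counter:
    """Count lowercase word tokens (stand-in for an embedding vector)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity of the word-count vectors of a and b, from 0.0 to 1.0."""
    va, vb = bag_of_words(a), bag_of_words(b)
    dot = sum(count * vb[word] for word, count in va.items())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(sum(c * c for c in vb.values()))
    return dot / norm if norm else 0.0  # empty text counts as no similarity


def evaluate(output: str, expected: str, pattern: str, threshold: float = 0.5) -> dict:
    """Run both hypothetical checks on a single test case."""
    similarity = cosine_similarity(output, expected)
    return {
        "regex_pass": regex_match(output, pattern),
        "similarity": round(similarity, 3),
        "similarity_pass": similarity >= threshold,
    }


if __name__ == "__main__":
    result = evaluate(
        output="The capital of France is Paris.",
        expected="Paris is the capital of France.",
        pattern=r"\bParis\b",
    )
    print(result)  # → {'regex_pass': True, 'similarity': 1.0, 'similarity_pass': True}
```

Running each test case through several independent checks lets a suite report which metric failed, rather than a single pass/fail verdict.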

Use Cases:

Streamlined Model Deployment: Chatter simplifies the deployment of Large Language Models, making it ideal for businesses looking to integrate AI capabilities into their applications quickly and efficiently.

Efficient Testing and Evaluation: Research and development teams can use Chatter's automated testing to check the accuracy and reliability of their AI models while saving time and resources.

Enhanced Code Security: Organizations concerned about code security can use Chatter's code separation features to safeguard their AI models while keeping the benefits of rapid development and testing.

Conclusion:

Chatter offers a comprehensive solution for building, testing, and managing LLM deployments. With automated testing, code separation, and complex chain creation, it accelerates AI development and helps ensure the reliability of AI applications. It is a valuable asset for businesses and teams that want to use Large Language Models effectively.


Chatter Alternatives

- Revolutionize interactions with language models. Comprehensive support for any model, privacy-focused chat, and easy AI sharing. YourChat has it all.
- Multimodal chats, endless memory, and a budget-friendly API to reshape how we communicate and create.
- One AI assistant for you or your team with access to all the state-of-the-art LLMs, web search, and image generation.
- Chat with the best LLMs (Mixtral, Llama-3, Claude-3, Gemini 1.5 Pro, Perplexity, GPT-5, SD3) all in one place.
- Mobile app that lets you build an AI chatbot and deploy it to thousands of users. Chat with AI.