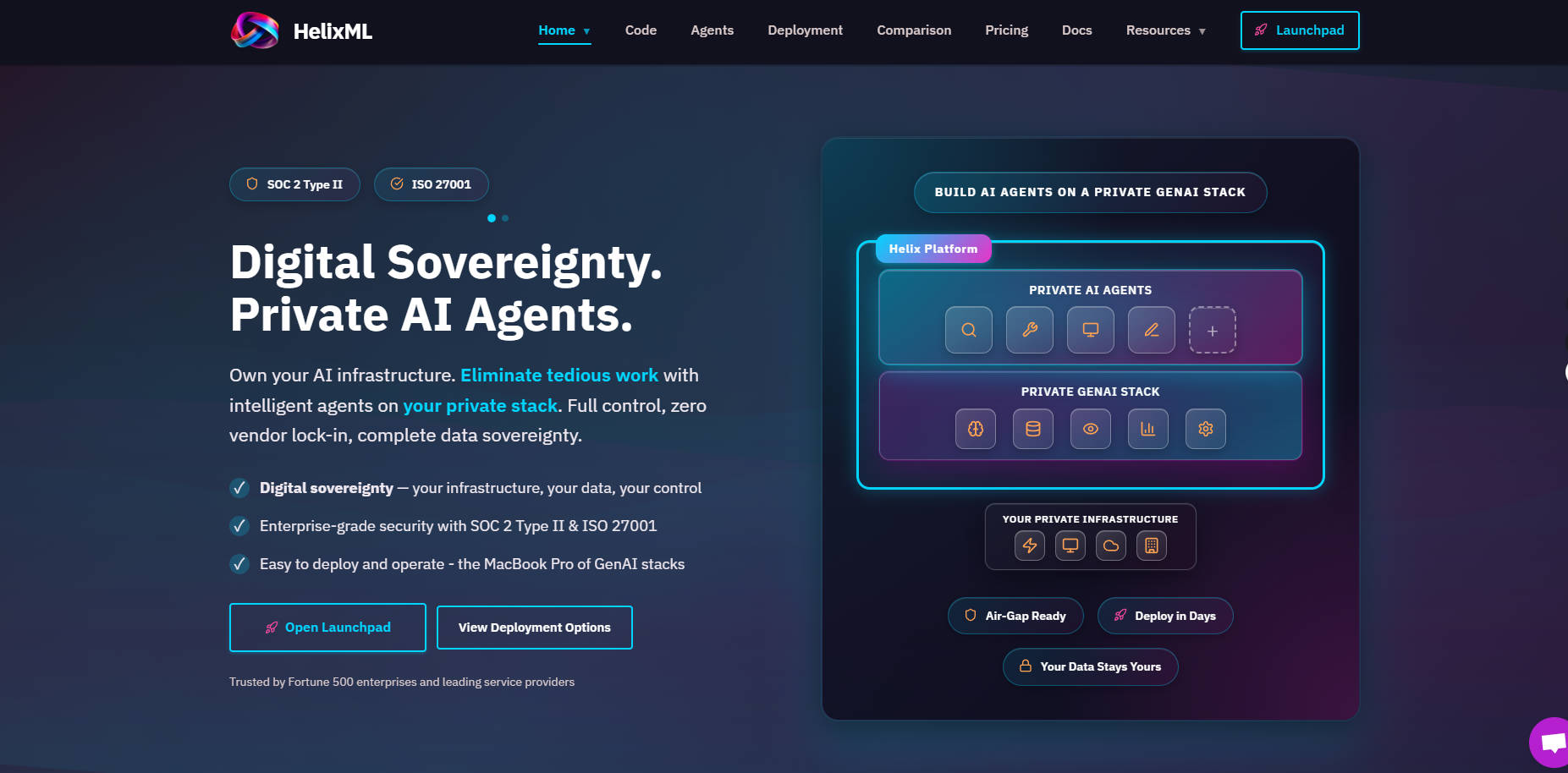

What is HelixML?

HelixML is an enterprise-grade platform for building, deploying, and managing AI agents and applications at scale. It addresses data security and regulatory compliance by letting you deploy within your own data center or Virtual Private Cloud (VPC), so your organization keeps control over its GenAI stack and sensitive data. Developers create context-aware, multi-step reasoning applications by defining their requirements in a declarative helix.yaml configuration file.

Key Features

HelixML delivers a robust, observable, and highly customizable infrastructure designed for professional AI development and deployment.

🤖 Advanced AI Agent Orchestration

Helix provides a session-based architecture that supports multi-step reasoning and complex tool orchestration, allowing agents to handle sophisticated tasks beyond simple chat. Agents utilize comprehensive memory management to maintain context across interactions, ensuring highly relevant and coherent conversational flows.

📚 Enterprise Knowledge Management (RAG)

Go beyond basic contextual injection. Helix includes built-in ingestion tools for corporate documents (PDFs, Word) and web scrapers for external content. It supports multiple RAG backends (including Typesense, Haystack, and PGVector) and incorporates Vision RAG support for integrating and searching through multimodal content, maximizing the depth and accuracy of agent responses.
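The retrieval step behind a RAG pipeline can be illustrated with a minimal sketch. This is not Helix's implementation: the bag-of-words `embed` function stands in for a real embedding model, and `retrieve` and `build_prompt` are hypothetical helper names chosen for this example. A production backend such as Typesense, Haystack, or PGVector would replace the in-memory ranking.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned vector model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Put the retrieved chunks in front of the question before calling a model."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical document chunks, as an ingestion step might produce them.
chunks = [
    "vacation policy allows 25 days per year",
    "expense reports are due monthly",
    "the vpn requires two factor login",
]
query = "how many vacation days per year"
top = retrieve(query, chunks, k=1)
```

Here `top` holds the vacation-policy chunk, because it shares the most words with the query. The same shape (embed, rank, build the prompt) applies when the ranking is delegated to a vector store.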

⚙️ Intelligent Resource Scheduling

Optimize your hardware investment with an intelligent GPU scheduler designed for efficiency. This system dynamically loads and unloads models based on demand and efficiently packs them into available GPU memory, significantly improving resource utilization and reducing operational costs compared to static deployments.
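To make the packing idea concrete, here is a minimal sketch of one common heuristic: first-fit decreasing, which places the largest models first onto the first GPU with enough free memory. It is an illustration under stated assumptions, not the scheduler Helix ships. The `Gpu` class, the function names, and the model names and sizes are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    name: str
    total_gb: float
    models: dict[str, float] = field(default_factory=dict)  # model name -> GB reserved

    @property
    def free_gb(self) -> float:
        return self.total_gb - sum(self.models.values())


def place_models(gpus: list[Gpu], demands: dict[str, float]) -> list[str]:
    """Place models largest-first on the first GPU with room; return those that did not fit."""
    unplaced = []
    for model, need_gb in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        target = next((g for g in gpus if g.free_gb >= need_gb), None)
        if target is None:
            unplaced.append(model)
        else:
            target.models[model] = need_gb
    return unplaced


def unload(gpus: list[Gpu], model: str) -> None:
    """Free the memory held by a model that is no longer in demand."""
    for g in gpus:
        g.models.pop(model, None)


# Hypothetical cluster and model sizes.
gpus = [Gpu("gpu0", 24.0), Gpu("gpu1", 24.0)]
left_over = place_models(
    gpus, {"chat-8b": 16.0, "embed": 2.0, "vision-11b": 22.0, "code-7b": 14.0}
)
```

With these numbers `code-7b` does not fit at first. Calling `unload(gpus, "vision-11b")` and then placing `code-7b` again succeeds, which is the load-and-unload-on-demand behavior described above in miniature.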

🔍 Complete Tracing and Observability

Because agents often process tens of thousands of tokens per step and interact with external systems, Helix provides complete, real-time visibility into every execution step. You can inspect requests and responses to LLM providers and third-party APIs, track real-time token usage, and perform cost analysis and performance debugging directly within the Tracing Interface.
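The kind of cost analysis a trace makes possible can be sketched as follows. The `Step` record, the model names, and the per-million-token prices are hypothetical and chosen only for illustration. They do not reflect Helix's trace format or any provider's actual pricing.

```python
from dataclasses import dataclass

# Hypothetical (input, output) prices in USD per million tokens.
PRICES = {"model-a": (3.00, 15.00), "model-b": (0.50, 1.50)}


@dataclass
class Step:
    name: str
    model: str
    prompt_tokens: int
    completion_tokens: int


def step_cost(step: Step) -> float:
    """Cost of one step: tokens times the per-million price for that model."""
    in_price, out_price = PRICES[step.model]
    return (step.prompt_tokens * in_price + step.completion_tokens * out_price) / 1_000_000


def summarize(steps: list[Step]) -> dict:
    """Aggregate token usage and cost across all steps of one agent run."""
    return {
        "total_tokens": sum(s.prompt_tokens + s.completion_tokens for s in steps),
        "total_cost_usd": round(sum(step_cost(s) for s in steps), 6),
        "most_expensive_step": max(steps, key=step_cost).name,
    }


# A hypothetical three-step agent run.
trace = [
    Step("plan", "model-a", 12_000, 400),
    Step("search_docs", "model-b", 30_000, 200),
    Step("answer", "model-a", 18_000, 900),
]
summary = summarize(trace)
```

For this trace the summary reports 61,500 tokens and flags `answer` as the most expensive step, even though `search_docs` consumed more tokens. Per-step tracing exists to surface exactly that kind of detail.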

🛠️ Seamless Tool & API Integration

Empower agents with real-world capabilities through robust tool integration. Helix supports REST API integration using OpenAPI schemas, secure third-party access via OAuth token management, and advanced scripting via integrated GPTScript, allowing agents to execute complex, real-world actions across your existing enterprise ecosystem.

Use Cases

HelixML is engineered to solve complex, data-sensitive problems across the enterprise, transforming raw data into actionable intelligence and automation.

Internal Knowledge Base Analysis: Upload vast libraries of internal corporate documents, compliance guides, and technical specifications. Deploy an internal agent that uses Vision RAG to analyze text, charts, and diagrams, providing instant, accurate answers to employee queries without compromising proprietary data security.

Automated Customer Support Agents: Build knowledge bases instantly by providing website documentation URLs and ingesting support tickets. Deploy a customer-facing agent that can intelligently answer complex questions, reducing the load on human support teams while maintaining high-quality, up-to-date responses.

Event-Driven Workflow Automation: Utilize webhook triggers, scheduled tasks, and API bindings to create proactive agents. For example, deploy an agent that monitors system logs (via webhooks), performs multi-step analysis across different APIs, and automatically generates summary reports or initiates remediation scripts based on the findings.

Why Choose HelixML?

Choosing HelixML means prioritizing security, control, and efficiency in your GenAI strategy. We provide the architectural depth necessary for mission-critical applications.

Guaranteed Data Security and Sovereignty: Unlike cloud-only managed services, Helix can be deployed in your own data center or VPC. In that configuration, all data, prompts, and inference results remain within your secured perimeter, which helps you meet stringent regulatory and compliance requirements.

Maximum Operational Efficiency: The microservices architecture and the intelligent GPU scheduler work together to keep your compute resources well utilized. Loading and unloading models on demand reduces idle GPU time and lets the platform scale under variable load, which lowers operating costs compared to static deployments.

LLM Provider Agnostic Flexibility: Helix supports major commercial LLM providers (OpenAI, Anthropic) alongside local, self-hosted models. This multi-provider capability future-proofs your applications, allowing you to easily switch or combine models based on performance, cost, and availability without rebuilding your core agent logic.
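One way to picture provider-agnostic agent logic is a shared interface with a fallback chain, sketched below. All names here (`ChatProvider`, `EchoProvider`, `FailingProvider`, `complete_with_fallback`) are hypothetical. The stand-in classes take the place of real OpenAI, Anthropic, or local-model clients, and this is not Helix's actual abstraction.

```python
from typing import Protocol


class ChatProvider(Protocol):
    """Anything with a complete() method can serve as a provider."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in for a real client; returns the prompt tagged with its label."""

    def __init__(self, label: str) -> None:
        self.label = label

    def complete(self, prompt: str) -> str:
        return f"[{self.label}] {prompt}"


class FailingProvider:
    """Stand-in for a provider that is currently unreachable."""

    def complete(self, prompt: str) -> str:
        raise ConnectionError("provider unavailable")


def complete_with_fallback(providers: list[ChatProvider], prompt: str) -> str:
    """Try each provider in order and return the first successful answer."""
    errors: list[Exception] = []
    for provider in providers:
        try:
            return provider.complete(prompt)
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(exc)
    raise RuntimeError(f"all {len(errors)} providers failed")


answer = complete_with_fallback([FailingProvider(), EchoProvider("local")], "hello")
```

Because the agent logic depends only on the `complete` method, swapping or reordering providers changes the list passed in, not the code that calls it.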

Conclusion

HelixML provides the secure foundation and sophisticated tools you need to move beyond basic LLM wrappers and deploy true enterprise-grade AI agents. Leverage its declarative configuration, deep observability, and private architecture to build powerful, context-aware solutions with confidence and complete control.

Explore how HelixML can help you secure and scale your next generation of AI applications today.

HelixML Alternatives

Build private GenAI apps with HelixML. Control your data & models with our self-hosted platform. Deploy on-prem, VPC, or our cloud.

Easily monitor, debug, and improve your production LLM features with Helicone's open-source observability platform purpose-built for AI apps.

Helicone AI Gateway: Unify & optimize your LLM APIs for production. Boost performance, cut costs, ensure reliability with intelligent routing & caching.

TaskingAI brings Firebase's simplicity to AI-native app development. Start your project by selecting an LLM, build a responsive assistant supported by stateful APIs, and enhance its capabilities with managed memory, tool integrations, and an augmented generation system.

LlamaIndex builds intelligent AI agents over your enterprise data. Power LLMs with advanced RAG, turning complex documents into reliable, actionable insights.