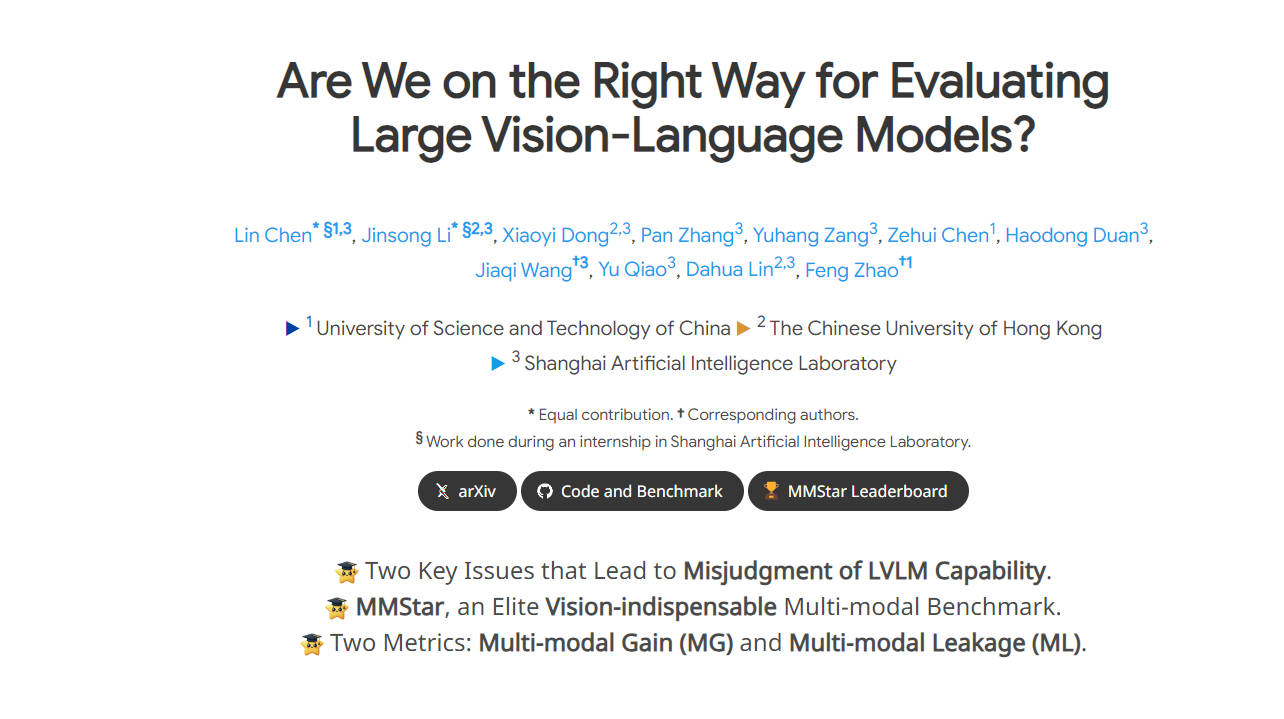

What is MMStar?

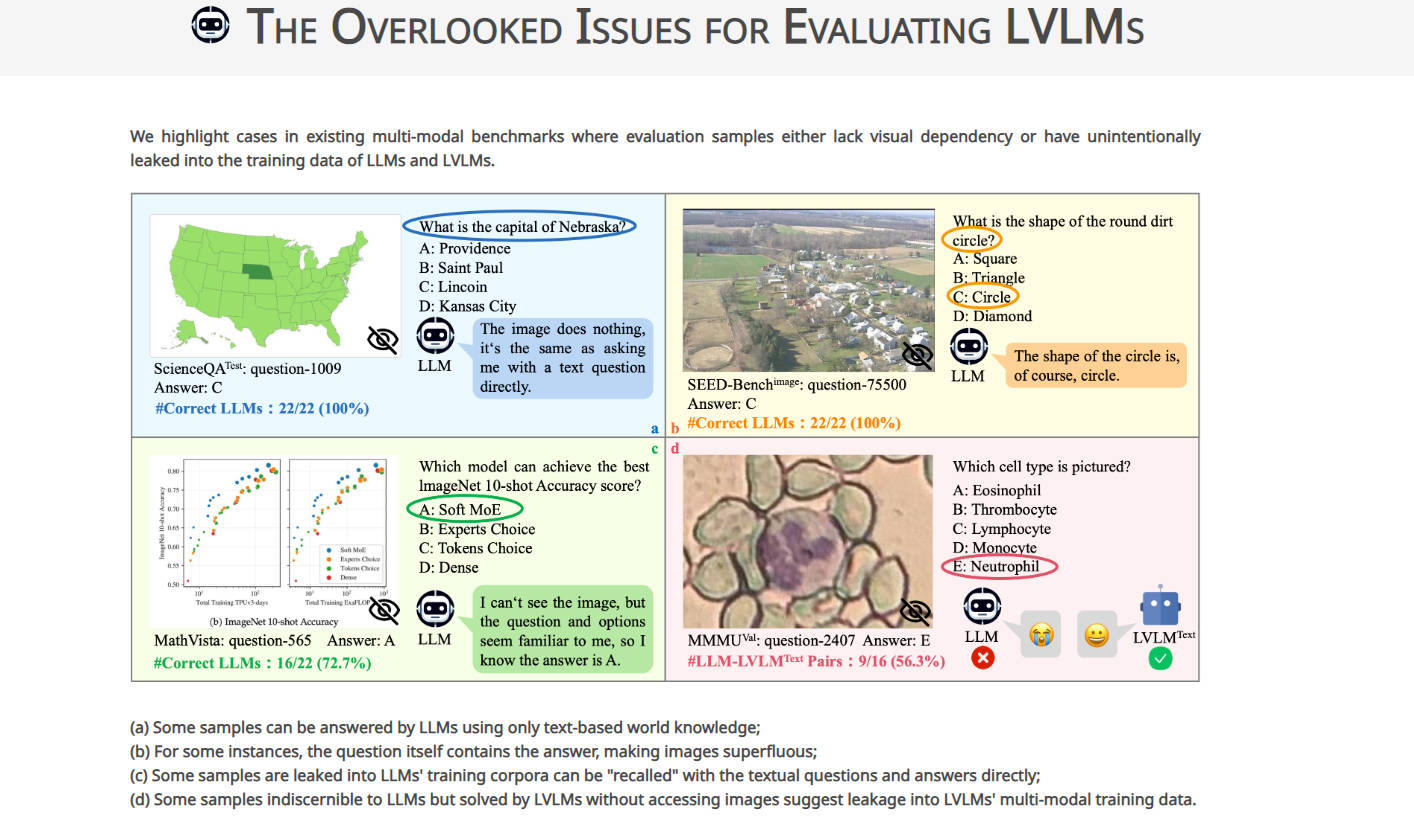

MMStar is a benchmark designed to address key issues in evaluating Large Vision-Language Models (LVLMs). It uses carefully selected, challenging samples to assess LVLMs' multi-modal capabilities, with the goal of minimizing data leakage and accurately measuring the performance gains that multi-modal training provides. By offering a balanced, purified set of samples, MMStar makes LVLM evaluation more credible and gives the research community more reliable insights.

Key Features:

Meticulously Selected Samples: MMStar comprises 1,500 challenging samples, each chosen so that answering it depends on the visual content and requires advanced multi-modal capabilities. 🎯

Comprehensive Evaluation: MMStar evaluates LVLMs on 6 core capabilities (coarse perception, fine-grained perception, instance reasoning, logical reasoning, science & technology, and mathematics) broken down into 18 detailed axes, giving a thorough assessment of multi-modal performance. 🏆

Novel Evaluation Metrics: In addition to standard accuracy, MMStar introduces two metrics, multi-modal leakage (ML) and multi-modal gain (MG), which measure data leakage and the actual performance gain from multi-modal training, respectively. Together they give a deeper picture of what an LVLM can really do. 📊
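The two metrics are simple differences between scores, which a short calculation makes concrete. The sketch below assumes the definitions from the MMStar paper: S_v is the LVLM's score with images, S_wv is the same LVLM's score with images withheld, and S_t is the score of its underlying LLM without multi-modal training. The `Scores` class, the function names, and the numbers are hypothetical, chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Scores:
    """Benchmark accuracies (in %) for one LVLM, as used by the MG/ML metrics (assumed definitions)."""
    with_image: float     # S_v: LVLM evaluated with the images
    without_image: float  # S_wv: same LVLM, images withheld
    llm_base: float       # S_t: the LVLM's underlying LLM, text only


def multimodal_gain(s: Scores) -> float:
    """MG = S_v - S_wv: how much the model actually benefits from seeing the image."""
    return s.with_image - s.without_image


def multimodal_leakage(s: Scores) -> float:
    """ML = max(0, S_wv - S_t): image-free accuracy gained beyond the LLM base,
    a sign that benchmark samples may have leaked into multi-modal training data."""
    return max(0.0, s.without_image - s.llm_base)


if __name__ == "__main__":
    # Hypothetical numbers for illustration only, not results for any real model.
    scores = Scores(with_image=50.0, without_image=30.0, llm_base=25.0)
    print(f"MG = {multimodal_gain(scores):.1f}")     # MG = 20.0
    print(f"ML = {multimodal_leakage(scores):.1f}")  # ML = 5.0
```

The desirable profile is a large MG with an ML near zero: the model's accuracy comes from reading the image rather than from having memorized the benchmark's answers.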

Use Cases:

Academic Research: Researchers can use MMStar to accurately evaluate the multi-modal capabilities of LVLMs, guiding further advances in the field.

Model Development: Developers can use MMStar to identify where their LVLMs fall short and refine them for better multi-modal performance.

Benchmark Comparison: MMStar provides a common, leakage-aware yardstick for comparing the performance of different LVLMs, supporting informed decisions in model selection.

Conclusion:

MMStar improves the evaluation of Large Vision-Language Models by addressing the critical issues of data leakage and performance measurement. With its carefully selected samples and new evaluation metrics, MMStar helps researchers and developers make informed decisions and drive advances in multi-modal AI. Adopting MMStar can help the community unlock the full potential of LVLMs and move the field forward.

MMStar Alternatives


- OpenMMLab is an open-source platform that focuses on computer vision research. It offers a codebase

- With a total of 8B parameters, the model surpasses proprietary models such as GPT-4V-1106, Gemini Pro, Qwen-VL-Max and Claude 3 in overall performance.

- Cambrian-1 is a family of multimodal LLMs with a vision-centric design.

- StarCoder and StarCoderBase are Large Language Models for Code (Code LLMs) trained on permissively licensed data from GitHub, including from 80+ programming languages, Git commits, GitHub issues, and Jupyter notebooks.

- GLM-4.5V: Empower your AI with advanced vision. Generate web code from screenshots, automate GUIs, and analyze documents and video with deep reasoning.