What is Phi-3 Mini-128K-Instruct ONNX?

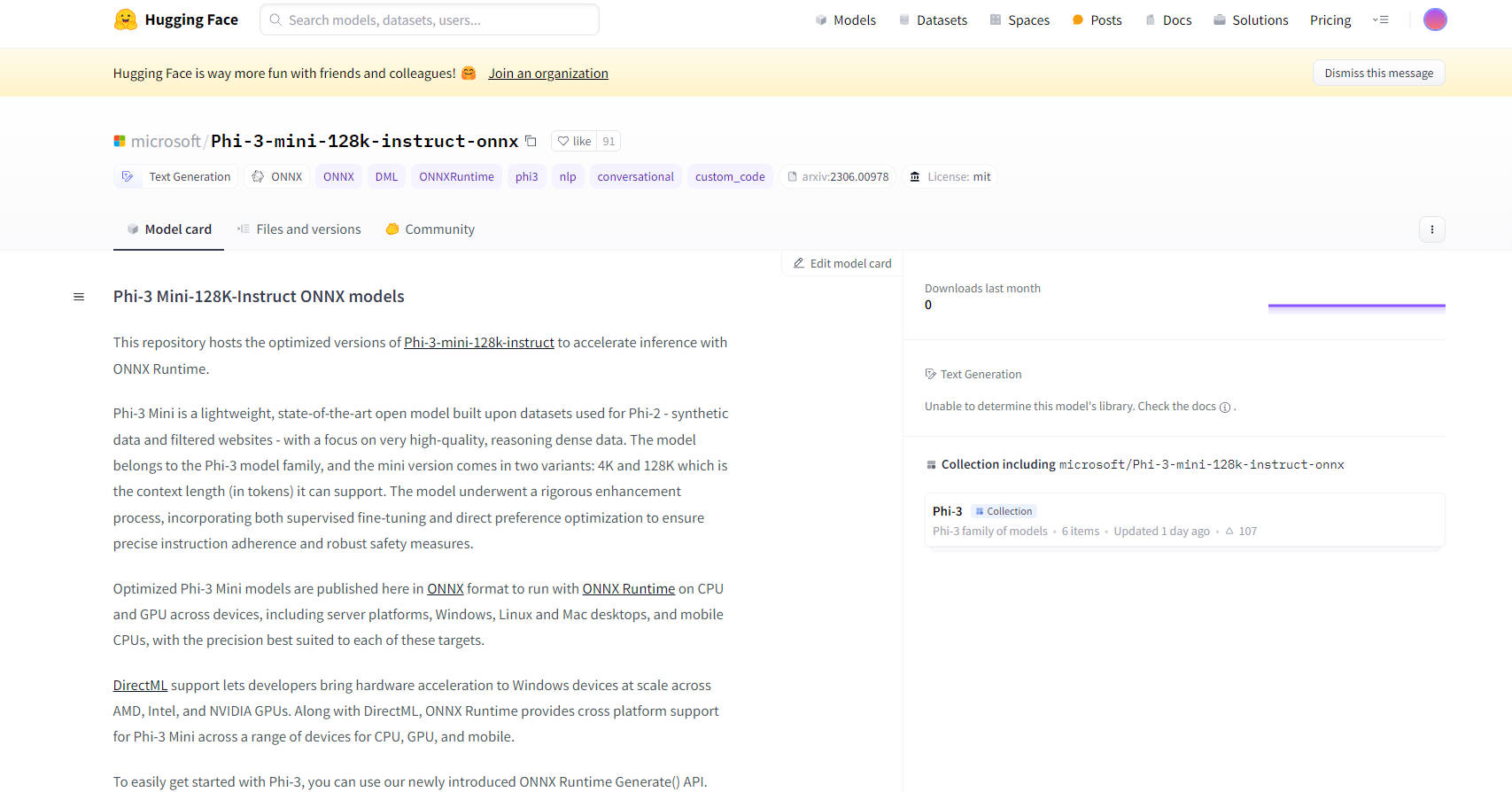

Microsoft’s Phi-3 Mini-128K-Instruct ONNX models are open-source builds of Phi-3 Mini optimized for inference with ONNX Runtime across CPUs, GPUs, and mobile devices. Phi-3 Mini was trained with a focus on high-quality, reasoning-dense data, and the 128K variant supports a context length of 128K tokens. The model was further tuned for instruction adherence and safety, which suits it to complex instruction-following tasks.

Key Features:

Optimized Performance: The models ship in ONNX format and run efficiently with ONNX Runtime on devices ranging from servers to mobile phones. DirectML support enables hardware acceleration on Windows devices with AMD, Intel, and NVIDIA GPUs.
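
As a rough illustration of how an application might choose among these backends, the sketch below picks an ONNX Runtime execution provider from whatever the installed build reports. The provider names are the ones ONNX Runtime uses; the preference order and the `pick_provider` helper are assumptions made for this example, not part of the Phi-3 release.

```python
# Preference order is an assumption for illustration: GPU backends first, CPU last.
PREFERRED_PROVIDERS = [
    "CUDAExecutionProvider",  # NVIDIA GPUs via CUDA
    "DmlExecutionProvider",   # DirectML on Windows (AMD, Intel, NVIDIA GPUs)
    "CPUExecutionProvider",   # fallback that needs no accelerator
]


def pick_provider(available):
    """Return the first preferred provider that appears in `available`."""
    for name in PREFERRED_PROVIDERS:
        if name in available:
            return name
    raise RuntimeError("no supported execution provider found")


if __name__ == "__main__":
    try:
        import onnxruntime as ort  # optional; only needed to query the real build
        available = ort.get_available_providers()
    except ImportError:
        available = ["CPUExecutionProvider"]
    print(pick_provider(available))
```

Which provider is actually available depends on the ONNX Runtime package installed, for example a DirectML or CUDA build.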

Cross-Platform Compatibility: The models run on Windows, Linux, and Mac desktops as well as mobile CPUs, which makes them usable across a wide range of applications.

Advanced Quantization Techniques: The models use Activation Aware Quantization (AWQ), which identifies the top 1% most salient weights needed to maintain accuracy and quantizes the remaining 99%. This results in less accuracy loss than many other quantization techniques.
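
The idea of protecting the most salient weights can be illustrated with a toy example. The sketch below ranks input channels by activation magnitude, leaves the top fraction untouched, and rounds the rest to a 4-bit grid. It is a deliberate simplification: the published AWQ method protects salient channels by rescaling them before quantization rather than keeping them in full precision, and all function names here are hypothetical.

```python
def quantize_int4(values):
    """Symmetric 4-bit round-to-nearest: snap values to a grid of integers in [-8, 7]."""
    if not values:
        return []
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / 7.0
    return [max(-8, min(7, round(v / scale))) * scale for v in values]


def salient_channels(activation_magnitudes, fraction=0.01):
    """Indices of the channels with the largest activation magnitude (at least one)."""
    keep = max(1, round(len(activation_magnitudes) * fraction))
    ranked = sorted(
        range(len(activation_magnitudes)),
        key=lambda i: activation_magnitudes[i],
        reverse=True,
    )
    return set(ranked[:keep])


def quantize_row(weights, activation_magnitudes, fraction=0.01):
    """Quantize one weight row, leaving the salient channels untouched."""
    salient = salient_channels(activation_magnitudes, fraction)
    rest = [w for i, w in enumerate(weights) if i not in salient]
    quantized = iter(quantize_int4(rest))
    return [w if i in salient else next(quantized) for i, w in enumerate(weights)]


if __name__ == "__main__":
    weights = [0.5, -0.25, 0.123, 0.9]
    activations = [0.1, 0.2, 5.0, 0.3]  # channel 2 sees the largest activations
    print(quantize_row(weights, activations, fraction=0.25))
```

In this example the weight on channel 2 is preserved exactly, while the other three are snapped to the nearest 4-bit level.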

Ease of Integration: The ONNX Runtime Generate() API simplifies integrating Phi-3 Mini models into applications, so developers can incorporate large language models (LLMs) into their software more easily.
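
A minimal sketch of what a call through the Generate() API might look like in Python is shown below. It assumes the `onnxruntime-genai` package is installed and that `model_dir` (a hypothetical path) points to a downloaded model folder. The method names follow the package's published Python examples for recent releases; older releases used a slightly different loop, so treat this as a starting point rather than a definitive implementation. The prompt markers follow the Phi-3 chat format.

```python
def build_prompt(user_message):
    """Wrap a user message in the Phi-3 chat markers."""
    return f"<|user|>\n{user_message}<|end|>\n<|assistant|>"


def generate(model_dir, user_message, max_length=512):
    """Generate a reply token by token. Requires `pip install onnxruntime-genai`."""
    import onnxruntime_genai as og  # imported lazily so build_prompt works without it

    model = og.Model(model_dir)  # model_dir: hypothetical path to the ONNX model folder
    tokenizer = og.Tokenizer(model)
    stream = tokenizer.create_stream()

    params = og.GeneratorParams(model)
    params.set_search_options(max_length=max_length)

    # Assumes a recent release where the prompt is appended to the generator.
    generator = og.Generator(model, params)
    generator.append_tokens(tokenizer.encode(build_prompt(user_message)))

    pieces = []
    while not generator.is_done():
        generator.generate_next_token()
        pieces.append(stream.decode(generator.get_next_tokens()[0]))
    return "".join(pieces)
```

Calling `generate("path/to/model", "What is ONNX Runtime?")` would return the decoded reply as a single string; the path is a placeholder.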

Comprehensive Documentation and Support: Detailed documentation and community support are available, including getting-started instructions for the models.

Use Cases:

Conversational AI: Suited to chatbots and virtual assistants, the Phi-3 Mini-128K-Instruct ONNX models can handle complex queries and maintain coherent, context-aware conversations.

Text Generation: The models generate high-quality, contextually relevant text, useful for content creation, summarization, and more.

Natural Language Processing (NLP): The models are well suited to NLP tasks such as language translation, sentiment analysis, and information extraction.

Target Audience:

AI Developers and Researchers: Ideal for those working on NLP projects, AI research, and developing smart applications.

Enterprises: Suitable for businesses looking to integrate advanced AI capabilities into their products or services, particularly in areas like customer service and content generation.

Educational Institutions: Beneficial for academic research and teaching advanced AI and machine learning concepts.

Hardware Requirements:

Minimum Configuration: On Windows, a DirectX 12-capable GPU and at least 4GB of combined RAM. For CUDA, an NVIDIA GPU with Compute Capability >= 7.0 (e.g., V100 or newer).
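
The CUDA requirement corresponds to compute capability 7.0, the `sm_70` architecture of the V100. As a small illustration, the sketch below compares a compute capability string against that minimum. The helper names are hypothetical, and the version string is assumed to come from vendor documentation or a tool of your choice.

```python
MIN_COMPUTE_CAPABILITY = (7, 0)  # V100 generation


def parse_compute_capability(text):
    """Turn a string such as '8.0' into a comparable (major, minor) tuple."""
    major, minor = text.strip().split(".")
    return int(major), int(minor)


def meets_cuda_requirement(text):
    """True if the given compute capability is at least 7.0."""
    return parse_compute_capability(text) >= MIN_COMPUTE_CAPABILITY


if __name__ == "__main__":
    for capability in ("6.1", "7.0", "8.0"):
        print(capability, meets_cuda_requirement(capability))
```

Comparing tuples rather than floats avoids surprises with values such as 7.5 versus 7.10.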

Tested Hardware: RTX 4090 (DirectML), 1 A100 80GB GPU (CUDA), Standard F64s v2 (64 vCPUs, 128 GiB memory), and Samsung Galaxy S21.

Performance Metrics:

The models run faster in ONNX Runtime than in PyTorch: up to 5X faster with FP16 CUDA and up to 9X faster with INT4 CUDA.

Conclusion:

Microsoft’s Phi-3 Mini-128K-Instruct ONNX models combine cross-platform ONNX Runtime support, AWQ quantization, and the Generate() API. That combination of versatility, ease of integration, and performance makes them a strong choice for applications ranging from conversational AI to complex NLP tasks.

Phi-3 Mini-128K-Instruct ONNX Alternatives

ONNX Runtime: Run ML models faster, anywhere. Accelerate inference & training across platforms. PyTorch, TensorFlow & more supported!

Phi-2 is an ideal model for researchers to explore different areas such as mechanistic interpretability, safety improvements, and fine-tuning experiments.

MiniCPM3-4B is the third generation of the MiniCPM series. Its overall performance surpasses Phi-3.5-mini-Instruct and GPT-3.5-Turbo-0125 and is comparable with many recent 7B~9B models.

Gemma 3 270M: Compact, hyper-efficient AI for specialized tasks. Fine-tune for precise instruction following & low-cost, on-device deployment.