What is Plano?

Building an AI agent prototype is often straightforward, but delivering that agent to a production environment is notoriously complex. Developers frequently get bogged down building "hidden AI middleware"—the repetitive plumbing required for routing, security guardrails, and observability that usually litters application code.

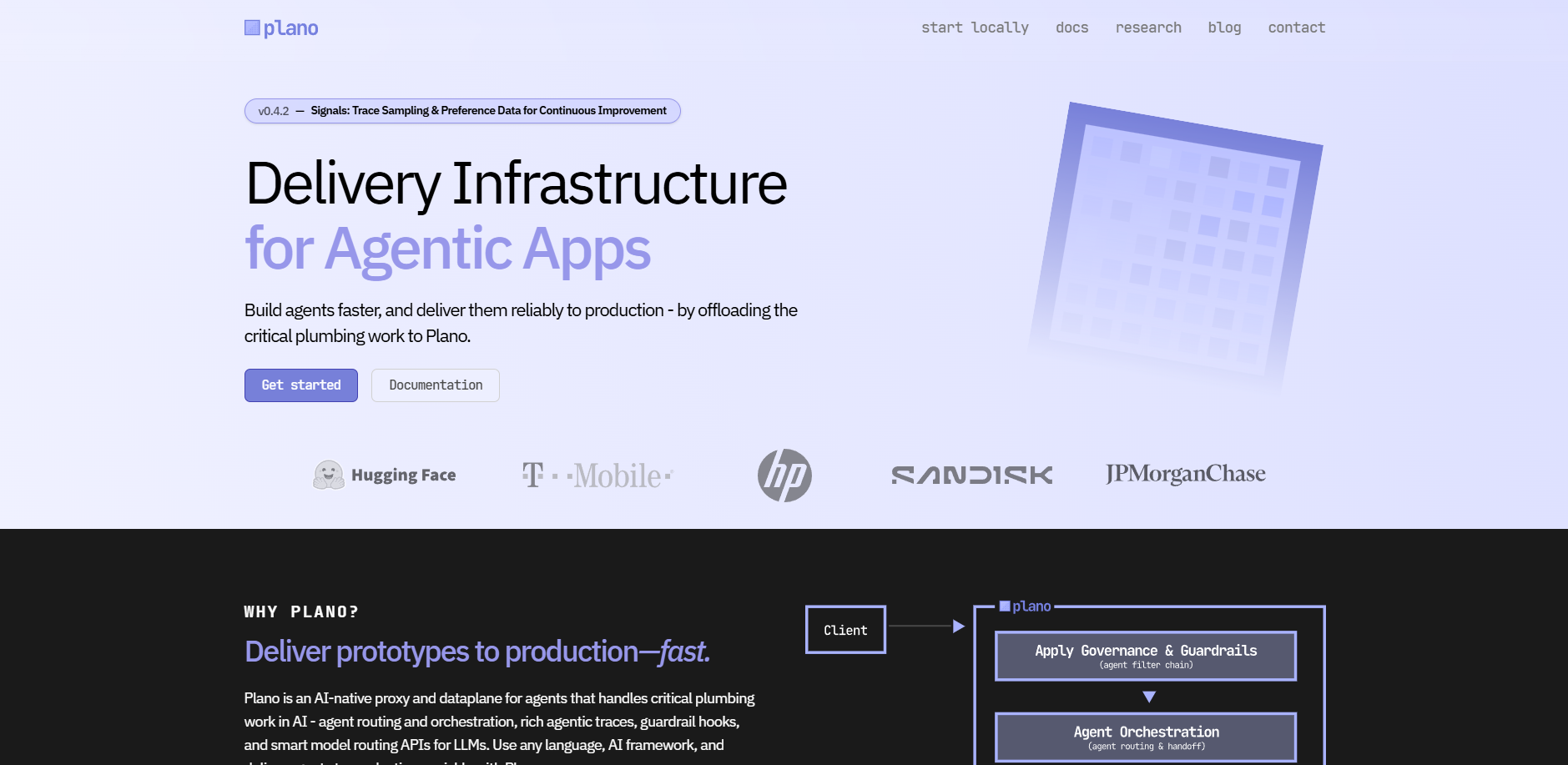

Plano is an AI-native delivery infrastructure and data plane designed to offload this critical plumbing. By acting as a proxy between your application and your LLMs, Plano centralizes orchestration, security, and monitoring in one place. This allows your team to focus on refining agent logic and user experience rather than managing infrastructure overhead.

Key Features

- 🚦 Framework-Agnostic Orchestration: Plano handles low-latency routing between multiple agents and LLMs without requiring changes to your application code. By moving orchestration into a centralized data plane, you can evolve your routing strategies and add new agents without the risk of tight coupling or code duplication.

- 🛡️ Centralized Guardrails & Filters: Protect your applications with built-in jailbreak protection, content policies, and context workflows. These "Filter Chains" are applied at the data plane level, ensuring consistent security and governance across your entire stack without re-implementing logic in every service.

- 🔗 Provider-Agnostic Model Agility: Route requests by model name, semantic alias, or automatic preference to stay decoupled from specific providers. Plano’s smart routing and unified API allow you to swap or add models instantly, handling retries and failovers automatically to ensure continuous availability.

- 🕵 Zero-Code Agentic Signals™: Capture detailed behavior traces, token usage, and performance metrics across every interaction automatically. Built on OpenTelemetry and W3C standards, Plano provides deep visibility into agent performance and latency (TFT/TOT) without requiring manual instrumentation.

- 🏗️ Protocol-Native Sidecar Architecture: Built on the battle-tested Envoy Proxy, Plano runs as a self-contained process alongside your application. This sidecar model avoids the "library tax," allowing it to work with any programming language—including Python, Java, and Go—while scaling linearly with your traffic.
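As a minimal sketch of what the unified API and sidecar model mean for application code, the example below builds a chat request addressed to a local proxy instead of a specific provider. The listener address, the OpenAI-style payload shape, and the alias `fast-summarizer` are assumptions made for illustration, not values taken from Plano's documentation; check your own configuration for the real ones.

```python
import json
import urllib.request

# Assumption: the sidecar listens locally and accepts OpenAI-style chat requests.
# The address below is a hypothetical placeholder, not a documented value.
PROXY_URL = "http://localhost:12000/v1/chat/completions"


def build_chat_request(
    model: str, user_text: str, proxy_url: str = PROXY_URL
) -> urllib.request.Request:
    """Build (but do not send) a chat request addressed to the local proxy."""
    payload = {
        # A model name or alias; the proxy, not the app, decides which provider serves it.
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        proxy_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # "fast-summarizer" is a hypothetical alias used only for this example.
    req = build_chat_request("fast-summarizer", "Summarize this ticket")
    print(req.get_method(), req.full_url)
    # Sending is left to the caller, e.g. urllib.request.urlopen(req),
    # once a proxy is actually running at that address.
```

Because the application only names an alias and a local address, swapping the provider behind that alias would not require touching this code.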

Use Cases

Scaling Multi-Agent Workflows: In a complex system where different agents handle coding, research, and data entry, Plano acts as the traffic controller. It analyzes user intent and conversation context to route each request to the most appropriate agent or sequence of agents, so every task is handled by the agent best suited to it.
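The routing decision can be pictured as a function from a conversation to an agent name. The sketch below is a toy stand-in: it scores agents by keyword overlap, whereas Plano is described as using a dedicated routing model. The agent names, keyword lists, and the `route` function are all hypothetical.

```python
import re
from typing import Dict, List

# Hypothetical agents and keywords, for illustration only.
AGENTS: Dict[str, List[str]] = {
    "coding": ["bug", "code", "function", "compile"],
    "research": ["paper", "sources", "summarize", "compare"],
    "data_entry": ["spreadsheet", "form", "record", "invoice"],
}


def route(conversation: List[str], default: str = "research") -> str:
    """Pick the agent whose keywords overlap most with the conversation."""
    words = set(re.findall(r"[a-z]+", " ".join(conversation).lower()))
    scores = {name: len(words & set(keywords)) for name, keywords in AGENTS.items()}
    best = max(scores, key=scores.get)
    # Fall back to a default agent when nothing matches.
    return best if scores[best] > 0 else default


if __name__ == "__main__":
    print(route(["There is a bug in this function."]))
```

The point of the sketch is where the decision lives: in one routing layer that sees the whole conversation, rather than in branching logic scattered across each agent.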

Standardizing Enterprise Security: For engineering teams operating in regulated environments, Plano provides a single point of control for safety policies. You can apply redaction, retrieval hooks, and jailbreak filters across every agentic interaction in the company, ensuring compliance is enforced at the infrastructure level rather than relying on individual developer implementation.
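Conceptually, a filter chain is an ordered list of steps that each either transform a prompt or reject it. The sketch below illustrates that idea in plain Python; it is not Plano's filter API, and the regex and keyword check are deliberately simplistic placeholders for real redaction and jailbreak detection.

```python
import re
from typing import Callable, List

Filter = Callable[[str], str]


class BlockedRequest(Exception):
    """Raised when a filter rejects a prompt."""


def redact_emails(text: str) -> str:
    # Simplistic pattern, good enough for the illustration only.
    return re.sub(r"[\w.+-]+@[\w-]+\.[\w.-]+", "[REDACTED_EMAIL]", text)


def reject_jailbreak(text: str) -> str:
    # Toy keyword check standing in for a real jailbreak classifier.
    if "ignore previous instructions" in text.lower():
        raise BlockedRequest("possible jailbreak attempt")
    return text


def run_chain(filters: List[Filter], text: str) -> str:
    """Apply each filter in order; any filter may raise to stop the request."""
    for apply_filter in filters:
        text = apply_filter(text)
    return text


if __name__ == "__main__":
    chain = [redact_emails, reject_jailbreak]
    print(run_chain(chain, "Contact bob@example.com about the refund"))
```

Running the same chain in front of every service is what keeps the policy consistent; individual services never see the unredacted prompt.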

Rapid Model Benchmarking and Migration: If a new, more cost-effective LLM is released, you can use Plano to redirect a percentage of your traffic to the new provider for testing. Because your application communicates with Plano’s unified API, you can swap backend providers or update model versions in the configuration file without refactoring a single line of application code.
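One common way to send a fixed percentage of traffic to a candidate model is deterministic bucketing on a request or session identifier. The sketch below shows that general technique; it is an assumption about how such a split could work, not a description of Plano's internals, and the model names are hypothetical.

```python
import hashlib


def pick_model(request_id: str, candidate: str, stable: str, candidate_percent: int) -> str:
    """Map a request ID to one of 100 buckets and pick a model by bucket."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # value in 0..99
    return candidate if bucket < candidate_percent else stable


if __name__ == "__main__":
    # Hypothetical model names; 10% of request IDs should land on the candidate.
    ids = [f"req-{i}" for i in range(10_000)]
    hits = sum(pick_model(i, "new-model", "current-model", 10) == "new-model" for i in ids)
    print(f"{hits / len(ids):.1%} of requests routed to the candidate")
```

Hashing the identifier rather than drawing a random number means the same request ID always lands on the same model, which makes test results easier to compare and reproduce.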

Why Choose Plano?

Plano distinguishes itself by moving away from the fragile, library-based abstractions that currently dominate the AI landscape.

- Production-Grade Foundation: While many tools are built as wrappers around specific AI frameworks, Plano is built on Envoy Proxy, the same technology used by companies like Google, Stripe, and Netflix to handle massive-scale traffic.

- Task-Specific LLMs (TLMs): Unlike standard proxies, Plano utilizes specialized, high-efficiency models (like Plano-Orchestrator) specifically engineered for fast, accurate routing and function calling.

- Operational Decoupling: Because Plano is out-of-process, you can upgrade your AI infrastructure, change security policies, or update model routing independently of your application’s deployment cycle. This reduces the "horrid pain" of managing library dependencies across multiple microservices.

Conclusion

Plano transforms the way teams move from AI experiments to reliable, production-scale applications. By standardizing the "hidden middleware" of agentic apps, it provides the stability and observability required for professional software delivery. You gain the freedom to iterate on your agents' core intelligence while relying on a hardened, scalable foundation to handle the complexities of the modern AI stack.