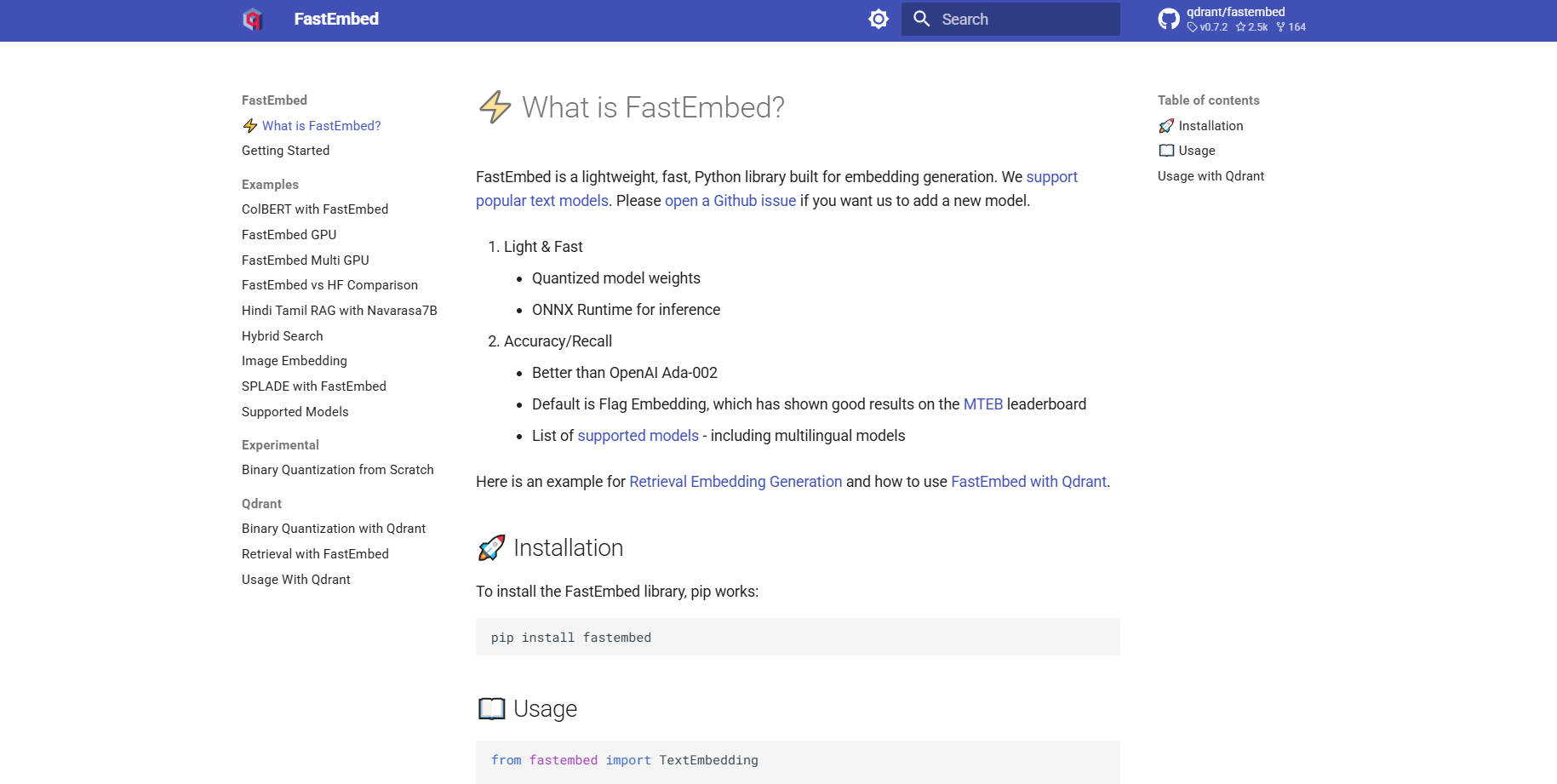

What is FastEmbed?

FastEmbed is a powerful yet remarkably lightweight Python library engineered specifically for high-speed, accurate embedding generation across diverse modalities. It solves the common performance and dependency bloat issues associated with traditional deep learning libraries by leveraging the efficient ONNX Runtime. Designed for developers and MLOps teams, FastEmbed delivers production-ready vector embeddings quickly, making it the ideal foundation for building robust Retrieval-Augmented Generation (RAG) systems and efficient serverless applications.

Key Features

FastEmbed is built to handle the complexity of modern RAG pipelines, offering robust support for various embedding types and optimization techniques while maintaining a minimal footprint.

⚡️ Optimized Text Embedding (Dense & Sparse)

FastEmbed generates dense vectors with popular text models, using the highly performant Flag Embedding as its default. It also supports sparse embeddings such as SPLADE++, so you can choose the retrieval strategy that fits your data, whether semantic similarity over dense vectors or efficient keyword-style matching over sparse ones, and it allows custom models to be integrated.
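
To make the dense/sparse distinction concrete, here is a minimal sketch that uses hand-written toy vectors. It does not call FastEmbed: the vectors, the index numbers, and the function names are all illustrative assumptions. Dense vectors are compared with cosine similarity, while sparse vectors (stored as index-to-weight mappings, the general shape a SPLADE-style model produces) are compared with a dot product over the indices they share.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # define similarity with a zero vector as 0
    return dot / (norm_a * norm_b)


def sparse_dot(a, b):
    """Dot product of two sparse vectors stored as {index: weight} dicts."""
    if len(a) > len(b):
        a, b = b, a  # iterate over the smaller mapping
    return sum(weight * b[index] for index, weight in a.items() if index in b)


# Toy data (assumed values, not real model output).
dense_query = [0.1, 0.9, 0.2]
dense_docs = {"doc_a": [0.1, 0.8, 0.3], "doc_b": [0.9, 0.1, 0.0]}

sparse_query = {101: 1.2, 2045: 0.7}
sparse_docs = {
    "doc_a": {101: 0.9, 777: 0.4},
    "doc_b": {2045: 0.5, 3000: 1.1},
}

# Rank document ids from most to least similar under each scheme.
dense_ranking = sorted(
    dense_docs,
    key=lambda doc_id: cosine_similarity(dense_query, dense_docs[doc_id]),
    reverse=True,
)
sparse_ranking = sorted(
    sparse_docs,
    key=lambda doc_id: sparse_dot(sparse_query, sparse_docs[doc_id]),
    reverse=True,
)
```

A sparse score only grows when the query and document share an index, which is why sparse retrieval behaves like weighted keyword matching. Dense scores compare every dimension, so they can match text that shares no exact terms.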

🖼️ Comprehensive Multimodal Support

Expand your retrieval capabilities beyond text. FastEmbed incorporates specialized support for generating image embeddings (e.g., using CLIP models) and advanced Late Interaction Multimodal Models (ColPali). This allows you to unify indexing across images and text queries, enabling complex multimodal search within a single framework.

🚀 Performance Acceleration via ONNX Runtime

Unlike libraries that rely on heavy PyTorch dependencies, FastEmbed utilizes the streamlined ONNX Runtime. This technical choice drastically reduces the library size, minimizes external dependencies, and accelerates execution speed, ensuring faster initialization and superior throughput compared to conventional embedding pipelines.

⚙️ Built-in Reranking and Interaction Models

Improve the precision of your search results with the integrated TextCrossEncoder utility, which supports state-of-the-art reranking models. The library also supports late interaction models such as ColBERT, which score a query against each retrieved passage at the token level for more nuanced, context-aware ranking.
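
The late interaction idea can be illustrated with a short sketch of MaxSim scoring, the scoring rule generally associated with ColBERT-style models. This is not FastEmbed's internal implementation: the function names and the two-dimensional toy token vectors are assumptions chosen to keep the arithmetic easy to follow. Each query token is matched to its best-scoring passage token, and the per-token maxima are summed.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_tokens, passage_tokens):
    """Sum, over query tokens, of the best dot product with any passage token."""
    if not passage_tokens:
        return 0.0
    return sum(
        max(dot(q, p) for p in passage_tokens)
        for q in query_tokens
    )


def rerank(query_tokens, candidates):
    """Return candidate ids ordered from highest to lowest MaxSim score."""
    scored = [
        (maxsim_score(query_tokens, tokens), passage_id)
        for passage_id, tokens in candidates.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage_id for _, passage_id in scored]


# Toy token embeddings (assumed values): one vector per token.
query = [[1.0, 0.0], [0.0, 1.0]]
candidates = {
    "p1": [[1.0, 0.0], [0.5, 0.5]],
    "p2": [[0.0, 1.0], [1.0, 0.0]],
    "p3": [[0.2, 0.1]],
}

ranking = rerank(query, candidates)
```

Because every passage keeps one vector per token, this approach costs more storage than a single dense vector per passage, which is why it is typically applied to a short list of already retrieved candidates.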

📈 Scalable Data Parallelism

For users dealing with massive datasets, FastEmbed can apply data parallelism when encoding large batches of text, splitting the work across multiple worker processes. This enables high-throughput processing and efficient use of available cores, significantly speeding up the initial vectorization and indexing phases of large-scale projects.
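
As a rough sketch of how this looks in code, the helper below forwards `batch_size` and `parallel` to a model's `embed` method. It assumes the `TextEmbedding.embed(documents, batch_size=..., parallel=...)` call found in FastEmbed's Python API, where `parallel=None` keeps single-process encoding, a value above 1 requests that many workers, and 0 requests all available cores. Verify this against the version you have installed. The `embed_corpus` helper and the `FakeModel` stand-in are hypothetical names, added so the example runs without downloading a model.

```python
def embed_corpus(documents, model=None, batch_size=256, parallel=None):
    """Embed documents and return one vector (as a list) per document.

    model: any object exposing embed(documents, batch_size=..., parallel=...)
        that yields one vector per document. If omitted, a default
        fastembed TextEmbedding is created (needs the fastembed package
        and downloads model weights on first use).
    parallel: None for single-process encoding, an integer above 1 for
        that many worker processes, 0 for all available cores.
    """
    if model is None:
        from fastembed import TextEmbedding  # lazy import: optional dependency
        model = TextEmbedding()
    return [
        list(vector)
        for vector in model.embed(documents, batch_size=batch_size, parallel=parallel)
    ]


class FakeModel:
    """Hypothetical stand-in that mimics the embed() interface for the demo."""

    def __init__(self):
        self.calls = []

    def embed(self, documents, batch_size=256, parallel=None):
        # Record the arguments so the demo can show what was forwarded.
        self.calls.append({"batch_size": batch_size, "parallel": parallel})
        for text in documents:
            # Toy "embedding": character count and word count.
            yield [float(len(text)), float(text.count(" ") + 1)]


fake = FakeModel()
vectors = embed_corpus(["hello world", "fast"], model=fake, batch_size=2, parallel=0)
```

With a real model you would call `embed_corpus(documents, parallel=0)` and omit `model`. Because worker processes are involved, it is safest to make that call from inside an `if __name__ == "__main__":` block on platforms that spawn new interpreters.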

Use Cases

FastEmbed is optimized for environments where performance, size, and accuracy cannot be compromised.

1. High-Efficiency Serverless Deployment

FastEmbed's lightweight architecture and reliance on the ONNX Runtime make it an excellent candidate for serverless environments, such as AWS Lambda. You can deploy embedding generation services as microservices without worrying about multi-gigabyte dependency packages, ensuring rapid cold starts and cost-effective scaling.
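
One common pattern for keeping cold starts short is to load the model once per container and reuse it across warm invocations. The sketch below shows that pattern under several assumptions: the event shape (`{"texts": [...]}`), the `get_model` helper, and the optional `factory` argument are hypothetical, and `StubModel` replaces the real model so the example runs anywhere. In a real deployment the default factory would be `fastembed.TextEmbedding`.

```python
import json

_MODEL = None  # cached at module level so warm invocations reuse it


def get_model(factory=None):
    """Create the embedding model on first use and cache it afterwards."""
    global _MODEL
    if _MODEL is None:
        if factory is None:
            from fastembed import TextEmbedding  # lazy import: optional dependency
            factory = TextEmbedding
        _MODEL = factory()
    return _MODEL


def handler(event, context, factory=None):
    """Lambda-style entry point; expects an event like {"texts": ["..."]}."""
    texts = event.get("texts", [])
    model = get_model(factory)
    # Convert each vector to plain floats so it can be JSON-serialized.
    embeddings = [[float(x) for x in vector] for vector in model.embed(texts)]
    return {"statusCode": 200, "body": json.dumps({"embeddings": embeddings})}


class StubModel:
    """Hypothetical stand-in model that counts how often it is constructed."""

    instances = 0

    def __init__(self):
        StubModel.instances += 1

    def embed(self, texts):
        for text in texts:
            yield [float(len(text))]  # toy one-dimensional "embedding"


first = handler({"texts": ["abc", "de"]}, None, factory=StubModel)
second = handler({"texts": ["x"]}, None, factory=StubModel)
```

Both calls share a single `StubModel` instance, which mirrors how a warm container avoids paying the model-loading cost again.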

2. Rapid Indexing for Enterprise RAG Systems

When migrating or updating a large corporate knowledge base, you need speed. Use FastEmbed’s data parallelism capabilities to rapidly encode millions of documents into vectors. By integrating directly with a vector database such as Qdrant (through the Qdrant client), you can quickly populate collections with high-quality embeddings, shortening the time-to-deployment for your RAG applications.

3. Advanced Multimodal Search

If your application requires searching across documents containing both text and images (e.g., technical manuals or product catalogs), FastEmbed provides the necessary tools. Leverage ColPali models to generate distinct, yet related, embeddings for visual and textual data, enabling sophisticated queries that span both modalities to deliver highly relevant results.

Why Choose FastEmbed?

FastEmbed is maintained by Qdrant and built specifically to overcome the common bottlenecks faced by developers building production-ready vector search applications.

Unmatched Efficiency (Lightweight Footprint): FastEmbed avoids the heavy dependency load of typical deep learning frameworks. By using the ONNX Runtime and requiring few external packages, it drastically reduces library size, making it highly portable and ideal for resource-constrained environments.

Superior Speed and Throughput: Leveraging ONNX and optimized data parallelism, FastEmbed is designed for speed. This focus on performance translates directly into faster query response times and higher throughput for batch processing, saving valuable computational time.

Proven Accuracy and Model Diversity: FastEmbed supports an ever-expanding set of high-quality models, including multilingual options. The project reports that its default models are more accurate than established commercial baselines such as OpenAI Ada-002, so your retrieval systems start from precise semantic representations.

Production-Ready Integration: With optional support for GPU acceleration (via fastembed-gpu) and supported integrations with Qdrant, LangChain, and LlamaIndex, FastEmbed is ready to be deployed as a core component of enterprise-grade AI infrastructure.

Conclusion

FastEmbed provides the critical balance of speed, size, and accuracy required for modern vector search and RAG applications. By optimizing performance through the ONNX Runtime and offering robust support for diverse models and modalities, it simplifies the embedding pipeline and ensures reliable, high-quality results.

FastEmbed Alternatives

- Embedchain: The open-source RAG framework to simplify building & deploying personalized LLM apps. Go from prototype to production with ease & control.

- Snowflake Arctic embed: High-performance, efficient open-source text embeddings for RAG & semantic search. Improve AI accuracy & cut costs.

- EmbeddingGemma: On-device, multilingual text embeddings for privacy-first AI apps. Get best-in-class performance & efficiency, even offline.

- Superlinked is a Python framework for AI Engineers building high-performance search & recommendation applications that combine structured and unstructured data.

- Infinity is a cutting-edge AI-native database that provides a wide range of search capabilities for rich data types such as dense vector, sparse vector, tensor, full-text, and structured data. It provides robust support for various LLM applications, including search, recommenders, question-answering, conversational AI, copilot, content generation, and many more RAG (Retrieval-augmented Generation) applications.