What is Ludwig?

Building, training, and deploying custom AI models such as Large Language Models (LLMs) or specialized neural networks often means navigating complex codebases and managing intricate infrastructure. Ludwig offers a different path. It’s a declarative, low-code framework: you describe your model in a configuration file, and Ludwig handles the training and deployment machinery, so you can focus on modeling rather than engineering. Whether you're fine-tuning an existing LLM or designing a multi-modal architecture from scratch, Ludwig provides the building blocks for sophisticated models with little custom code.

Key Features

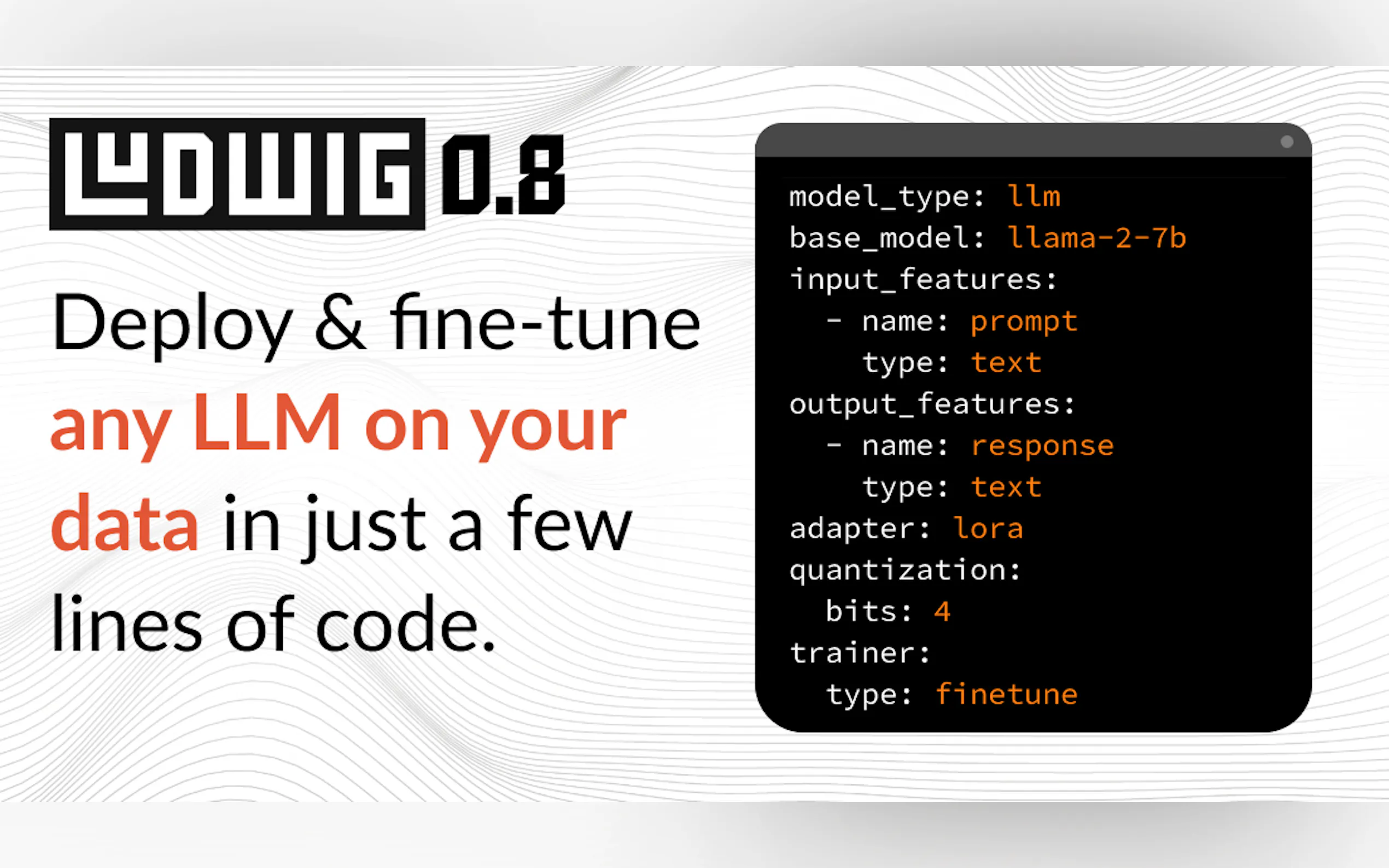

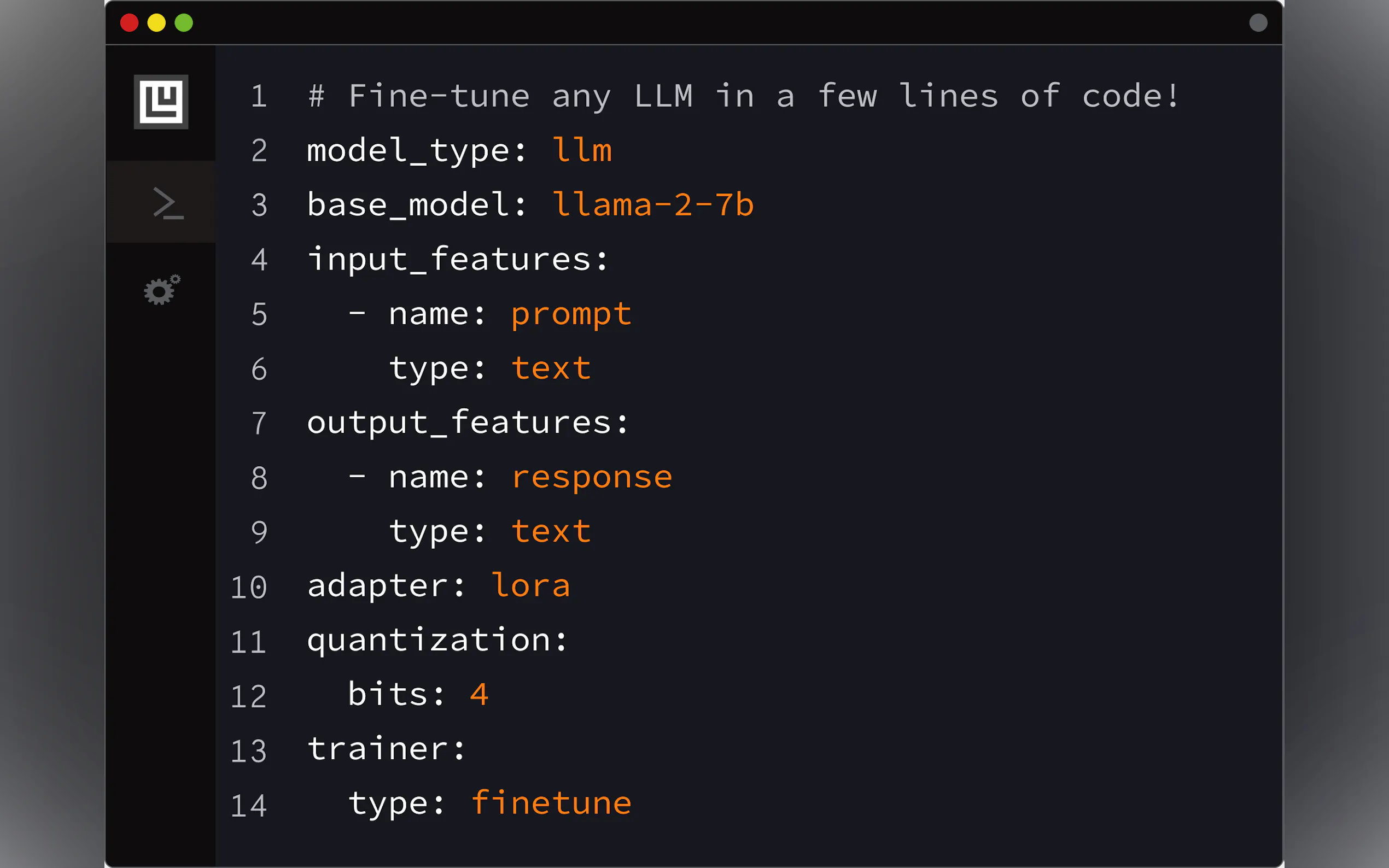

🛠️ Build Custom Models via Configuration: Define your model architecture, features, and training process in a simple, declarative YAML file. Ludwig builds the corresponding model and training pipeline from that file and supports multi-task and multi-modal learning. Comprehensive validation checks catch configuration errors before training starts.
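To make the idea concrete, here is a minimal sketch of a multi-modal configuration written as a Python dict (Ludwig's Python API also accepts a dict in place of a YAML file). The column names are hypothetical, and `check_config` is a toy stand-in for the kind of early validation described above, not Ludwig's actual validator.

```python
# Feature types restricted to the ones used in this article; Ludwig supports more.
KNOWN_TYPES = {"text", "set", "category", "number", "binary"}

# Hypothetical column names for a multi-modal binary classifier.
config = {
    "input_features": [
        {"name": "review_text", "type": "text", "encoder": {"type": "embed"}},
        {"name": "product_category", "type": "set"},
        {"name": "content_rating", "type": "category"},
        {"name": "runtime", "type": "number"},
        {"name": "top_critic", "type": "binary"},
    ],
    "output_features": [
        {"name": "recommended", "type": "binary"},
    ],
}


def check_config(cfg):
    """Toy pre-flight check (hypothetical, NOT Ludwig's validator).

    Returns a list of human-readable problems; an empty list means the
    config passed these basic structural checks.
    """
    problems = []
    for section in ("input_features", "output_features"):
        features = cfg.get(section)
        if not features:
            problems.append(f"missing or empty '{section}'")
            continue
        for i, feat in enumerate(features):
            if "name" not in feat:
                problems.append(f"{section}[{i}] has no 'name'")
            if feat.get("type") not in KNOWN_TYPES:
                problems.append(f"{section}[{i}] has unknown type {feat.get('type')!r}")
    return problems


print(check_config(config))  # prints [] when no problems are found

# With Ludwig installed, the same dict can be handed to the Python API
# (not executed here; "reviews.csv" is a hypothetical dataset):
#   from ludwig.api import LudwigModel
#   model = LudwigModel(config=config)
#   model.train(dataset="reviews.csv")
```

Catching a misspelled feature type at this stage costs nothing, whereas discovering it after data preprocessing has started can waste a long run.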

⚡ Optimized for Scale & Efficiency: Train large models efficiently. Ludwig includes automatic batch size tuning, distributed training (DDP, DeepSpeed), Parameter-Efficient Fine-Tuning (PEFT) methods such as LoRA and QLoRA (LoRA on a 4-bit quantized base model), paged optimizers, and support for larger-than-memory datasets through its Ray integration.
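Because the scaling options are ordinary config keys, they can be layered onto an existing model definition. The sketch below uses a hypothetical `deep_merge` helper (not part of Ludwig) and two settings that correspond to features listed above: `trainer.batch_size: auto` for automatic batch size tuning and `backend.type: ray` for Ray-based distributed execution. Available options vary between Ludwig versions, so treat these keys as assumptions to check against the configuration reference.

```python
import copy


def deep_merge(base, overrides):
    """Return a new dict with `overrides` layered recursively over `base` (hypothetical helper)."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are dicts: merge them key by key instead of replacing.
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


base_config = {
    "input_features": [{"name": "review_text", "type": "text"}],
    "output_features": [{"name": "recommended", "type": "binary"}],
    "trainer": {"epochs": 10},
}

# Settings tied to the efficiency features listed above (assumed key names).
scale_overrides = {
    "trainer": {"batch_size": "auto"},  # automatic batch size tuning
    "backend": {"type": "ray"},         # Ray backend for distributed, larger-than-memory data
}

scaled_config = deep_merge(base_config, scale_overrides)
print(scaled_config["trainer"])  # {'epochs': 10, 'batch_size': 'auto'}
```

Merging rather than editing in place keeps a single base config for local experiments while the scaled variant is derived on demand.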

📐 Retain Expert-Level Control: Low-code doesn't mean low-control. You can specify everything down to activation functions. Ludwig integrates hyperparameter optimization (HPO) tools, provides explainability methods (feature importance, counterfactuals), and generates rich visualizations for evaluating model metrics.
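Hyperparameter search is declared in the same file through a `hyperopt` section. The sketch below writes one as a Python dict (the parameter ranges, sample count, and column names are assumptions) and adds two hypothetical helpers, `draw_sample` and `apply_sample`, that mimic a single random-search trial: draw values for the dotted parameter names, then write them into a copy of the config. They only illustrate the idea; Ludwig delegates the real search to its hyperopt executor, which is built on Ray Tune.

```python
import copy
import math
import random

config = {
    "input_features": [{"name": "review_text", "type": "text"}],
    "output_features": [{"name": "recommended", "type": "binary"}],
    "hyperopt": {
        "goal": "minimize",
        "output_feature": "recommended",
        "metric": "loss",
        "parameters": {
            "trainer.learning_rate": {"space": "loguniform", "lower": 1e-4, "upper": 1e-1},
            "trainer.batch_size": {"space": "choice", "categories": [32, 64, 128]},
        },
        "executor": {"type": "ray", "num_samples": 8},
    },
}


def draw_sample(params, rng):
    """Draw one value per search-space entry (toy random search: loguniform and choice only)."""
    sample = {}
    for name, spec in params.items():
        if spec["space"] == "loguniform":
            low, high = math.log(spec["lower"]), math.log(spec["upper"])
            sample[name] = math.exp(rng.uniform(low, high))
        elif spec["space"] == "choice":
            sample[name] = rng.choice(spec["categories"])
        else:
            raise ValueError(f"unsupported space: {spec['space']}")
    return sample


def apply_sample(cfg, sample):
    """Return a trial config with dotted keys such as 'trainer.learning_rate' filled in."""
    trial = copy.deepcopy(cfg)
    trial.pop("hyperopt", None)  # a single trial does not need the search definition
    for dotted, value in sample.items():
        *parents, leaf = dotted.split(".")
        node = trial
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value
    return trial


rng = random.Random(0)
trial = apply_sample(config, draw_sample(config["hyperopt"]["parameters"], rng))
print(trial["trainer"])
```

From the command line, a config like this would typically be run with `ludwig hyperopt --config config.yaml --dataset reviews.csv`, where both file names are hypothetical.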

🧱 Experiment Rapidly with Modularity: Think of Ludwig as a set of deep learning building blocks. Its modular design lets you swap encoders, combiners, and decoders, or try different tasks, features, and modalities, by changing a few lines of your configuration file, which makes rapid iteration easy.
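As an illustration of that workflow, the sketch below derives experiment variants from one base config by swapping the text encoder or the combiner. `variant` is a hypothetical helper; `parallel_cnn`, `rnn`, `concat`, and `transformer` are names of Ludwig encoder and combiner types, but the available options depend on your Ludwig version.

```python
import copy

base = {
    "input_features": [
        {"name": "review_text", "type": "text", "encoder": {"type": "parallel_cnn"}},
        {"name": "runtime", "type": "number"},
    ],
    "output_features": [{"name": "recommended", "type": "binary"}],
    "combiner": {"type": "concat"},
}


def variant(cfg, feature=None, encoder=None, combiner=None):
    """Copy cfg, optionally swapping one input feature's encoder and/or the combiner."""
    new = copy.deepcopy(cfg)
    if feature is not None and encoder is not None:
        for feat in new["input_features"]:
            if feat["name"] == feature:
                feat["encoder"] = {"type": encoder}
    if combiner is not None:
        new["combiner"] = {"type": combiner}
    return new


experiments = {
    "cnn+concat": base,
    "rnn+concat": variant(base, feature="review_text", encoder="rnn"),
    "cnn+transformer": variant(base, combiner="transformer"),
}

for name, cfg in experiments.items():
    text_encoder = cfg["input_features"][0]["encoder"]["type"]
    print(f"{name}: text encoder={text_encoder}, combiner={cfg['combiner']['type']}")
```

Because every variant is plain data, the configs are easy to diff, version, and compare side by side.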

🚢 Engineered for Production: Move smoothly from experimentation to deployment. Ludwig offers prebuilt Docker containers and native support for Ray on Kubernetes, and it can export models to TorchScript or for serving with NVIDIA Triton Inference Server. You can even upload trained models to the Hugging Face Hub with a single command.
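For HTTP serving, the rapid-prototyping use case below mentions `ludwig serve`, which exposes a trained model as a REST API. The client sketch below assumes the server runs locally on port 8000 and accepts one form field per input feature at a `/predict` endpoint; verify the host, port, and field names against your deployment and `ludwig serve --help`. `build_predict_request` and `predict` are hypothetical helper names, and only the standard library is used.

```python
import json
import urllib.parse
import urllib.request


def build_predict_request(features, host="localhost", port=8000):
    """Build (but do not send) a form-encoded POST to an assumed /predict endpoint."""
    body = urllib.parse.urlencode(features).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}/predict",
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def predict(features, host="localhost", port=8000, timeout=10.0):
    """Send the request to a running server and decode its JSON response."""
    request = build_predict_request(features, host=host, port=port)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Build a request for a model with hypothetical `review_text` and `runtime` inputs.
req = build_predict_request({"review_text": "A sharp, funny film.", "runtime": 104})
print(req.get_method(), req.full_url)  # POST http://localhost:8000/predict
# predict({...}) would actually send it; that call needs a live `ludwig serve` process.
```

Separating request construction from sending keeps the client testable without a running server.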

Use Cases: Putting Ludwig into Practice

Fine-tuning an LLM for Specific Tasks: Imagine you need a chatbot tailored to your company's internal documentation. With Ludwig, you can take a pre-trained model such as Llama-2 or Mistral-7B, write a short YAML config that describes your data (instruction/response pairs) and enables 4-bit quantization (QLoRA) for efficiency, and launch fine-tuning with a single command (`ludwig train`). This lets you create a specialized, instruction-following LLM on modest, widely available GPUs (such as a single NVIDIA T4) without writing extensive training code.

Building a Multi-Modal Classifier: Suppose you want to predict whether a product will be recommended, based on text reviews, product category (set), content rating (category), runtime (number), and critic status (binary). In Ludwig, you list each of these inputs in your YAML configuration with its type (`text`, `set`, `category`, `number`, `binary`), choose suitable encoders (e.g., `embed` for text), and define the binary output feature (`recommended`). Ludwig then builds a network architecture that handles these mixed data types and trains the classifier.

Rapid Prototyping and Deployment: You have a dataset and need to build and deploy a classification model quickly. With Ludwig's AutoML feature (`ludwig.automl.auto_train`), you provide the dataset, the target variable, and a time limit, and Ludwig searches over model configurations and hyperparameters to find a high-performing model. Once it is trained, you can launch a REST API for the model with `ludwig serve`, making it immediately available for predictions.
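The fine-tuning use case comes down to a short config. The sketch below expresses it as a Python dict in the style of Ludwig's LLM examples; the base model ID, the `instruction`/`response` column names, the prompt template, and the trainer values are illustrative assumptions. The helper arithmetic suggests why 4-bit quantization matters on a 16 GB card such as the T4: it estimates the memory for the base model's weights alone, ignoring activations, adapter weights, optimizer state, and framework overhead.

```python
# Illustrative QLoRA-style fine-tuning config; values are assumptions, not tuned settings.
llm_config = {
    "model_type": "llm",
    "base_model": "meta-llama/Llama-2-7b-hf",  # Hugging Face model ID (access is gated)
    "quantization": {"bits": 4},               # load the frozen base model in 4-bit
    "adapter": {"type": "lora"},               # train small low-rank adapter matrices
    "prompt": {
        "template": "### Instruction:\n{instruction}\n\n### Response:\n",
    },
    "input_features": [{"name": "instruction", "type": "text"}],
    "output_features": [{"name": "response", "type": "text"}],
    "trainer": {
        "type": "finetune",
        "learning_rate": 0.0001,
        "batch_size": 1,
        "gradient_accumulation_steps": 16,
        "epochs": 3,
    },
}


def weight_memory_gb(n_params, bits):
    """Approximate size of the model weights alone, in decimal gigabytes."""
    return n_params * bits / 8 / 1e9


trainer = llm_config["trainer"]
# Gradients from 16 micro-batches of size 1 are accumulated before each update.
effective_batch = trainer["batch_size"] * trainer["gradient_accumulation_steps"]

n_params = 7e9  # rough parameter count of a "7B" model
print(f"effective batch size: {effective_batch}")                # 16
print(f"fp16 weights:  {weight_memory_gb(n_params, 16):.1f} GB")  # 14.0 GB
print(f"4-bit weights: {weight_memory_gb(n_params, 4):.1f} GB")   # 3.5 GB
```

Saved as YAML (for example with PyYAML's `yaml.safe_dump`), a config like this would be launched with something like `ludwig train --config model.yaml --dataset instructions.csv`, where the dataset path is hypothetical.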

Conclusion

Ludwig significantly lowers the barrier to entry for building sophisticated, custom AI models. By replacing complex boilerplate code with a declarative configuration system, it accelerates experimentation and development. Its built-in optimizations ensure you can train efficiently at scale, while its production-ready features simplify deployment. If you're looking to fine-tune LLMs, build multi-modal systems, or simply iterate faster on your deep learning projects, Ludwig provides a powerful and accessible framework.


Ludwig Alternatives


- LazyLLM: A low-code framework for multi-agent LLM apps. Build, iterate on, and deploy complex AI solutions quickly, from prototype to production, focusing on algorithms rather than engineering.

- LM Studio: An easy-to-use desktop app for experimenting with local and open-source Large Language Models (LLMs). The cross-platform app lets you download and run any ggml-compatible model from Hugging Face, provides a simple yet powerful model configuration and inference UI, and uses your GPU when possible.

- LLaMA Factory: An open-source, low-code framework for fine-tuning large models. It integrates widely used fine-tuning techniques and supports zero-code fine-tuning through a web UI.

- LlamaFarm: Build and deploy production-ready AI apps quickly. Define your AI with configuration as code for full control and model portability.

- Transformer Lab: An open-source platform for building, tuning, and running LLMs locally without coding. Download hundreds of models, fine-tune across hardware, chat, evaluate, and more.