What is Qwen2.5-Turbo?

Qwen2.5-Turbo is a large language model developed by Alibaba Cloud. Its context window of 1 million tokens is equivalent to roughly 10 novels, 150 hours of transcribed speech, or 30,000 lines of code. Qwen2.5-Turbo is built for processing ultra-long texts while maintaining strong performance on short text tasks. It outperforms GPT-4 on long text benchmarks, runs faster than its predecessor, and costs less per token than GPT-4o-mini.

Key Features:

📖 Extended Context Window: Handles up to 1 million tokens, enabling deeper comprehension and analysis of extensive texts like novels, code repositories, and research papers.

🚄 Faster Inference Speed: Sparse attention mechanisms significantly reduce processing time, making it 4.3x faster than its predecessor when handling 1 million tokens.

💰 Lower Cost: Priced at ¥0.3 per 1 million tokens, so the same budget covers about 3.6x as much content as it would with GPT-4o-mini.

🎯 Strong Performance Across Tasks: Handles both long and short text tasks well, surpassing other open-source models with similar context lengths and performing comparably to GPT-4o-mini and Qwen2.5-14B-Instruct on shorter texts.

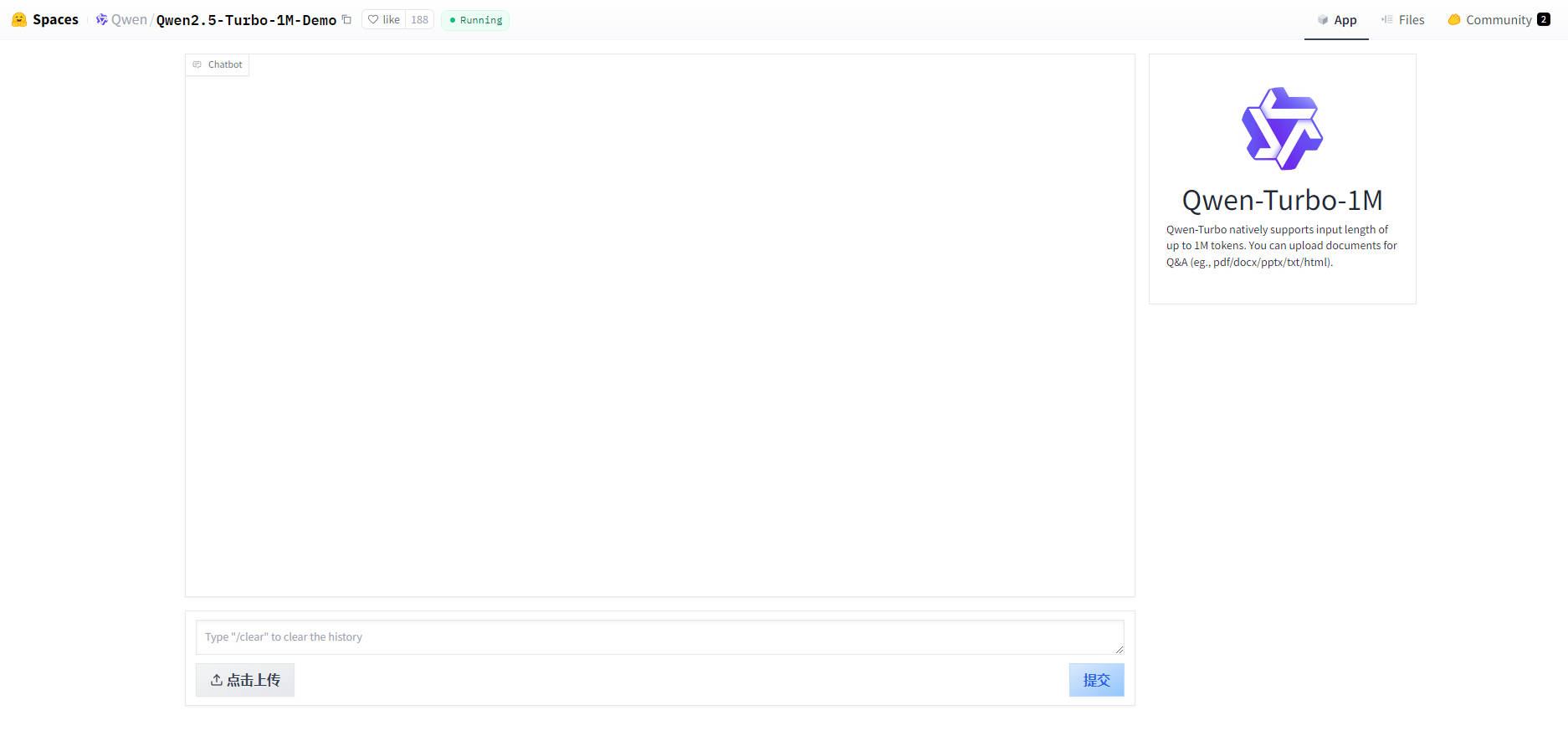

🌐 Accessible Through Multiple Platforms: Available via the Alibaba Cloud Model Studio API, a HuggingFace Demo, and a ModelScope Demo for integration and experimentation.
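The pricing figures in the feature list can be turned into a quick budget estimate. The sketch below is only arithmetic on the two numbers quoted above (¥0.3 per 1 million tokens and the 3.6x ratio). It assumes a flat per-token price with no tiering or minimum charge, and the function names are illustrative, not part of any Alibaba Cloud SDK.

```python
# Figures quoted in the feature list above.
PRICE_YUAN_PER_MILLION = 0.3   # ¥ per 1 million tokens
CONTENT_RATIO = 3.6            # content per unit of budget, relative to GPT-4o-mini

def cost_yuan(tokens: int, price_per_million: float = PRICE_YUAN_PER_MILLION) -> float:
    """Estimated cost in yuan for a given token count (assumes flat pricing)."""
    return tokens / 1_000_000 * price_per_million

def tokens_for_budget(budget_yuan: float, price_per_million: float = PRICE_YUAN_PER_MILLION) -> int:
    """Whole number of tokens a budget covers (assumes flat pricing)."""
    return int(budget_yuan * 1_000_000 / price_per_million)

# Comparison price implied by the 3.6x claim. This is derived from the ratio,
# not a quoted GPT-4o-mini price.
IMPLIED_COMPARISON_PRICE = PRICE_YUAN_PER_MILLION * CONTENT_RATIO

if __name__ == "__main__":
    print(f"One full 1M-token context: ¥{cost_yuan(1_000_000):.2f}")
    print(f"Tokens covered by ¥3: {tokens_for_budget(3.0):,}")
    print(f"Implied comparison price: ¥{IMPLIED_COMPARISON_PRICE:.2f} per 1M tokens")
```

Actual billing may distinguish input and output tokens or change over time, so treat the result as a rough estimate and check the current Model Studio price list.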

Use Cases:

Summarize complex research papers across various disciplines.

Analyze and extract insights from lengthy legal documents or contracts.

Build advanced conversational AI that can maintain context over extended interactions.

Conclusion:

Qwen2.5-Turbo opens up new possibilities for AI applications requiring extensive context understanding. Its superior performance, efficiency, and affordability make it an ideal choice for researchers, developers, and businesses looking to leverage the power of large language models for tasks involving large volumes of text.

FAQs:

What is a token in the context of large language models?

A token can be a word, part of a word, or a punctuation mark. It's the basic unit of text processed by the model.

How does Qwen2.5-Turbo handle text longer than 1 million tokens?

Currently, the model is limited to 1 million tokens. For longer texts, users might need to break them down into smaller chunks and process them separately.
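A minimal sketch of the chunking approach follows. It assumes the text has already been split into tokens, and it uses whitespace-separated words as a crude stand-in, which will not match the model's real tokenizer counts. The function name `chunk_tokens` and the `overlap` parameter are illustrative choices, not part of any Qwen API. The overlap repeats a few tokens at each boundary so that context is not cut off abruptly between chunks.

```python
def chunk_tokens(tokens: list[str], max_tokens: int, overlap: int = 0) -> list[list[str]]:
    """Split a token list into chunks of at most max_tokens,
    repeating `overlap` tokens between consecutive chunks."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")

    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_tokens])
        # Stop once this chunk reaches the end of the input.
        if start + max_tokens >= len(tokens):
            break
    return chunks

if __name__ == "__main__":
    # Assumption: whitespace words approximate tokens. Real token counts
    # are usually higher, so leave headroom below the 1 million limit.
    words = "a very long document would go here".split()
    for chunk in chunk_tokens(words, max_tokens=4, overlap=1):
        print(" ".join(chunk))
```

Each chunk can then be sent to the model separately, with the partial results combined afterwards, for example by summarizing the per-chunk summaries.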

Is Qwen2.5-Turbo available for commercial use?

Refer to Alibaba Cloud's Model Studio documentation for details about licensing and terms of service for commercial use.

Qwen2.5-Turbo Alternatives

Qwen2 is the large language model series developed by the Qwen team at Alibaba Cloud.

Qwen2.5 series language models offer enhanced capabilities with larger datasets, more knowledge, better coding and math skills, and closer alignment to human preferences. Open-source and available via API.

CodeQwen1.5 is a code expert model from the Qwen1.5 open-source family. With 7B parameters and GQA architecture, it supports 92 programming languages and handles 64K context inputs.

Qwen2-VL is the multimodal large language model series developed by the Qwen team at Alibaba Cloud.

Qwen-MT delivers fast, customizable AI translation for 92 languages. Achieve precise, context-aware results with MoE architecture & API.