What is ZeroBench?

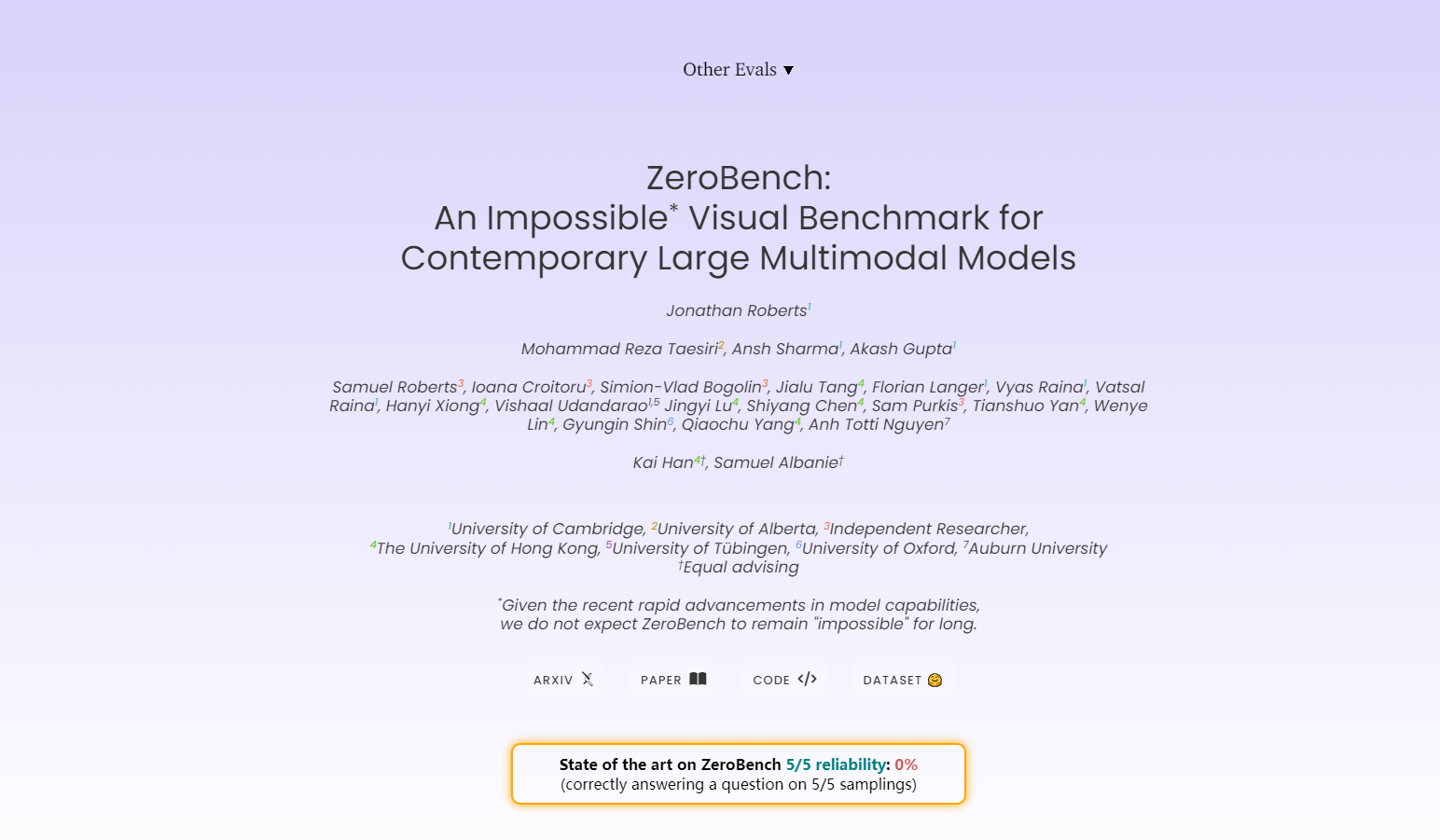

Performance on existing visual benchmarks tends to saturate quickly as multimodal models improve, leaving little headroom to measure further progress. ZeroBench is a benchmark designed to challenge even the most advanced models. With 100 curated questions and 334 subquestions, it evaluates visual reasoning, interpretation, and computational accuracy.

Key Features:

🔍 Challenging Questions: ZeroBench’s main questions are designed to test the limits of multimodal models, so that memorization or simple pattern recognition is not enough to answer them.

📊 Subquestions for Granular Insights: Each main question is broken down into subquestions, allowing for detailed analysis of where models succeed or fail.

🌐 Diverse Scenarios: From chessboard analysis to maze navigation, ZeroBench covers a wide range of real-world and abstract visual reasoning tasks.

⚡ Lightweight Design: With only 100 main questions, ZeroBench is inexpensive to run, keeping evaluation cost low without giving up difficulty.

✅ Human-Verified Quality: Every question and subquestion undergoes rigorous review to ensure accuracy and relevance.
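To make the subquestion idea concrete, here is a minimal sketch of how per-question and overall subquestion accuracy could be tallied. It is not ZeroBench's evaluation code: the record fields (`question_id`, `expected`, `predicted`), the sample data, and the exact-match scoring after light normalization are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical records, one per subquestion. The field names and values are
# illustrative only and do not reflect ZeroBench's actual schema or data.
records = [
    {"question_id": "q1", "expected": "4", "predicted": "4"},
    {"question_id": "q1", "expected": "Knight", "predicted": " knight "},
    {"question_id": "q1", "expected": "12", "predicted": "13"},
    {"question_id": "q2", "expected": "left", "predicted": "left"},
    {"question_id": "q2", "expected": "7", "predicted": "9"},
]


def normalize(answer: str) -> str:
    """Assumed normalization: trim whitespace and ignore case."""
    return answer.strip().lower()


def is_correct(record: dict) -> bool:
    """Exact match after normalization (an assumed scoring rule)."""
    return normalize(record["predicted"]) == normalize(record["expected"])


def per_question_accuracy(records: list[dict]) -> dict[str, float]:
    """Fraction of subquestions answered correctly, grouped by main question."""
    tallies = defaultdict(lambda: [0, 0])  # question_id -> [correct, total]
    for record in records:
        tally = tallies[record["question_id"]]
        tally[0] += int(is_correct(record))
        tally[1] += 1
    return {qid: correct / total for qid, (correct, total) in tallies.items()}


def overall_accuracy(records: list[dict]) -> float:
    """Fraction of all subquestions answered correctly."""
    return sum(is_correct(r) for r in records) / len(records)


report = per_question_accuracy(records)
overall = overall_accuracy(records)
for qid, accuracy in report.items():
    print(f"{qid}: {accuracy:.2f}")
print(f"overall: {overall:.2f}")
```

Grouping by main question shows where a model loses ground on a multi-step problem, which a single aggregate score would hide.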

Use Cases:

Model Development: Researchers can use ZeroBench to identify weaknesses in their multimodal models, guiding improvements in visual reasoning and computational accuracy.

Benchmarking: Compare the performance of different models on a truly challenging benchmark, ensuring fair and meaningful evaluation.

Training Data: ZeroBench’s subquestions can serve as targeted training data to enhance a model’s ability to break down complex visual tasks into manageable steps.

Conclusion:

ZeroBench is a tool for measuring how far multimodal models still have to go. By focusing on challenging, diverse, and human-verified questions, it gives a clearer picture of a model’s visual reasoning capabilities than benchmarks on which scores have already saturated. Researchers, developers, and enthusiasts can use it to see where current models fall short.

FAQ:

Q: Who is ZeroBench designed for?

A: ZeroBench is ideal for researchers and developers working on multimodal models who want to rigorously test and improve their systems.

Q: How can I contribute to ZeroBench?

A: You can help by red teaming the benchmark to identify errors or by submitting new questions that align with ZeroBench’s standards.

Q: Is ZeroBench open-source?

A: Yes, the dataset is available on Hugging Face, and evaluation code is provided on GitHub for integration into your workflows.

Q: Why are the main questions so difficult?

A: The main questions are designed to push models beyond their current limits, ensuring the benchmark remains relevant as models evolve.

Q: How does ZeroBench handle data contamination?

A: Answers to example questions are intentionally excluded to reduce the risk of models memorizing solutions, which helps keep evaluation fair.