What is Quadric.io?

Quadric's Chimera GPNPU (general-purpose neural processing unit) is a high-performance processor designed for on-device artificial intelligence computing. It simplifies SoC hardware design and software programming by running all types of machine learning networks, including classic backbones, vision transformers, and large language models. Because it handles matrix and vector operations as well as scalar code in a single execution pipeline, the Chimera GPNPU shortens time-to-market and makes new ML models easier to port.

Key Features:

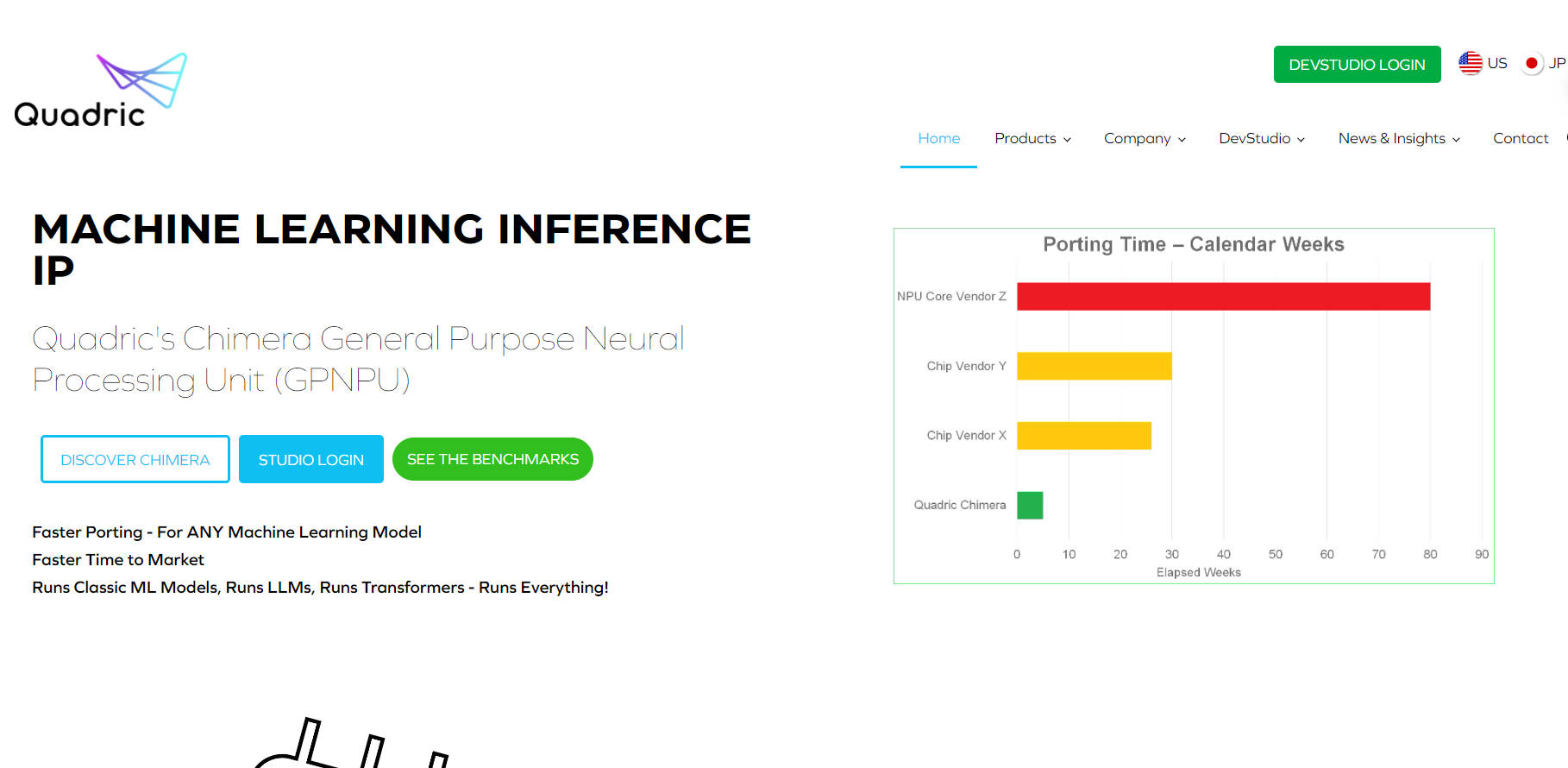

1. Faster Porting: The Quadric Chimera GPNPU lets developers port any machine learning model without artificially partitioning application code across different processors.

2. Runs Everything: This licensable processor runs all kinds of models, from classic backbones to vision transformers and large language models (LLMs).

3. Scalability: The Chimera GPNPU scales from 1 to 16 TOPS in a single core, and multicore configurations scale beyond 100 TOPS.

Use Cases:

1. SoC Design Simplification: By offering one architecture for ML inference plus pre- and post-processing, the Chimera GPNPU simplifies system-on-chip (SoC) hardware design and software programming.

2. Fast Time-to-Market: The ability to quickly port new ML models allows developers to bring their products to market faster.

3. Versatile Model Support: From traditional backbone networks used in computer vision tasks to advanced transformer-based architectures for natural language processing, the Chimera GPNPU supports a wide range of machine learning applications.

Conclusion:

The Quadric Chimera GPNPU provides high-performance machine learning inference capabilities while streamlining SoC design and development processes. Its ability to run various types of ML models without artificial code partitioning makes it an attractive choice for developers looking for efficiency and flexibility in their AI-powered applications. With its scalability options ranging from single-core to multicore configurations, the Chimera GPNPU offers a powerful solution for accelerating AI computations on edge devices.

Quadric.io Alternatives

1. Phi-3 Mini: A lightweight, state-of-the-art open model built on the datasets used for Phi-2 (synthetic data and filtered websites), with a focus on very high-quality, reasoning-dense data.

2. Cerebras: A platform for fast, effortless AI training and inference.

3. MiniCPM3-4B: The third generation of the MiniCPM series. Its overall performance surpasses Phi-3.5-mini-Instruct and GPT-3.5-Turbo-0125 and is comparable with many recent 7B to 9B models.