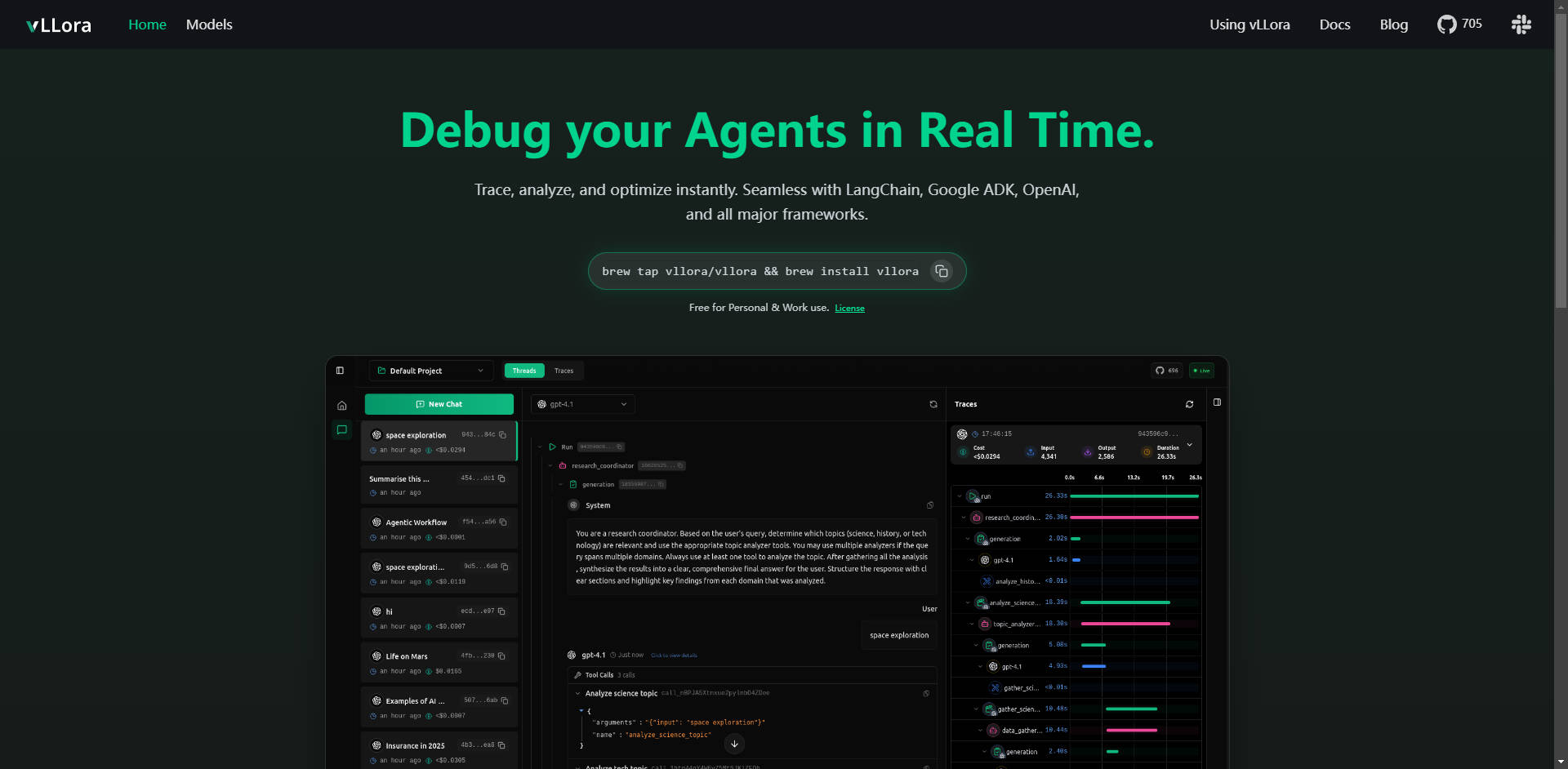

What is vLLora?

vLLora is a lightweight debugging and observability platform designed specifically for complex AI agent workflows. It gives you visibility into multi-step LLM calls, tool interactions, and agent logic. Because it integrates with major frameworks through an OpenAI-compatible endpoint, developers can trace, analyze, and optimize agent performance for reliability and efficiency.

Key Features

vLLora provides the deep, real-time insights you need to move your AI agents from development to production with confidence.

🔍 Real-time Live Tracing

Monitor your AI agent interactions as they execute, with live observability into the entire workflow. You see what your agents are doing moment by moment, including every model call, tool interaction, and decision, so you can pinpoint errors or unexpected behavior as they occur.

☁️ Universal Framework Compatibility

vLLora works out of the box with your existing setup, integrating seamlessly with industry-leading frameworks like LangChain, Google ADK, and OpenAI Agents SDK. This broad compatibility ensures you can implement deep debugging without extensive refactoring of your current codebase.

📈 Deep Observability Metrics

Go beyond simple logging. vLLora automatically collects crucial insights on performance, capturing detailed metrics on latency, operational cost, and raw model output for every step of the agent’s execution. This data is vital for identifying bottlenecks and optimizing resource allocation.

⚙️ OpenAI-Compatible Endpoints for Zero-Friction Setup

vLLora operates via an OpenAI-compatible chat completions API. When you route your agent’s calls through vLLora’s local server (http://localhost:9090), tracing and debugging information is collected automatically, so integration is as simple as configuring a new endpoint URL.
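As a rough illustration of what "configuring a new endpoint URL" can look like, the sketch below builds an OpenAI-style chat completions request aimed at the local server, using only the Python standard library. The `/v1/chat/completions` path is an assumption based on the usual OpenAI layout, and the helper names and placeholder API key are hypothetical; check the vLLora documentation for the exact route and authentication.

```python
import json
import urllib.request

# Assumption: the local server exposes the usual OpenAI path layout.
# Only the host and port (http://localhost:9090) come from the text above.
VLLORA_BASE_URL = "http://localhost:9090/v1"


def build_chat_request(model, messages, api_key="placeholder-key",
                       base_url=VLLORA_BASE_URL):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the decoded JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example (requires the local server to be running; model name is a placeholder):
#   req = build_chat_request("your-model-name",
#                            [{"role": "user", "content": "Hello"}])
#   reply = send(req)
```

If your agent already uses an OpenAI-compatible client library, the equivalent change is usually just its base URL setting; the request body stays the same.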

🌐 Extensive Model Support and Benchmarking

Bring your own API keys and instantly gain access to over 300 different models. vLLora enables you to mix, match, and benchmark various models within a single agent workflow, allowing you to rapidly test configurations and select the most performant and cost-effective LLM for specific tasks.
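To make the idea of comparing models concrete, here is a minimal client-side sketch that runs the same prompt against several models and ranks them by wall-clock latency. It is not vLLora's own benchmarking feature: `benchmark_models` and the `call_model` callable are hypothetical names, and `call_model` stands in for whatever function sends a request through your endpoint.

```python
import time


def benchmark_models(models, prompt, call_model):
    """Time one call per model and return results sorted fastest first.

    call_model(model, prompt) is a caller-supplied function (hypothetical)
    that sends the prompt to the given model and returns the reply text.
    """
    results = []
    for model in models:
        start = time.perf_counter()
        reply = call_model(model, prompt)
        elapsed = time.perf_counter() - start
        results.append({"model": model, "latency_s": elapsed, "reply": reply})
    return sorted(results, key=lambda r: r["latency_s"])
```

A single call per model is a noisy measurement; for a real comparison you would likely repeat each call several times and compare medians alongside output quality and cost.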

Use Cases

vLLora is designed to enhance development velocity and operational reliability across various complex agent types:

1. Optimizing Coding and Automation Agents

When developing sophisticated coding agents (like Kilocode), the sequence of model calls, file manipulation, and external tool use can become opaque. With vLLora, you can track the exact chain of thought and execution steps, ensuring the agent correctly interprets instructions and uses its tools efficiently, dramatically reducing debugging time for complex logic errors.

2. Debugging Live Voice and Conversational Agents

For agents built on real-time platforms like LiveKit, latency is paramount. vLLora allows you to see the real-time delay introduced by each model inference and tool lookup. This enables you to isolate high-latency steps and fine-tune model choices or tool configurations to deliver a smoother, near-instantaneous user experience.

3. Cost and Performance Auditing

In production environments, agent costs can escalate quickly. By integrating vLLora, you gain visibility into the token consumption and associated cost of every single interaction. This allows teams to enforce budget constraints, identify models that are unnecessarily expensive for low-stakes tasks, and optimize for long-term operational efficiency.
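The arithmetic behind this kind of audit is simple, and a small sketch may help. It assumes each recorded call carries an OpenAI-style `usage` object with `prompt_tokens` and `completion_tokens`; the model name, the prices, and the function names are all hypothetical, and vLLora's own cost reporting may be structured differently.

```python
# Hypothetical prices in USD per one million tokens.
# Replace with your provider's real rates.
PRICES_PER_MILLION = {
    "example-small": {"prompt": 2.0, "completion": 4.0},
}


def estimate_cost(model, usage, prices=PRICES_PER_MILLION):
    """Estimate the USD cost of one call from its token usage."""
    rate = prices[model]
    return (usage["prompt_tokens"] * rate["prompt"]
            + usage["completion_tokens"] * rate["completion"]) / 1_000_000


def check_budget(calls, budget_usd, prices=PRICES_PER_MILLION):
    """Return (total_cost, exceeded) for a list of recorded calls."""
    total = sum(estimate_cost(c["model"], c["usage"], prices) for c in calls)
    return total, total > budget_usd
```

For example, a call with 1,000 prompt tokens and 500 completion tokens at the rates above costs (1,000 × 2.0 + 500 × 4.0) / 1,000,000 = 0.004 USD.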

Why Choose vLLora?

When evaluating tools for agent development, vLLora offers distinct advantages centered on ease of use, cost, and comprehensive support:

- Native Integration via API Standard: Unlike solutions requiring proprietary SDKs, vLLora uses the widely adopted OpenAI API standard. This means you can integrate deep observability into mature projects without altering core agent logic or undertaking painful migration efforts.

- Comprehensive Model Flexibility: Bringing your own keys and benchmarking 300+ models lets you experiment freely and optimize costs, so you’re not locked into a single provider’s ecosystem.

- Accessible Licensing: vLLora is available free for both Personal and Work use, removing financial barriers to adopting best-in-class debugging and tracing capabilities for teams of any size.

Conclusion

vLLora delivers the crucial observability layer required for building reliable, cost-effective, and high-performance AI agents. By providing real-time tracing and deep metrics through a simple, standardized interface, it transforms the agent development process from opaque troubleshooting to clear, instant optimization.

Explore how vLLora can streamline your development workflow and bring clarity to your agent projects today.