What is Model2Vec?

Model2Vec is a technique that turns any Sentence Transformer into a compact, static embedding model. By reducing model size by up to 15x and speeding up inference by up to 500x, Model2Vec makes it possible to have high-performing models that are both fast and small. Despite a small drop in accuracy relative to the original Sentence Transformer, Model2Vec outperforms other static embedding models such as GloVe and BPEmb.
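
A static model is fast because encoding a sentence requires no transformer forward pass: each token's vector is simply looked up in a fixed table and the vectors are pooled. The sketch below illustrates that idea under loudly stated assumptions: the three-token table `EMBEDDINGS`, the whitespace `tokenize` function, and `embed` are all hypothetical, and mean pooling is assumed as the pooling step. A real model would use a subword tokenizer and a much larger vocabulary.

```python
from typing import Dict, List

# Hypothetical toy table: each token maps to one fixed ("static") vector.
EMBEDDINGS: Dict[str, List[float]] = {
    "fast": [1.0, 0.0, 2.0],
    "small": [0.0, 1.0, 2.0],
    "models": [2.0, 2.0, 2.0],
}


def tokenize(text: str) -> List[str]:
    # Stand-in for a real subword tokenizer: lowercase whitespace splitting.
    return text.lower().split()


def embed(text: str) -> List[float]:
    """Embed text by averaging the static vectors of its known tokens."""
    vectors = [EMBEDDINGS[t] for t in tokenize(text) if t in EMBEDDINGS]
    if not vectors:
        # No known tokens: return a zero vector of the table's dimension.
        dim = len(next(iter(EMBEDDINGS.values())))
        return [0.0] * dim
    # Mean pooling: average each dimension across the looked-up vectors.
    return [sum(column) / len(vectors) for column in zip(*vectors)]


print(embed("Fast small models"))  # [1.0, 1.0, 2.0]
```

The only per-sentence work is a dictionary lookup per token plus an average, which is why this kind of model runs comfortably on a CPU.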

Key Features:

🛠️ Compact Models: Shrinks a Sentence Transformer by a factor of about 15, reducing a 120M-parameter model to just 7.5M parameters (30 MB on disk).

⚡ Lightning-Fast Inference: Runs inference up to 500x faster on CPUs, making large-scale tasks quicker and more eco-friendly.

🎛️ No Data Required: Distillation happens at the token level, eliminating the need for datasets during model creation.

🌍 Multilingual Support: Works with any language, allowing versatile use across different linguistic contexts.
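
Token-level distillation means the static table can be built from a vocabulary alone: each token is passed through the Sentence Transformer on its own and the resulting vector is stored, so no training corpus is involved. The following is a minimal sketch under stated assumptions: `toy_encoder` is a hypothetical hash-based stand-in for a real Sentence Transformer, and `distill_static_table` is an illustrative name, not part of the Model2Vec API. The project describes further steps (PCA dimensionality reduction and Zipf-based token weighting) that are deliberately left out here.

```python
import hashlib
from typing import Callable, Dict, List


def toy_encoder(text: str, dim: int = 4) -> List[float]:
    # Hypothetical stand-in for a Sentence Transformer's encoder:
    # derives a deterministic pseudo-embedding in [0, 1] from a hash of the text.
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:dim]]


def distill_static_table(
    vocabulary: List[str], encode: Callable[[str], List[float]]
) -> Dict[str, List[float]]:
    """Build a static lookup table by encoding every vocabulary token by itself.

    Only the vocabulary is required; no dataset of sentences is used.
    """
    return {token: encode(token) for token in vocabulary}


table = distill_static_table(["fast", "small", "models"], toy_encoder)
print(len(table), len(table["fast"]))  # 3 4
```

Swapping in a different vocabulary (for example, one for another language) only changes the list passed to `distill_static_table`.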

Use Cases:

Rapid Prototyping: Developers can quickly create small, efficient models for testing and deployment with only a small loss in performance.

Multilingual NLP Projects: Teams working on multilingual natural language processing tasks can easily switch between languages and vocabularies.

Resource-Constrained Environments: Organizations with limited computational resources can leverage fast and small models to run NLP applications smoothly on CPUs.

Conclusion:

Model2Vec offers an innovative solution for anyone who needs fast, compact, and high-performing alternatives to full Sentence Transformer models. By significantly reducing model size and increasing inference speed, it enables efficient deployment across a wide range of applications without a major drop in performance. Its multilingual capabilities and ease of use add to its appeal, making it a strong choice for NLP practitioners.

FAQs:

How much smaller can Model2Vec make my Sentence Transformer model?

Model2Vec reduces the model size by a factor of 15, turning a 120M-parameter model into a 7.5M-parameter one.

Does using Model2Vec require a dataset for model distillation?

No. Model2Vec distills models at the token level, so no dataset is needed for the distillation process.

Can I use Model2Vec for multilingual NLP tasks?

Yes, Model2Vec supports any language, making it ideal for multilingual NLP projects.
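
The size figures in the first answer can be checked with simple arithmetic, assuming (as an illustration) that each parameter is stored as a 32-bit, 4-byte float:

```latex
\frac{120 \times 10^{6}\ \text{parameters}}{7.5 \times 10^{6}\ \text{parameters}} = 16,
\qquad
7.5 \times 10^{6}\ \text{parameters} \times 4\ \tfrac{\text{bytes}}{\text{parameter}} = 3.0 \times 10^{7}\ \text{bytes} \approx 30\ \text{MB}.
```

A ratio of 16 is in line with the roughly 15x reduction quoted above, and the 4-byte assumption reproduces the stated 30 MB on disk.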

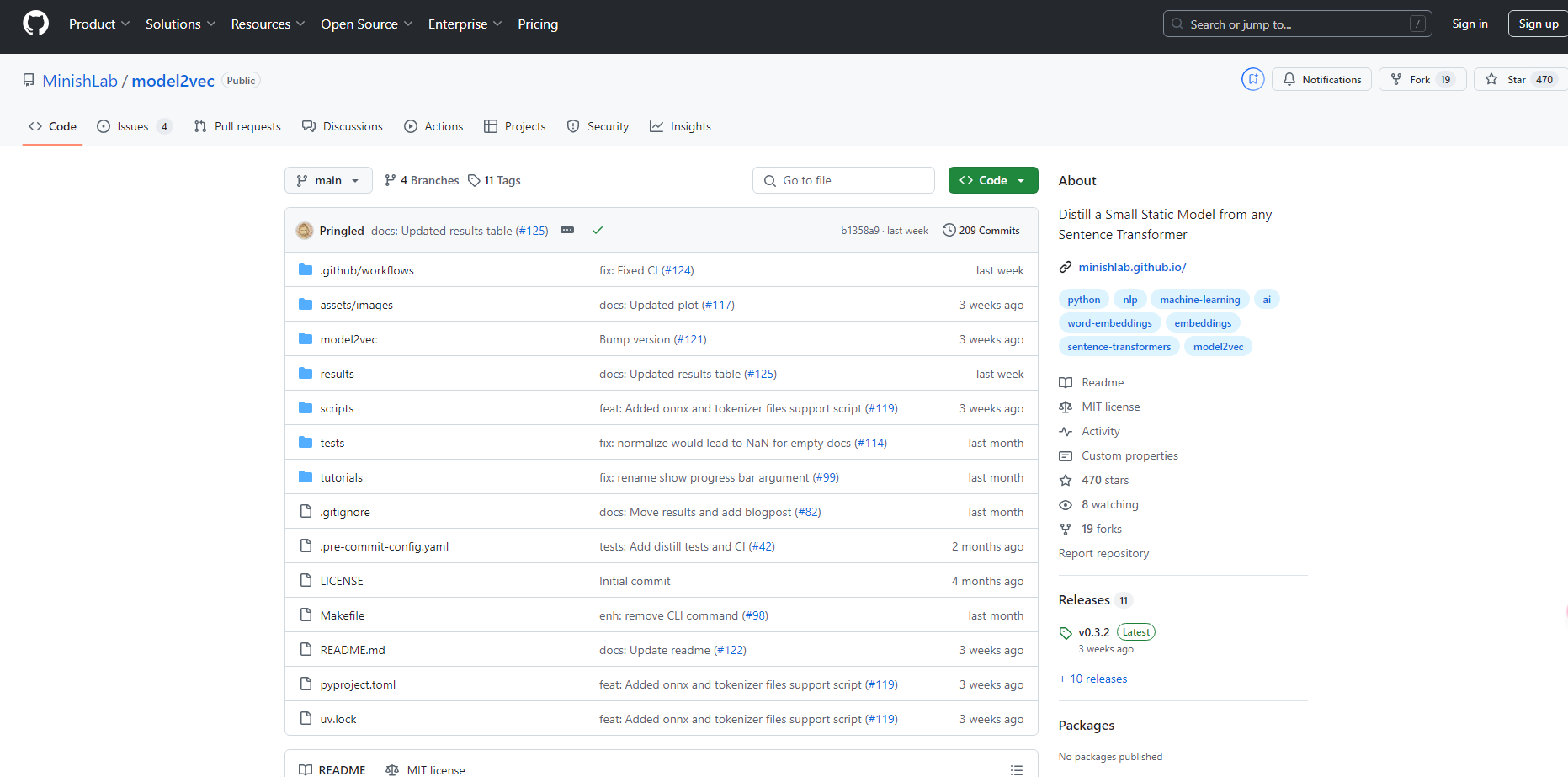

Model2Vec Alternatives

KTransformers, an open-source project by Tsinghua University's KVCache.AI team and QuJing Tech, optimizes large language model inference. It lowers hardware requirements, runs 671B-parameter models on a single GPU with 24 GB of VRAM, and boosts inference speed (up to 286 tokens/s for pre-processing and 14 tokens/s for generation). It is suitable for personal, enterprise, and academic use.

DeepSeek-VL2, a vision-language model by DeepSeek-AI, processes high-resolution images, delivers fast responses using Multi-head Latent Attention (MLA), and excels at diverse visual tasks such as visual question answering (VQA) and OCR. It is well suited to researchers, developers, and BI analysts.