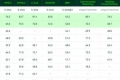

Best GLM-130B Alternatives in 2026

- GLM-4-9B is the open-source version of the latest generation of pre-trained models in the GLM-4 series launched by Zhipu AI.

- ChatGLM-6B is an open bilingual (Chinese and English) model with 6.2B parameters, currently optimized for Chinese QA and dialogue.

- PolyLM is an open-source polyglot LLM that supports 18 languages. It is aimed at developers, researchers, and businesses with multilingual needs.

- GLM-4.5V: Empower your AI with advanced vision. Generate web code from screenshots, automate GUIs, & analyze documents & video with deep reasoning.

- Unlock the power of YaLM 100B, a GPT-like neural network that generates and processes text with 100 billion parameters. Free for developers and researchers worldwide.

- OpenBioLLM-8B is an advanced open-source language model designed specifically for the biomedical domain.

- A high-throughput and memory-efficient inference and serving engine for LLMs

- A new development paradigm based on Model-as-a-Service (MaaS): unleashing AI with a universal model service.

- OpenBMB: Building a large-scale pre-trained language model center and tools to accelerate training, tuning, and inference of big models with over 10 billion parameters. Join our open-source community and bring big models to everyone.

- Discover StableLM, an open-source language model by Stability AI. Generate high-performing text and code on personal devices with small and efficient models. Transparent, accessible, and supportive AI technology for developers and researchers.

- Compresses the prompt and KV cache to speed up LLM inference and improve the model's perception of key information, achieving up to 20x compression with minimal performance loss.

- WizardLM-2 8x22B is Microsoft AI's most advanced Wizard model. It demonstrates highly competitive performance compared to leading proprietary models, and it consistently outperforms all existing state-of-the-art open-source models.

- MiniCPM is an End-Side LLM developed by ModelBest Inc. and TsinghuaNLP, with only 2.4B parameters excluding embeddings (2.7B in total).

- Explore InternLM2, an AI tool with open-sourced models! Excel in long-context tasks, reasoning, math, code interpretation, and creative writing. Discover its versatile applications and strong tool utilization capabilities for research, application development, and chat interactions. Upgrade your AI landscape with InternLM2.

- Enhance your NLP capabilities with Baichuan-7B - a groundbreaking model that excels in language processing and text generation. Discover its bilingual capabilities, versatile applications, and impressive performance. Shape the future of human-computer communication with Baichuan-7B.

- OLMo 2 32B: Open-source LLM rivals GPT-3.5! Free code, data & weights. Research, customize, & build smarter AI.

- Enhance language models, improve performance, and get accurate results. WizardLM is the ultimate tool for coding, math, and NLP tasks.

- Technology Innovation Institute has open-sourced Falcon LLM for research and commercial use.

- Qwen2.5 series language models offer enhanced capabilities with larger datasets, more knowledge, better coding and math skills, and closer alignment to human preferences. Open-source and available via API.

- A Trailblazing Language Model Family for Advanced AI Applications. Explore efficient, open-source models with layer-wise scaling for enhanced accuracy.

- With a total of 8B parameters, the model surpasses proprietary models such as GPT-4V-1106, Gemini Pro, Qwen-VL-Max and Claude 3 in overall performance.

- ggml is a tensor library for machine learning to enable large models and high performance on commodity hardware.

- Discover PaLM 2, Google's advanced language model for reasoning, translation, and coding tasks. Built with responsible AI practices, PaLM 2 excels in multilingual collaboration and specialized code generation.

- StableLM Zephyr 3B is a new chat model that represents the latest addition to the StableLM series of lightweight Large Language Models (LLMs) from Stability AI.

- Enhance language models with Giga's on-premise LLM. Powerful infrastructure, OpenAI API compatibility, and data privacy assurance. Contact us now!

- Ship AI features faster with MegaLLM's unified gateway. Access Claude, GPT-5, Gemini, Llama, and 70+ models through a single API. Built-in analytics, smart fallbacks, and usage tracking included.

- Eagle 7B: Soaring past Transformers with 1 Trillion Tokens Across 100+ Languages (RWKV-v5)

- SmolLM is a series of state-of-the-art small language models available in three sizes: 135M, 360M, and 1.7B parameters.

- The Yi Visual Language (Yi-VL) model is the open-source, multimodal version of the Yi Large Language Model (LLM) series, enabling content comprehension, recognition, and multi-round conversations about images.

- XVERSE-MoE-A36B: A multilingual large language model developed by XVERSE Technology Inc.