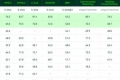

Best GLM-4V-9B Alternatives in 2026

-

ChatGLM-6B is an open bilingual (Chinese and English) model with 6.2B parameters, currently optimized for Chinese question answering and dialogue.

-

GLM-4.5V: Empower your AI with advanced vision. Generate web code from screenshots, automate GUIs, & analyze documents & video with deep reasoning.

-

GLM-130B: An Open Bilingual Pre-Trained Model (ICLR 2023)

-

The new paradigm of development based on MaaS, unleashing AI with our universal model service.

-

CogVLM and CogAgent are powerful open-source visual language models that excel in image understanding and multi-turn dialogue.

-

Yi Visual Language (Yi-VL) model is the open-source, multimodal version of the Yi Large Language Model (LLM) series, enabling content comprehension, recognition, and multi-round conversations about images.

-

BuboGPT is an advanced Large Language Model (LLM) that incorporates multi-modal inputs including text, image and audio, with a unique ability to ground its responses to visual objects.

-

PolyLM is an open-source polyglot LLM that supports 18 languages. It is aimed at developers, researchers, and businesses with multilingual needs.

-

With a total of 8B parameters, the model surpasses proprietary models such as GPT-4V-1106, Gemini Pro, Qwen-VL-Max and Claude 3 in overall performance.

-

Mini-Gemini supports a series of dense and MoE Large Language Models (LLMs) from 2B to 34B, with simultaneous image understanding, reasoning, and generation. The repository is built on LLaVA.

-

WizardLM-2 8x22B is Microsoft AI's most advanced Wizard model. It demonstrates highly competitive performance compared to leading proprietary models, and it consistently outperforms all existing state-of-the-art open-source models.

-

A high-throughput and memory-efficient inference and serving engine for LLMs

-

Qwen2-VL is the multimodal large language model series developed by Qwen team, Alibaba Cloud.

-

C4AI Aya Vision 8B: Open-source multilingual vision AI for image understanding. OCR, captioning, reasoning in 23 languages.

-

Enhance vision-language understanding with MiniGPT-4. Generate image descriptions, create websites, identify humor elements, and more! Discover its versatile capabilities.

-

OpenBioLLM-8B is an advanced open source language model designed specifically for the biomedical domain.

-

Discover the power of GPT4V.net, offering advanced conversation services and multimodal capabilities for seamless browsing. Try it for free!

-

Unlock the power of YaLM 100B, a GPT-like neural network that generates and processes text with 100 billion parameters. Free for developers and researchers worldwide.

-

BAGEL: Open-source multimodal AI from ByteDance-Seed. Understands, generates, edits images & text. Powerful, flexible, comparable to GPT-4o. Build advanced AI apps.

-

A Gradio web UI for Large Language Models. Supports transformers, GPTQ, llama.cpp (GGUF), Llama models.

-

CM3leon: A versatile multimodal generative model for text and images. Enhance creativity and create realistic visuals for gaming, social media, and e-commerce.

-

GPT-4o (“o” for “omni”) is a step towards much more natural human-computer interaction: it accepts as input any combination of text, audio, and image, and generates any combination of text, audio, and image outputs.

-

DeepSeek LLM is an advanced language model comprising 67 billion parameters. It has been trained from scratch on a vast dataset of 2 trillion tokens in both English and Chinese.

-

Unlock the power of large language models with 04-x. Enhanced privacy, seamless integration, and a user-friendly interface for language learning, creative writing, and technical problem-solving.

-

A novel Multimodal Large Language Model (MLLM) architecture, designed to structurally align visual and textual embeddings.

-

Compresses the prompt and KV cache to speed up LLM inference and improve the model's perception of key information, achieving up to 20x compression with minimal performance loss.

-

Discover StableLM, an open-source language model by Stability AI. Generate high-performing text and code on personal devices with small and efficient models. Transparent, accessible, and supportive AI technology for developers and researchers.

-

Qwen2.5 series language models offer enhanced capabilities with larger datasets, more knowledge, better coding and math skills, and closer alignment to human preferences. Open-source and available via API.

-

Cambrian-1 is a family of multimodal LLMs with a vision-centric design.

-

XVERSE-MoE-A36B: A multilingual large language model developed by XVERSE Technology Inc.