What is ModelBench?

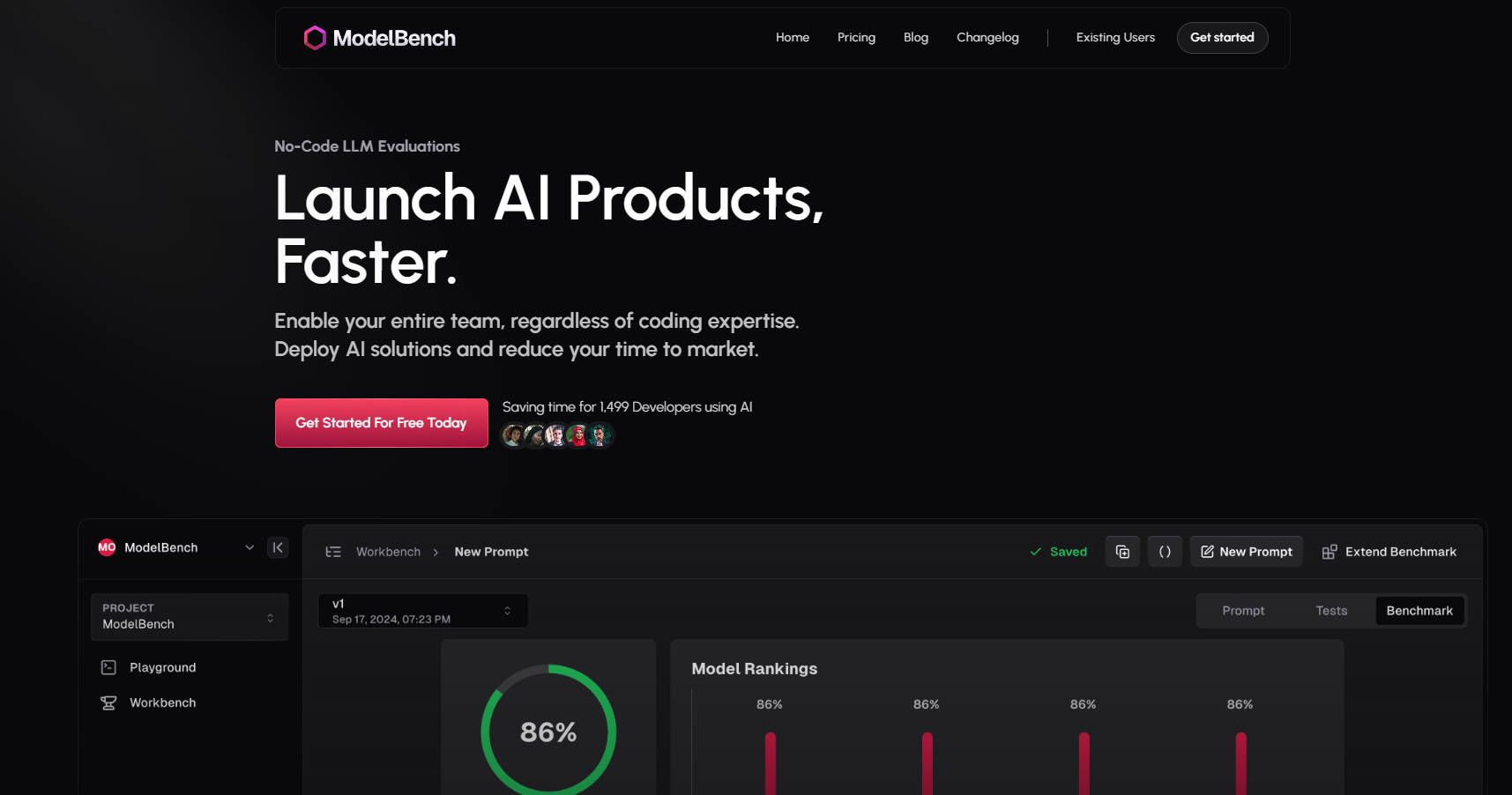

ModelBench is your all-in-one platform for building, testing, and deploying AI solutions faster. Whether you're a product manager, prompt engineer, or developer, ModelBench empowers your team to experiment, compare, and optimize large language models (LLMs) without the hassle of coding.

Why ModelBench?

Save Time: Compare 180+ LLMs side-by-side and identify the best-performing models and prompts in minutes.

No-Code Simplicity: Enable your entire team to experiment and iterate, regardless of technical expertise.

Faster Deployment: Slash development and testing time, reducing your time to market.

Key Features 🚀

✅ Compare 180+ Models Side-by-Side

Test and evaluate multiple LLMs simultaneously to find the perfect fit for your use case.

✅ Craft & Fine-Tune Prompts

Design, refine, and test prompts with immediate feedback from multiple models.

✅ Dynamic Inputs for Scalable Testing

Import datasets from tools like Google Sheets and test prompts across countless scenarios.

✅ Benchmark with Humans or AI

Run evaluations using AI, human reviewers, or a mix of both for reliable results.

✅ Trace & Replay LLM Runs

Monitor interactions, replay responses, and detect low-quality outputs with no-code integrations.

✅ Collaborate with Your Team

Share prompts, results, and benchmarks seamlessly to accelerate development.

How ModelBench Works

Playground:

Compare 180+ models in real-time.

Test prompts and integrate custom tools effortlessly.

Workbench:

Turn experiments into structured benchmarks.

Test prompts at scale with dynamic inputs and versioning.

Benchmarking:

Run multiple rounds of tests across models.

Analyze results to refine and improve your prompts.
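For readers who want a concrete picture of the benchmarking loop described above, here is a minimal Python sketch. It does not use ModelBench or any real provider API: `model_a`, `model_b`, `run_benchmark`, and `contains_product` are hypothetical names invented for illustration, and the "models" are toy string functions standing in for LLM calls. It only shows the general idea of filling a prompt template with dynamic inputs, running it across several models for multiple rounds, and averaging a score per model.

```python
from typing import Callable, Dict, List


# Hypothetical stand-ins for LLM calls; a real setup would call a provider API.
def model_a(prompt: str) -> str:
    return prompt.upper()


def model_b(prompt: str) -> str:
    return prompt[::-1]


def run_benchmark(
    template: str,
    rows: List[Dict[str, str]],
    models: Dict[str, Callable[[str], str]],
    score: Callable[[str, Dict[str, str]], float],
    rounds: int = 1,
) -> Dict[str, float]:
    """Fill the template with each row, run every model, return the mean score per model."""
    if not rows or rounds < 1:
        raise ValueError("need at least one input row and one round")
    totals = {name: 0.0 for name in models}
    for _ in range(rounds):
        for row in rows:
            prompt = template.format(**row)  # dynamic inputs fill the placeholders
            for name, call in models.items():
                totals[name] += score(call(prompt), row)
    runs = rounds * len(rows)
    return {name: total / runs for name, total in totals.items()}


def contains_product(output: str, row: Dict[str, str]) -> float:
    # Toy evaluator (an assumption): 1.0 if the output contains the product name verbatim.
    return 1.0 if row["product"] in output else 0.0


rows = [{"product": "Mug"}, {"product": "LAMP"}]
results = run_benchmark(
    "Describe {product}",
    rows,
    {"model_a": model_a, "model_b": model_b},
    contains_product,
    rounds=2,
)
print(results)
```

In practice the scoring function is where an AI judge or a human reviewer would plug in; the loop structure stays the same.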

Who Is ModelBench For?

Product Managers: Quickly validate AI solutions and reduce time to market.

Prompt Engineers: Fine-tune prompts and benchmark performance across models.

Developers: Experiment with LLMs without complex coding or frameworks.

Use Cases

E-Commerce Chatbots: Test and optimize prompts for customer support across multiple LLMs.

Content Generation: Compare models to find the best fit for generating high-quality, on-brand content.

AI-Powered Tools: Benchmark LLMs for tasks like summarization, translation, or sentiment analysis.

Get Started Today

Join 1,499 developers and teams from companies like Amazon, Google, and Twitch who are already saving time with ModelBench.

ModelBench Alternatives

Evaluate Large Language Models easily with PromptBench. Assess performance, enhance model capabilities, and test robustness against adversarial prompts.

PromptTools is an open-source platform that helps developers build, monitor, and improve LLM applications through experimentation, evaluation, and feedback.

BenchLLM: Evaluate LLM responses, build test suites, and automate evaluations. Enhance AI-driven systems with comprehensive performance assessments.

WildBench is an advanced benchmarking tool that evaluates LLMs on a diverse set of real-world tasks. It's essential for those looking to enhance AI performance and understand model limitations in practical scenarios.

PromptBuilder delivers expert-level LLM results consistently. Optimize prompts for ChatGPT, Claude & Gemini in seconds.