What is Felafax?

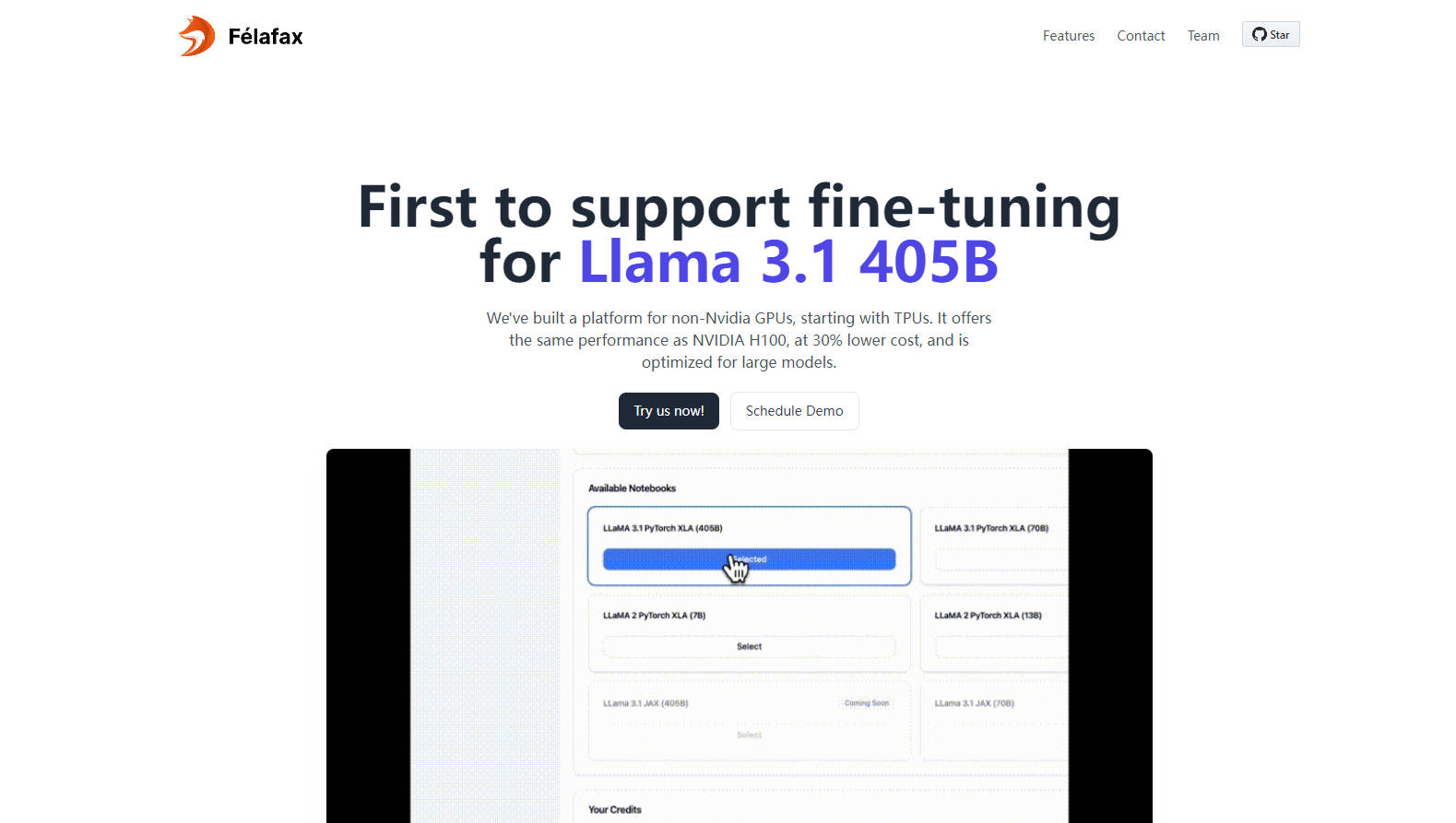

Felafax is a platform for fine-tuning large models, notably Llama 3.1 405B, on non-NVIDIA accelerators such as Google TPUs at significantly lower cost. It streamlines the setup and management of large-scale training clusters and uses a custom non-CUDA XLA framework that, according to Felafax, matches NVIDIA H100 performance at about 30% lower cost. Aimed at both enterprises and startups, it handles orchestration of complex multi-chip training jobs, provides pre-configured environments, and has a JAX implementation on the way for further efficiency gains.

Key Features:

One-Click Large Training Clusters: Instantly create scalable training clusters of 8 to 1024 non-NVIDIA accelerator chips, with orchestration handled for you at any model size.

Performance & Cost-Efficiency: Using a custom non-CUDA XLA framework, Felafax claims NVIDIA H100-equivalent performance at 30% lower cost, a saving that matters most for large models.

Full Customization & Control: Fully customizable Jupyter notebook environments let you tailor each training run while keeping complete control over it.

Advanced Model Partitioning & Orchestration: Optimized for Llama 3.1 405B, Felafax handles model partitioning, distributed checkpointing, and multi-controller training for you.

Pre-configured Environments & Upcoming JAX Implementation: Choose between PyTorch XLA and JAX environments with all dependencies pre-installed and ready to use. Felafax also expects its upcoming JAX implementation to train about 25% faster.

Use Cases:

Entrepreneurial Machine Learning Projects: Startups can achieve state-of-the-art results on TPUs without the overhead or expense of NVIDIA hardware.

Academic Institutions: Universities gain a cost-effective solution for high-performance computing needs, empowering research and education in complex AI models.

Enterprises Scaling AI: Multinational corporations can optimize AI development and deployment by harnessing affordable, high-capacity infrastructure for their Llama 3.1 405B model fine-tuning needs.

Conclusion:

Felafax positions itself as a pioneer of cost-effective, high-performance training on non-NVIDIA accelerators. Whether you're a researcher, a startup, or an enterprise that needs scalable AI infrastructure, you can try its model fine-tuning today: claim the $200 credit and start training on your own terms.

FAQs:

Question: How does Felafax match NVIDIA H100 performance at 30% lower cost?

Answer: Felafax uses a custom non-CUDA XLA framework that optimizes training on alternative accelerators such as Google TPUs, AWS Trainium, AMD GPUs, and Intel GPUs, which it says deliver comparable performance at significantly lower cost.

Question: What can I use Felafax for right now?

Answer: Felafax currently offers cloud-layer setup of AI training clusters, ready-made PyTorch XLA and JAX environments, and simplified fine-tuning for Llama 3.1 models. Its JAX implementation is coming soon.

Question: Can Felafax handle large models like Llama 405B?

Answer: Yes. Felafax is optimized for large models, including Llama 3.1 405B, and manages model partitioning, distributed checkpoints, and training orchestration to ease the complexity of multi-chip training.


Felafax Alternatives


Lambda AI Cloud: High-performance GPU compute with pre-configured environments and transparent pricing.

Lepton AI: A platform for building AI applications quickly, with simplified development workflows and secure data management.

LoRAX (LoRA eXchange): A framework for serving thousands of fine-tuned models on a single GPU, dramatically reducing serving cost without compromising throughput or latency.

FriendliAI PeriFlow: An LLM serving engine, billed by FriendliAI as the fastest available, with flexible deployment options.

LLaMA Factory: An open-source, low-code framework for fine-tuning large models that integrates widely used fine-tuning techniques and supports zero-code fine-tuning through a Web UI.